Semantic vs. SERP clustering in Python

Don’t waste thousands of dollars on SERP API credits to cluster a 100,000-keyword list when a local semantic model can handle the heavy lifting for free. If you are managing a massive WooCommerce catalog or a programmatic SEO project, the choice between semantic and SERP clustering isn’t just about accuracy – it’s about balancing compute cost against search intent precision. In my experience, most SEOs default to expensive SERP tools far too early in the research phase.

Semantic clustering is your tool for discovery and scale, while SERP clustering is your tool for final content architecture and preventing keyword cannibalization. I often see technical teams over-complicate this by trying to scrape Google for every long-tail variation, when they could have pruned 80% of the noise using a simple Python script and a pre-trained transformer model.

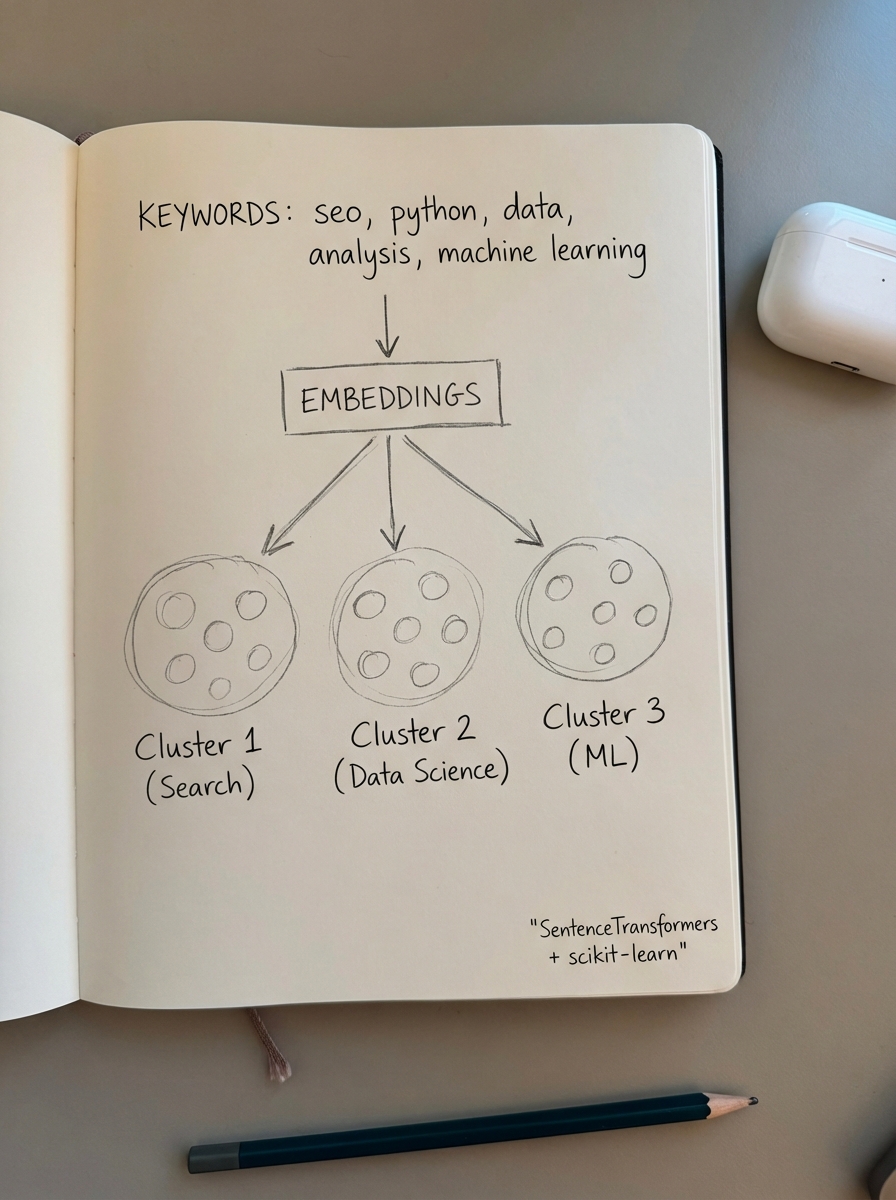

Semantic keyword clustering with SentenceTransformers

Semantic clustering uses Natural Language Processing (NLP) to group keywords based on their linguistic meaning. I find this approach most effective when I have a raw export of 50,000+ keywords and need to identify broad “topic buckets” without spending a dime on search APIs. This method relies on embeddings – mathematical vectors that represent the “meaning” of a word or phrase. By calculating the cosine similarity between these vectors, we can group keywords like “men’s running shoes” and “male athletic footwear” even if they don’t share a single word.
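To make the cosine-similarity step concrete, here is a minimal pure-Python sketch. Cosine similarity is the dot product of two vectors divided by the product of their lengths, so it compares direction rather than magnitude: 1.0 means the vectors point the same way, values near 0 mean they are unrelated. The three-dimensional vectors below are invented for illustration; a real model such as all-MiniLM-L6-v2 produces 384-dimensional embeddings, and at scale you would use a vectorized library function instead of this loop.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # undefined for zero vectors; treat as "no similarity"
    return dot / (norm_a * norm_b)


# Toy vectors standing in for real embeddings (made-up numbers, not model output).
running_shoes = [0.9, 0.1, 0.2]
athletic_footwear = [0.8, 0.2, 0.25]
coffee_beans = [0.1, 0.9, 0.3]

print(round(cosine_similarity(running_shoes, athletic_footwear), 3))  # high: similar direction
print(round(cosine_similarity(running_shoes, coffee_beans), 3))       # low: different direction
```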

The primary objection to semantic clustering is its potential for “intent blindness.” A semantic model might group “how to roast coffee” with “buy roasted coffee” because the linguistic context is similar. However, from an SEO perspective, these represent two entirely different stages of the funnel. Despite this, the cost-effectiveness is hard to beat when you are dealing with enterprise-level datasets where API costs for SERP scraping would be prohibitive.
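Intent blindness can at least be flagged cheaply before you pay for SERP data. The sketch below is a hypothetical heuristic, not part of the workflow described here: it labels keywords by modifier words and flags semantic clusters that mix transactional and informational queries. The modifier sets and function names are assumptions you would adapt to your niche.

```python
# Hypothetical modifier lists; extend them for your own niche.
TRANSACTIONAL = {"buy", "price", "cheap", "deal", "shop", "order"}
INFORMATIONAL = {"how", "what", "why", "guide", "tutorial"}


def intent_of(keyword: str) -> str:
    """Crude rule-based intent label from modifier words in the keyword."""
    words = set(keyword.lower().split())
    if words & TRANSACTIONAL:
        return "transactional"
    if words & INFORMATIONAL:
        return "informational"
    return "unknown"


def mixed_intent_clusters(clusters: dict[int, list[str]]) -> list[int]:
    """Return IDs of clusters whose keywords carry more than one known intent label."""
    flagged = []
    for cluster_id, keywords in clusters.items():
        labels = {intent_of(kw) for kw in keywords} - {"unknown"}
        if len(labels) > 1:
            flagged.append(cluster_id)
    return flagged


# The example from the text: a semantic model may put both coffee queries together.
clusters = {
    0: ["how to roast coffee", "buy roasted coffee"],
    1: ["men's running shoes", "male athletic footwear"],
}
print(mixed_intent_clusters(clusters))  # → [0]
```

Flagged clusters are natural candidates for the SERP validation step rather than proof of mixed intent; keyword lists without explicit modifiers will simply come back as "unknown".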

Python workflow for semantic clustering

To run this locally, you’ll need the sentence-transformers, scikit-learn, and pandas libraries. I prefer using the all-MiniLM-L6-v2 model for SEO tasks because it is incredibly fast and efficient for short-form queries common in keyword research.

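```python
from sentence_transformers import SentenceTransformer
from sklearn.cluster import AgglomerativeClustering
import pandas as pd

# Load your keyword data
keywords = [
    "men's running shoes", "male athletic footwear", "best marathon sneakers",
    "buy coffee beans", "organic espresso roast", "whole bean coffee",
]

# Initialize the model (downloaded from the Hugging Face Hub on first use)
model = SentenceTransformer('all-MiniLM-L6-v2')

# Generate embeddings: one vector per keyword
embeddings = model.encode(keywords)

# Perform clustering.
# AgglomerativeClustering is used with a distance threshold
# to avoid pre-defining the number of clusters.
# With metric='cosine', distance = 1 - cosine similarity, so a threshold of 0.45
# merges groups whose average pairwise similarity is above 0.55.
# (`metric` replaced `affinity` in scikit-learn 1.2; older versions use affinity='cosine'.)
cluster_model = AgglomerativeClustering(
    n_clusters=None,
    distance_threshold=0.45,
    metric='cosine',
    linkage='average',
)
clusters = cluster_model.fit_predict(embeddings)

# Review results
df = pd.DataFrame({'keyword': keywords, 'cluster': clusters})
print(df.sort_values('cluster'))
```

This method provides a massive head start in keyword clustering with machine learning by organizing raw data into a manageable structure. It allows you to visualize the thematic density of your keyword list before you commit resources to specific content pieces. One caveat for very large lists: without a connectivity constraint, agglomerative clustering works from all pairwise distances, so memory grows quadratically with the number of keywords (50,000 keywords means roughly 1.25 billion pairs). At that scale you may need to cluster in batches, for example per seed topic.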
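A quick way to read thematic density without plotting anything is to count how many keywords land in each cluster. The helper below is a minimal pure-Python sketch; `summarize_clusters` is a hypothetical name, and the labels in the example are invented. In practice you would pass the `clusters` array returned by `fit_predict()` in the script above.

```python
from collections import defaultdict


def summarize_clusters(keywords: list[str], labels: list[int]) -> list[tuple[int, int, list[str]]]:
    """Group keywords by cluster label; return (label, size, keywords), largest first."""
    groups: dict[int, list[str]] = defaultdict(list)
    for keyword, label in zip(keywords, labels):
        groups[int(label)].append(keyword)  # int() also accepts NumPy integer labels
    return sorted(
        ((label, len(kws), kws) for label, kws in groups.items()),
        key=lambda item: item[1],
        reverse=True,
    )


keywords = ["men's running shoes", "male athletic footwear", "best marathon sneakers",
            "buy coffee beans", "organic espresso roast", "whole bean coffee"]
# Invented labels for illustration, not real model output.
labels = [1, 1, 1, 0, 0, 2]

for label, size, kws in summarize_clusters(keywords, labels):
    print(f"cluster {label}: {size} keyword(s) -> {', '.join(kws)}")
```

Large clusters point to your densest themes; long tails of single-keyword clusters often mean the distance threshold is too strict.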

SERP-based clustering for intent precision

SERP clustering is the gold standard for production-level SEO because it analyzes what Google actually displays to users. If two keywords share five or more URLs in the top 10 results, the search engine is signaling that those queries represent the same intent and should likely be targeted on the same page. I use SERP clustering specifically to decide whether a subtopic deserves its own unique URL or should be merged into a pillar page.
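The shared-URL rule of thumb above is easy to express directly. This sketch uses hypothetical function names and invented URLs; it also converts a shared-URL count into the equivalent Jaccard similarity, assuming both keywords have full lists of ten distinct URLs (Jaccard = shared / (20 - shared)).

```python
def shared_url_count(serp_a: list[str], serp_b: list[str], top_n: int = 10) -> int:
    """Count URLs that appear in the top_n results of both keywords."""
    return len(set(serp_a[:top_n]) & set(serp_b[:top_n]))


def shares_intent(serp_a: list[str], serp_b: list[str], min_shared: int = 5) -> bool:
    """Rule of thumb from the text: 5+ shared top-10 URLs suggests one target page."""
    return shared_url_count(serp_a, serp_b) >= min_shared


def jaccard_for_shared(shared: int, top_n: int = 10) -> float:
    """Jaccard similarity of two lists of top_n distinct URLs that share `shared` of them."""
    return shared / (2 * top_n - shared)


# Hypothetical top-10 results: five URLs in common, five unique to each keyword.
common = [f"shared.com/{i}" for i in range(5)]
serp_a = common + [f"a.com/{i}" for i in range(5)]
serp_b = common + [f"b.com/{i}" for i in range(5)]

print(shared_url_count(serp_a, serp_b), shares_intent(serp_a, serp_b))  # 5 True
print(f"{jaccard_for_shared(5):.2f}, {jaccard_for_shared(6):.2f}")      # 0.33, 0.43
```

The two measures scale differently: five shared URLs on full top-10 lists is a Jaccard similarity of only about 0.33, while the 0.4 cut-off in the Jaccard example below requires six or more shared URLs. Whichever convention you pick, keep it consistent across the pipeline.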

Some argue that SERP data is too volatile to build a long-term strategy, but I believe that reflecting actual search behavior is always superior to purely linguistic models. When entity-based keyword research reveals variations that seem semantically different, SERP clustering often acts as the final judge to prevent unnecessary content creation.

Calculating Jaccard similarity in Python

To perform SERP clustering at scale, you must first retrieve the top 10 URLs for each keyword. Once you have this data, you can calculate the Jaccard similarity to determine the overlap between keyword pairs.
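How you retrieve results depends on your SERP provider, so this sketch assumes you already have an ordered list of result URLs per keyword (that input format is an assumption). It shows one preparation step: normalizing URLs so trivially different variants of the same page (scheme, `www.`, tracking parameters, trailing slash) count as one, then keeping the top 10. Dropping query strings is a judgment call, since some sites use them to distinguish real pages.

```python
from urllib.parse import urlsplit


def normalize_url(url: str) -> str:
    """Reduce a URL to host + path: drops scheme, leading 'www.', query, fragment, trailing slash."""
    # urlsplit only recognizes the host when a scheme or '//' prefix is present.
    parts = urlsplit(url if "//" in url else f"//{url}")
    host = parts.netloc.lower().removeprefix("www.")
    return f"{host}{parts.path.rstrip('/')}"


def top_urls(results: list[str], top_n: int = 10) -> list[str]:
    """Normalize result URLs, drop duplicates while keeping rank order, keep the first top_n."""
    return list(dict.fromkeys(normalize_url(u) for u in results))[:top_n]


raw = ["https://www.Site.com/p1/?utm_source=x", "http://site.com/p1", "blog.com/a1#intro"]
print(top_urls(raw))  # → ['site.com/p1', 'blog.com/a1']
```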

```python
def jaccard_similarity(list1, list2):
    """Share of unique URLs that appear in both SERPs: intersection size / union size."""
    set1, set2 = set(list1), set(list2)
    union = set1 | set2
    if not union:
        return 0.0  # two empty SERPs: nothing to compare, avoid dividing by zero
    return len(set1 & set2) / len(union)


# Example SERP URLs for two keywords
serp_a = ["site.com/p1", "blog.com/a1", "news.com/x", "shop.com/y"]
serp_b = ["site.com/p1", "blog.com/a1", "wiki.com/z", "shop.com/y"]

similarity = jaccard_similarity(serp_a, serp_b)
print(f"SERP Overlap: {similarity:.2f}")  # 3 shared / 5 unique URLs -> 0.60

# Threshold check: if similarity > 0.4, consider grouping them.
# With two full top-10 lists, that means at least 6 shared URLs (6/14 ≈ 0.43).
```

In a professional environment, you would build a graph where keywords are nodes and edges exist only where similarity exceeds your chosen threshold. For those who want to skip the manual coding and get straight to the architecture, a free SERP keyword clustering tool can automate the scraping and community detection phases.
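The graph idea can be sketched with the standard library alone. This version groups keywords into connected components using union-find, a simpler stand-in for community detection: any chain of above-threshold edges ends up in one group, so a single borderline pair can merge two otherwise distinct topics. A community detection algorithm such as Louvain (available in networkx) is less prone to that chaining. Function names and SERP data are illustrative.

```python
from itertools import combinations


def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity of two URL sets (0.0 if both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def serp_clusters(serps: dict[str, list[str]], threshold: float = 0.4) -> list[list[str]]:
    """Connected components of a graph whose edges join keywords with Jaccard > threshold."""
    parent = {kw: kw for kw in serps}  # union-find forest: each keyword starts alone

    def find(kw: str) -> str:
        # Walk up to the component's root, halving the path as we go.
        while parent[kw] != kw:
            parent[kw] = parent[parent[kw]]
            kw = parent[kw]
        return kw

    url_sets = {kw: set(urls) for kw, urls in serps.items()}
    # Every pair is compared, so cost grows quadratically with the keyword count.
    for kw_a, kw_b in combinations(serps, 2):
        if jaccard(url_sets[kw_a], url_sets[kw_b]) > threshold:
            parent[find(kw_a)] = find(kw_b)  # an edge merges the two components

    groups: dict[str, list[str]] = {}
    for kw in serps:
        groups.setdefault(find(kw), []).append(kw)
    return list(groups.values())


serps = {
    "men's running shoes": ["site.com/p1", "blog.com/a1", "news.com/x", "shop.com/y"],
    "male athletic footwear": ["site.com/p1", "blog.com/a1", "wiki.com/z", "shop.com/y"],
    "buy coffee beans": ["coffee.com/shop", "beans.com/buy", "roast.com/beans"],
}
print(serp_clusters(serps))  # the two shoe keywords together, the coffee keyword alone
```

Because every pair is compared, this is practical for hundreds or a few thousand keywords, which is one more reason to run SERP clustering on a pre-filtered list.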

Comparing the two methodologies

Choosing between these methods requires a clear understanding of your current project stage. Semantic clustering is best for large-scale ideation where you are dealing with 50,000 or more keywords. It is essentially free to run on your local hardware and offers extremely high speed, making it ideal for the initial discovery phase of a project. The trade-off is that it focuses on linguistic relevance rather than how a search engine actually groups topics.

SERP clustering is better suited for the final mapping of your site structure and individual content pieces. While it is slower due to API latency and carries a higher cost per keyword, its intent relevance is significantly higher. This makes it the superior choice for preventing internal competition and ensuring that every page on your site has a distinct purpose.

The hybrid workflow for enterprise scale

I rarely use just one method in isolation. The most efficient way to optimize a keyword research workflow is a two-step hybrid approach that captures the best of both worlds.

- Start with semantic pre-clustering to reduce a list of 100,000 keywords into a few hundred broad thematic buckets using the Python logic mentioned earlier.

- Identify the “head” keywords from each of those semantic buckets.

- Run those representative keywords through a SERP clustering tool to verify whether those buckets should stay unified or be split into separate, intent-driven pages.

- Finalize your content map based on the validated SERP data.
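The steps above can be sketched as a single function. Everything here is an assumption for illustration: `validate_buckets` and `fetch_top_urls` are hypothetical names, `fetch_top_urls` stands in for whichever SERP API or tool you use, "head" is taken to mean the highest-volume keyword, and the SERP and volume data are invented. The buckets would come from the semantic clustering step.

```python
from typing import Callable


def jaccard(a: list[str], b: list[str]) -> float:
    """Jaccard similarity of two URL lists (0.0 if both are empty)."""
    set_a, set_b = set(a), set(b)
    union = set_a | set_b
    return len(set_a & set_b) / len(union) if union else 0.0


def validate_buckets(
    buckets: dict[int, list[str]],
    volumes: dict[str, int],
    fetch_top_urls: Callable[[str], list[str]],
    reps_per_bucket: int = 3,
    threshold: float = 0.4,
) -> dict[int, dict[str, list[str]]]:
    """Check each semantic bucket's top keywords against its head keyword's SERP.

    'keep' keywords overlap the head's SERP above `threshold`; 'split' keywords
    may deserve their own page. Only `reps_per_bucket` keywords per bucket are
    sent to the SERP source, which is where the API credits are saved.
    """
    report: dict[int, dict[str, list[str]]] = {}
    for bucket_id, keywords in buckets.items():
        if not keywords:
            continue
        # Representatives = highest-volume keywords; the first one is the head.
        reps = sorted(keywords, key=lambda kw: volumes.get(kw, 0), reverse=True)[:reps_per_bucket]
        head, others = reps[0], reps[1:]
        head_urls = fetch_top_urls(head)
        keep, split = [head], []
        for kw in others:
            if jaccard(head_urls, fetch_top_urls(kw)) > threshold:
                keep.append(kw)
            else:
                split.append(kw)
        report[bucket_id] = {"keep": keep, "split": split}
    return report


# Invented data: one semantic bucket that mixes two intents.
FAKE_SERPS = {
    "buy coffee beans": ["shop.com/beans", "roaster.com/buy", "market.com/coffee", "beans.com"],
    "whole bean coffee": ["shop.com/beans", "roaster.com/buy", "market.com/coffee", "wiki.com/bean"],
    "how to roast coffee": ["blog.com/roast", "video.com/roast", "wiki.com/roasting"],
}
buckets = {0: ["how to roast coffee", "buy coffee beans", "whole bean coffee"]}
volumes = {"buy coffee beans": 5400, "whole bean coffee": 2900, "how to roast coffee": 1900}

print(validate_buckets(buckets, volumes, lambda kw: FAKE_SERPS.get(kw, [])))
# → {0: {'keep': ['buy coffee beans', 'whole bean coffee'], 'split': ['how to roast coffee']}}
```

A bucket with an empty `split` list stays unified; anything in `split` is a candidate for its own intent-driven page. Comparing representatives only against the head is a simplification; you could instead run full pairwise SERP grouping inside each bucket.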

This approach allows you to process enterprise-level datasets without the enterprise-level API bill, ensuring you only spend credits on the most important validation steps.

Implementing clusters into your content strategy

Once you have your clusters, the next step is moving from data to execution. At ContentGecko, we focus on turning these clusters into automated, catalog-synced content for WooCommerce stores. If your clustering reveals a high-intent group of keywords like “best organic coffee for cold brew,” you shouldn’t just write a one-off post. You should build a pillar-cluster architecture that connects those keywords to your specific product SKUs.

For those who want to accelerate the content production phase, the AI SEO content writer can take a cluster of keywords and generate comprehensive articles that respect the intent identified during your SERP analysis. This ensures that the technical precision of your clustering work isn’t lost during the writing process.

TL;DR

Semantic clustering in Python is the most efficient way to group massive keyword lists by meaning, quickly and without paying for API data. SERP clustering is essential for final intent mapping to avoid content cannibalization, though it requires paid API data. For optimal results, use semantic clustering to prune your raw list and then use the ContentGecko SERP clustering tool to finalize your content map based on real-time search engine behavior.