How to use LLM search to increase WooCommerce product discovery

LLM search is fundamentally changing how customers find products by replacing rigid keyword matching with semantic intent and retrieval-augmented generation (RAG). For WooCommerce merchants, this means that having a fast site is no longer enough; your catalog content must be structured as a high-fidelity data source that AI models can easily ingest, cite, and recommend to shoppers.

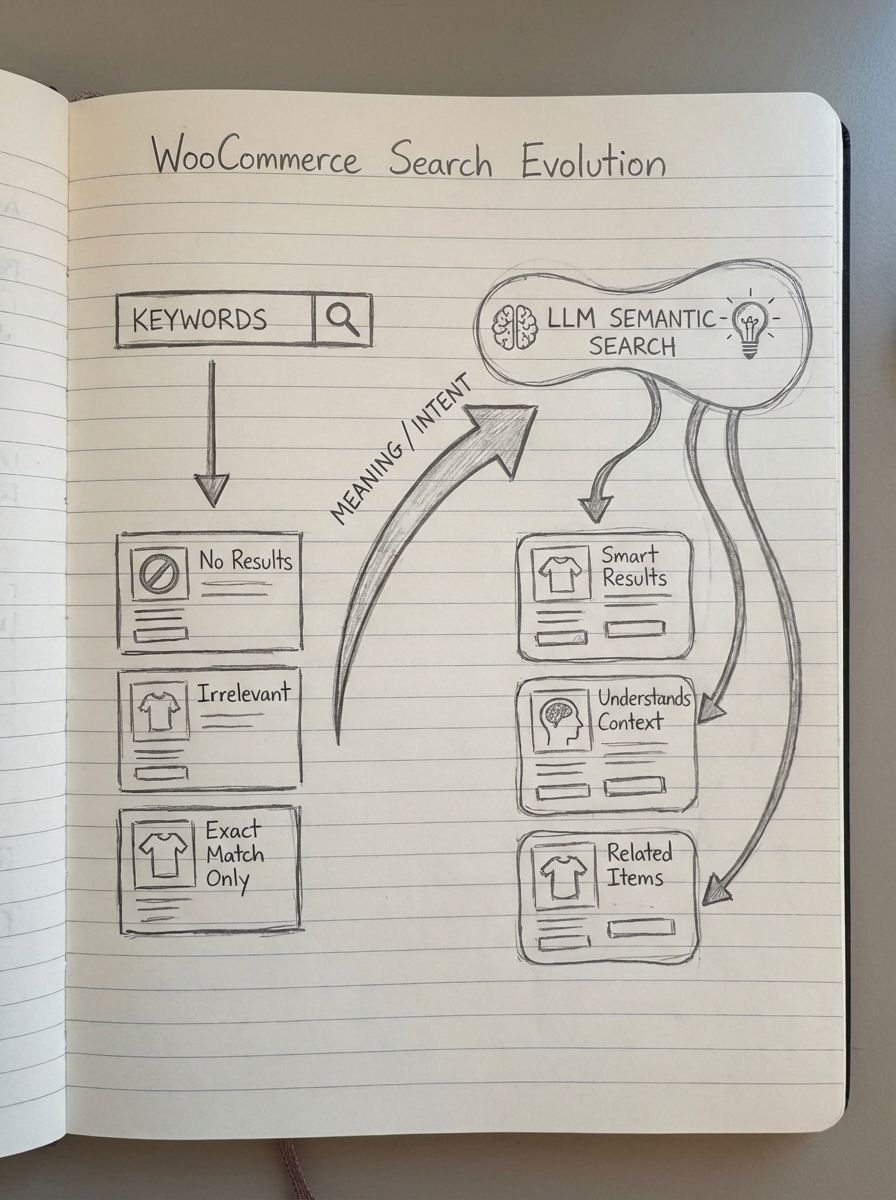

The shift from keyword matching to semantic retrieval

Traditional ecommerce search operates on keyword matching, which often leads to missed opportunities. If a user searches for “lightweight summer footwear” but your product is titled “Breathable Canvas Slip-on,” the default WordPress search, which runs SQL LIKE matching against post titles and content, will miss the connection unless those exact words appear somewhere in the listing. I’ve seen this lead to high bounce rates even when the store had the exact inventory the customer wanted. LLM-powered search addresses this with effective metadata strategies for LLMO that capture the underlying meaning of a query rather than just its characters.

Instead of looking for letters, LLM-powered systems use mathematical representations of meaning known as vector embeddings. In our experience, retailers using semantic grouping for product descriptions have seen up to a 43% increase in organic traffic because the search engine understands that different terms often share the same conceptual intent. In the context of WooCommerce, this technology manifests in two primary ways:

- Replacing the default, limited search bar with LLM-powered plugins that understand natural language.

- Appearing in the generative search results of platforms like Google, Perplexity, or ChatGPT when users ask for specific product recommendations.
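To make the idea of vector embeddings concrete, here is a minimal Python sketch. The three-dimensional vectors are hand-written placeholders, not output from a real embedding model (real embeddings have hundreds or thousands of dimensions), and the product titles other than the slip-on are hypothetical. It only illustrates that ranking by cosine similarity can match a query to a product it shares no keywords with.

```python
import math

# Hypothetical, hand-written "embeddings". The dimensions loosely stand for
# (footwear, warm-weather, formal). A real system would get these from a model.
PRODUCT_VECTORS = {
    "Breathable Canvas Slip-on": [0.9, 0.8, 0.1],
    "Insulated Leather Winter Boot": [0.9, 0.1, 0.3],
    "Silk Evening Scarf": [0.0, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_products(query_vector):
    """Return product titles ordered from most to least similar."""
    scored = [
        (cosine_similarity(query_vector, vector), title)
        for title, vector in PRODUCT_VECTORS.items()
    ]
    return [title for _, title in sorted(scored, reverse=True)]


# Placeholder vector standing in for the query "lightweight summer footwear".
query = [0.8, 0.9, 0.0]
ranking = rank_products(query)
print(ranking)
```

With these placeholder numbers the slip-on ranks first even though the query and the title have no words in common, which is the behavior keyword search cannot reproduce.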

Why your WooCommerce catalog might be invisible to AI

Most WooCommerce stores suffer from a bloated architecture filled with duplicate pages or thin product descriptions that offer no context. When an AI crawler indexes your site, the system behind it looks for relationships between entities: which product belongs to which category, what it is used for, and who it is meant for. If your product page only contains a price and a basic bulleted list of specs, the AI lacks the connective tissue needed to recommend that product for complex or conversational user queries.

I have always argued that optimizing your ecommerce category names is significantly more important than tweaking individual product pages. Category pages provide the thematic structure and topical authority that LLMs require to categorize your store. An AI is rarely looking for a single SKU in isolation; it is looking for a comprehensive solution. A well-structured category page that explains why a group of products belongs together provides the semantic context necessary for a model to rank you as an authority. If your category names remain vague, such as “Accessories” instead of “Waterproof Hiking Accessories for Winter,” you are essentially hiding your inventory from modern LLM search engines.

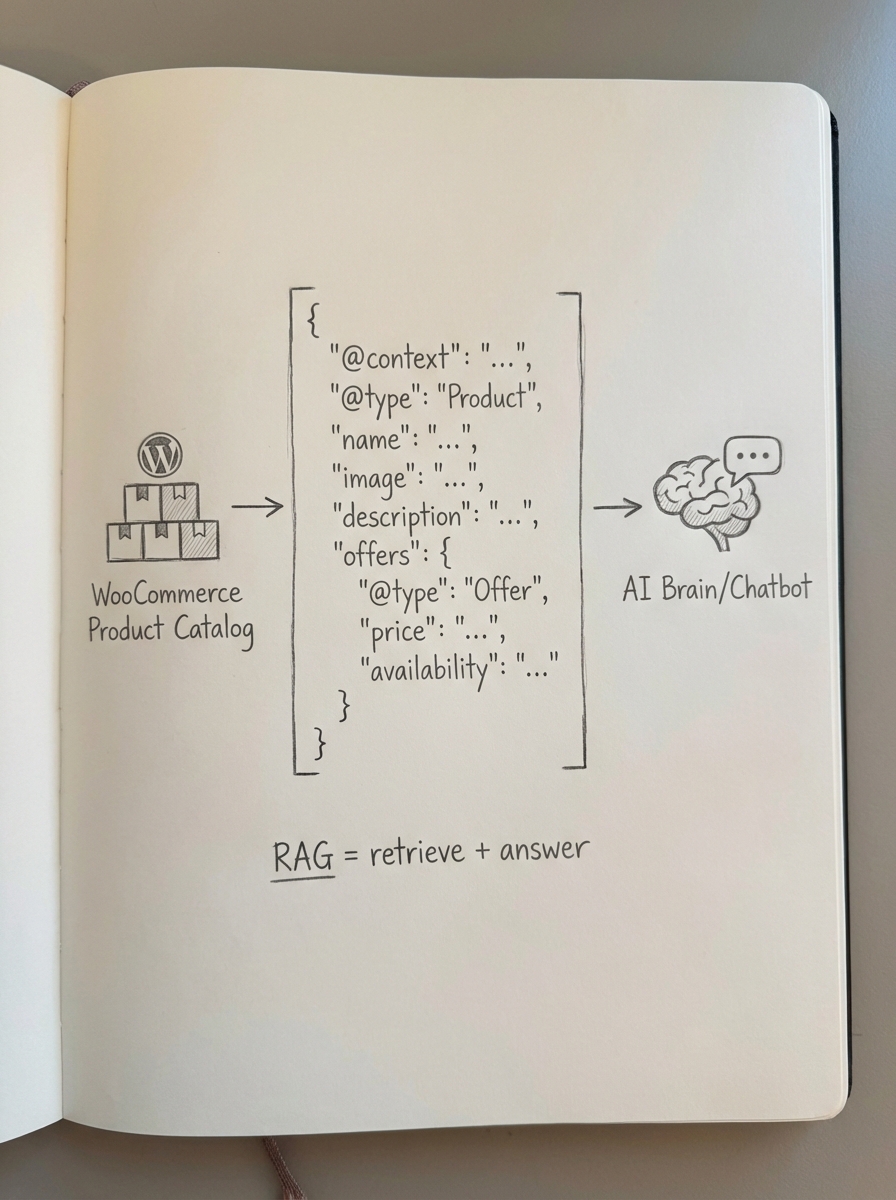

Structuring data for RAG and AI discovery

To win in the era of large language model optimization, you must treat your website like a structured knowledge base rather than a simple collection of HTML pages. This is where Retrieval-Augmented Generation (RAG) becomes the dominant framework. These systems retrieve specific facts from your site to generate a natural language answer for the user. We recommend a few specific technical shifts to ensure your data is retrieval-ready.

Implement deep schema markup

You cannot rely on search engines to guess what your product does or who it is for. You must provide explicit signals through structured schema markup on your product, category, and blog pages. This makes your content extractable for AI models. When a retrieval system finds structured JSON-LD, it can cite your price, stock status, and expert advice with much higher accuracy. This transparency reduces the risk of AI hallucinations and makes it more likely that your brand is the one cited in the response.
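As an illustration of what such markup looks like, the following Python sketch builds a schema.org Product object with a nested Offer and wraps it in a JSON-LD script tag. The function name, the sample product, and the example.com URL are assumptions made for this example. On a live store the markup would normally be generated inside WordPress, not by a standalone script.

```python
import json


def product_jsonld(name, description, price, currency, in_stock, url):
    """Build a schema.org Product dictionary with a single Offer."""
    availability = (
        "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    )
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
            "url": url,
        },
    }


# Hypothetical product data, used only for illustration.
markup = product_jsonld(
    name="Breathable Canvas Slip-on",
    description="Lightweight canvas shoe for warm-weather walking.",
    price=24.5,
    currency="USD",
    in_stock=True,
    url="https://example.com/product/breathable-canvas-slip-on/",
)

# Escape "</" so text inside the JSON cannot close the script tag early.
payload = json.dumps(markup, indent=2).replace("</", "<\\/")
script_tag = '<script type="application/ld+json">' + payload + "</script>"
print(script_tag)
```

Check what your theme and plugins already output before adding more, since duplicate Product markup on the same page can conflict.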

Move to a conversational content hierarchy

LLMs prioritize content that mimics human dialogue, making a Q&A structure highly effective. Instead of just listing features, we suggest using headers like “Who is this professional espresso machine for?” or “How does this moisturizer handle sensitive skin?” This alignment with how users interact with AI chatbots is a core part of adapting website architecture for LLM search. We have found that these question-based clusters significantly increase the likelihood of your content being featured in AI overviews.
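Question-based sections can also be exposed as structured data. The sketch below, under the assumption that you keep your questions and answers as simple pairs, turns them into a schema.org FAQPage object. The answer text is placeholder copy, and `faq_jsonld` is a hypothetical helper name.

```python
import json


def faq_jsonld(qa_pairs):
    """Build a schema.org FAQPage dictionary from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


# Placeholder answers; replace them with the copy that appears on the page.
faq = faq_jsonld(
    [
        (
            "Who is this professional espresso machine for?",
            "Home baristas who want cafe-style pressure control.",
        ),
        (
            "How does this moisturizer handle sensitive skin?",
            "It is fragrance-free and tested on sensitive skin.",
        ),
    ]
)
print(json.dumps(faq, indent=2))
```

The marked-up questions and answers should match the text visible on the page, otherwise the markup misrepresents the content.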

Catalog-aware internal linking

AI crawlers use internal links to understand the hierarchy and commercial intent of your site. If your blog posts about seasonal trends do not link directly to the relevant product categories, the LLM may fail to connect your informational authority with your transactional products. At ContentGecko, we facilitate this by using our WordPress connector plugin to sync blog content with the WooCommerce catalog, creating a seamless path from a user’s initial question to the final purchase.
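A quick way to spot the gap described above is to audit which category pages a blog post links to. This Python sketch is a generic illustration, not a description of how the ContentGecko plugin works. The helper names, sample HTML, and category paths are assumptions, and the `/product-category/` prefix is simply the WooCommerce default permalink base.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkCollector(HTMLParser):
    """Collects the URL paths of all <a href="..."> links in an HTML string."""

    def __init__(self):
        super().__init__()
        self.paths = set()

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Compare on path only, ignoring domain and trailing slash.
                self.paths.add(urlparse(href).path.rstrip("/"))


def missing_category_links(post_html, category_paths):
    """Return the category paths that the post does not link to."""
    collector = LinkCollector()
    collector.feed(post_html)
    return [
        path for path in category_paths if path.rstrip("/") not in collector.paths
    ]


# Hypothetical blog post and the categories it is expected to link to.
post_html = (
    '<p>Our <a href="https://example.com/product-category/sandals/">'
    "summer sandals</a> guide covers this season's trends.</p>"
)
expected = ["/product-category/sandals/", "/product-category/sun-hats/"]
missing = missing_category_links(post_html, expected)
print(missing)
```

Here the post links to the sandals category but not to sun hats, so the second path is reported as missing.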

The role of catalog-aware content in discovery

The biggest opportunity in ecommerce SEO today is not technical tweaking, but producing high-quality, catalog-aware blog content. Many merchants make the mistake of writing generic thought leadership that has no connection to their actual SKUs. While this content might generate some traffic, it rarely translates into product discovery because it doesn’t solve the user’s ultimate problem of finding and buying the right product. This is one of the clearest differences you see when comparing traditional SEO vs LLMO techniques.

We focus on creating content that is synced directly to your inventory. This means that if a product goes out of stock or a price changes, the blog content reflecting those details updates automatically. This is critical because LLMs are increasingly sensitive to factual accuracy and real-time data. If an AI recommends a product from your site and the link is broken or the price is wrong, the systems behind that model are likely to treat your site as a less reliable source, leading to lower visibility over time.
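The principle behind synced content can be sketched in a few lines of Python: the sentence in the post is rendered from current product data instead of being typed by hand. The dictionary below mirrors field names from the WooCommerce REST API product object (`name`, `price`, `stock_status`, `permalink`) as I understand them, so verify them against your store’s API version. The function name and sample data are hypothetical, and this is not the ContentGecko implementation.

```python
def render_product_mention(product, currency_symbol="$"):
    """Render a product sentence from current catalog data.

    `product` is assumed to be a dict shaped like a WooCommerce REST API
    product object, where `price` is a string and `stock_status` is one of
    "instock", "outofstock", or "onbackorder".
    """
    name = product["name"]
    if product["stock_status"] != "instock":
        # Avoid advertising a price or link for something shoppers cannot buy.
        return f"{name} is currently unavailable."
    link = product["permalink"]
    price = product["price"]
    return f'<a href="{link}">{name}</a> is available for {currency_symbol}{price}.'


# Hypothetical catalog entries, used only for illustration.
in_stock = {
    "name": "Breathable Canvas Slip-on",
    "price": "24.50",
    "stock_status": "instock",
    "permalink": "https://example.com/product/breathable-canvas-slip-on/",
}
sold_out = dict(in_stock, stock_status="outofstock")

print(render_product_mention(in_stock))
print(render_product_mention(sold_out))
```

Because the sentence is generated at render time, a price change or stock-out in the catalog changes the published copy without anyone editing the post.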

Measuring success in the new search landscape

Traditional metrics like keyword rankings are becoming less reliable as search becomes more personalized and conversational. Instead, the focus is shifting toward measuring user intent in search and tracking AI citation rates. You need to know if you are being mentioned when someone asks ChatGPT for the “best eco-friendly yoga mats” or if your category pages are appearing in Google’s AI-generated summaries.


You can track these new signals using tools for monitoring LLMO performance that specifically look for brand mentions across various language models. If you are just starting this transition, I recommend using our ecommerce SEO dashboard to identify which pages are already getting impressions. This allows you to prioritize high-potential URLs for FAQ additions and schema enhancements, ensuring you stay ahead of the curve as the search landscape continues to evolve.
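If you want a rough feel for an AI citation rate before adopting a dedicated tool, it can be computed by hand. The sketch below assumes you have already collected a set of AI answers for your target prompts, for example by pasting them into a list. The brand name “Acme Yoga” and the sample answers are invented for illustration, and the brand matching is a simple whole-phrase, case-insensitive check.

```python
import re


def citation_rate(answers, brand):
    """Share of answers (0.0 to 1.0) that mention the brand at least once."""
    if not answers:
        return 0.0
    pattern = re.compile(r"\b" + re.escape(brand) + r"\b", re.IGNORECASE)
    cited = sum(1 for answer in answers if pattern.search(answer))
    return cited / len(answers)


# Invented answers to the prompt "best eco-friendly yoga mats".
answers = [
    "Popular options include the cork mat from Acme Yoga.",
    "Many reviewers recommend natural rubber mats.",
    "acme yoga and two other brands offer recycled mats.",
    "Look for mats that are free of PVC.",
]
rate = citation_rate(answers, "Acme Yoga")
print(f"Cited in {rate:.0%} of answers")
```

Tracking this number for the same prompts over time gives a simple trend line, although answers vary between runs, so a small sample should be read with caution.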

TL;DR

- Optimize for the semantic meaning behind queries rather than just exact keyword matches to capture broader search intent.

- Prioritize the optimization of your category pages to provide the thematic context that LLMs use to rank your store as an authority.

- Use comprehensive schema markup to transform your product catalog into a reliable, extractable data source for RAG systems.

- Deploy catalog-aware blog content to ensure your store remains accurate and relevant in AI-generated product recommendations.

- ContentGecko automates the planning and publishing of catalog-synced content, helping you maintain visibility in both Google and LLM search results without the manual overhead.