Content update frequency for LLMO: How to stay visible in AI search

The era of “set it and forget it” SEO is dead. If your content isn’t refreshed to align with how Large Language Models (LLMs) retrieve information, your brand will effectively vanish from the conversational search results that now drive significant ecommerce traffic. In my experience working with WooCommerce merchants, the biggest misconception is that LLM products like ChatGPT or Perplexity are static databases. They are not. Through Retrieval-Augmented Generation (RAG), these tools search the web at query time and synthesize answers from what they find. If your content is stale, or if your publishing cadence is sporadic, you are essentially opting out of the 1,200% increase in AI-generated answer traffic we have seen over the last year.

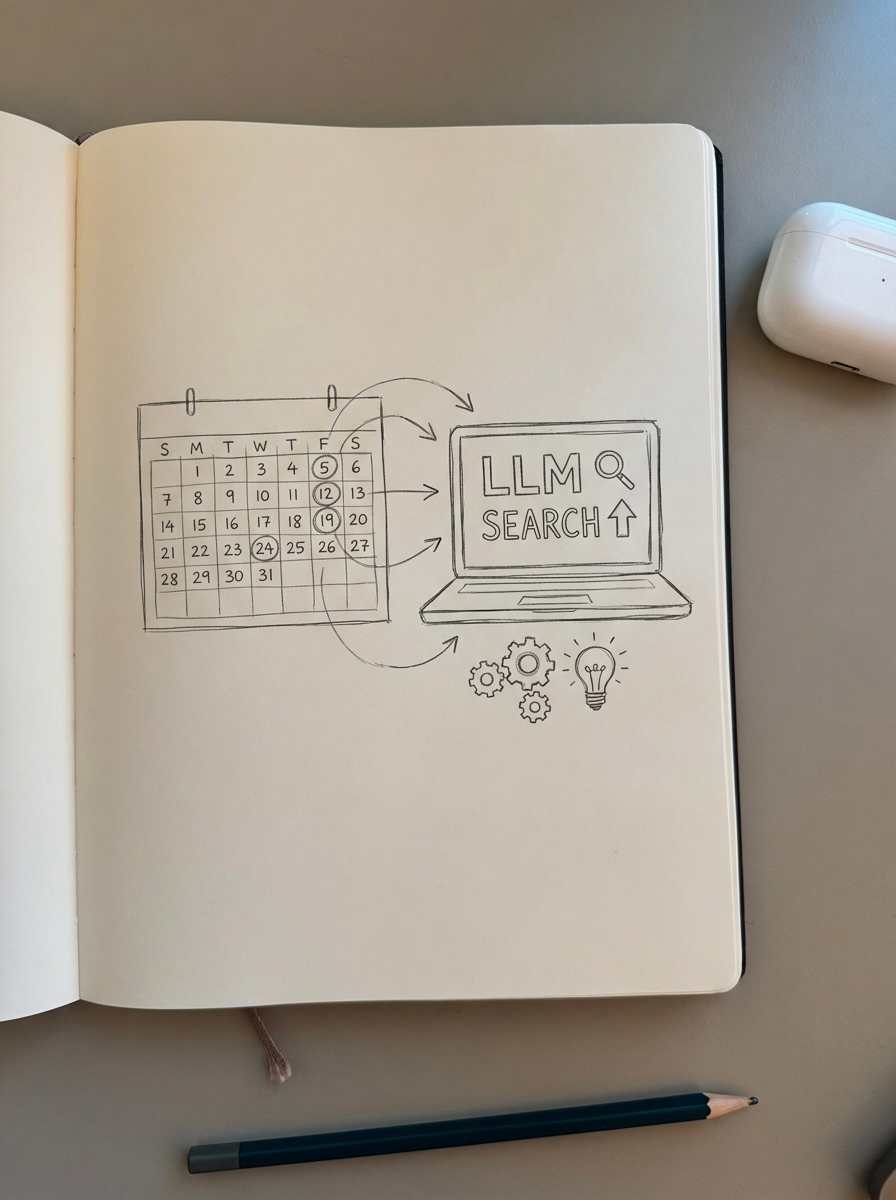

Why LLMs care about your publishing cadence

Traditional search engines index pages, but LLM search retrieves context. When a user asks Perplexity for the “best ergonomic office chairs for back pain,” the model does not just look for high-ranking keywords. It looks for the most relevant, recent, and authoritative data points it can find to build a response. Freshness is a core pillar of accuracy in this new landscape. If your competitor updated their buying guide last week and you haven’t touched yours in six months, the LLM is more likely to favor their data so that it does not repeat outdated specs or prices.

We have found that content freshness is a critical ranking factor because RAG systems prioritize real-time or near-real-time sources to provide value to the user. This creates a feedback loop where models prefer sites that signal active maintenance. If your site structure feels abandoned, the model’s confidence in your data drops, leading to fewer citations and less visibility in AI-generated answers.

The optimal publishing frequency for LLMO

I often hear the objection that producing more content dilutes quality. While this is a valid concern for human-centric thought leadership, LLMs benefit from a high volume of specific, intent-driven content that covers every nook and cranny of a topic. This builds your “knowledge graph” in the eyes of the model. To compete, you must balance the creation of new assets with the surgical updating of existing ones.

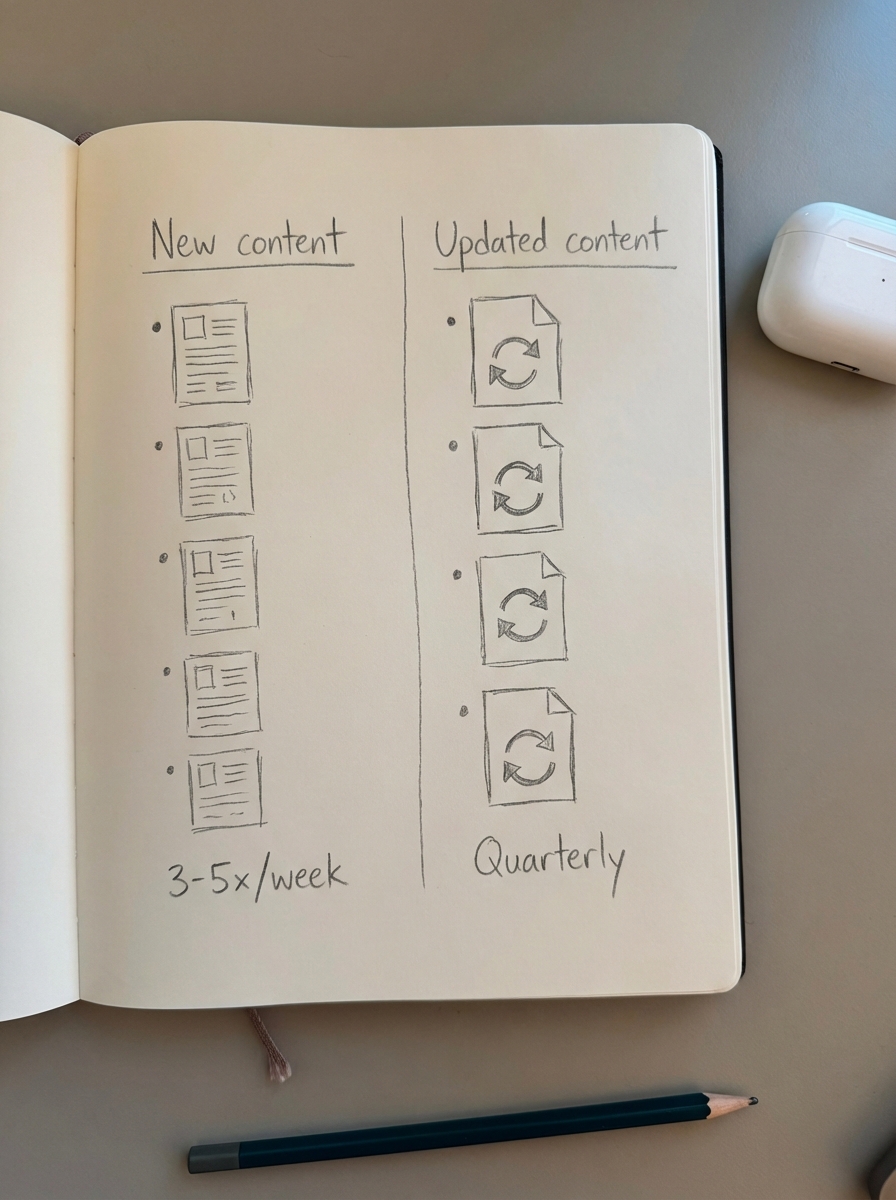

New content: The topical authority sprint

For a new WooCommerce store or a blog in its early stages, you need to publish frequently – think three to five times per week. The goal here is not just to rank on Google; it is to provide enough semantic data for LLMs to understand what your brand is an expert in. We recommend focusing on WooCommerce topic clusters rather than disparate keywords. By saturating a specific niche, such as “Organic Cotton Bedding,” you make it easier for an LLM to cite you as the definitive source for that category.

Updated content: The RAG accuracy loop

For established stores, updating existing content is often more valuable than writing new posts. For LLMO, you should audit and refresh your top-performing category pages and blog posts at least once a quarter, so that what retrieval systems index stays current and relevant. When you update, focus on three things: keeping prices and SKU availability factually accurate, converting standard text into conversational Q&A formats that mirror how people talk to chatbots, and validating your schema markup so the model can parse your data reliably.
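As a rough illustration of the quarterly audit, here is a minimal Python sketch that flags pages whose last update is older than 90 days. The `pages` list, its field names, and the `find_stale_pages` helper are hypothetical; in practice the dates would come from your CMS or sitemap.

```python
from datetime import date, timedelta


def find_stale_pages(pages, today, max_age_days=90):
    """Return URLs last updated more than max_age_days ago, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(p["last_updated"], p["url"]) for p in pages if p["last_updated"] < cutoff]
    # Sorting the (date, url) pairs puts the most neglected pages at the top.
    return [url for _, url in sorted(stale)]


# Hypothetical sample data standing in for a CMS or sitemap export.
pages = [
    {"url": "/category/organic-cotton-bedding", "last_updated": date(2024, 1, 10)},
    {"url": "/blog/how-to-wash-linen", "last_updated": date(2024, 5, 2)},
    {"url": "/category/office-chairs", "last_updated": date(2023, 11, 20)},
]

stale_urls = find_stale_pages(pages, today=date(2024, 6, 1))
for url in stale_urls:
    print(url)
```

The 90-day threshold simply mirrors the "once a quarter" guideline above; adjust it to whatever refresh window suits your catalog.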

How LLMs see and surface your brand

Models do not see your website the way a browser does. They process it through vector embeddings, which are numerical representations of your content’s meaning. If you stop publishing or updating, your embeddings stay frozen around old content, while more active brands publish material that sits closer to the user’s query in the model’s mathematical understanding. I have seen sites lose their AI citations almost overnight simply because a competitor published a more comprehensive, structured guide on the same topic.
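To make "closer to the user's query" concrete, here is a toy Python sketch using cosine similarity, a common way to compare embeddings. The three-dimensional vectors and the document names are made up for illustration; real embeddings have hundreds or thousands of dimensions and come from an embedding model, and I am not claiming any particular AI search product ranks sources exactly this way.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: a user query and two competing guides.
query = [0.9, 0.1, 0.3]
docs = {
    "our_guide": [0.5, 0.6, 0.1],
    "competitor_guide": [0.8, 0.2, 0.4],
}

# Rank documents by similarity to the query, most similar first.
ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
for name in ranked:
    print(name, round(cosine_similarity(query, docs[name]), 3))
```

In this toy setup the competitor's guide scores higher because its vector points in nearly the same direction as the query. The embedding captures what the content says, so a more comprehensive guide wins on coverage of the query, not on its publication date alone.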

In ecommerce, this is why I believe it is far more important to optimize category pages than product pages. A category page serves as a hub for the LLM to understand a whole range of products and entities, whereas a product page is often too narrow for broad conversational queries. By maintaining a high update frequency on these hub pages, you reinforce your authority across an entire product line.

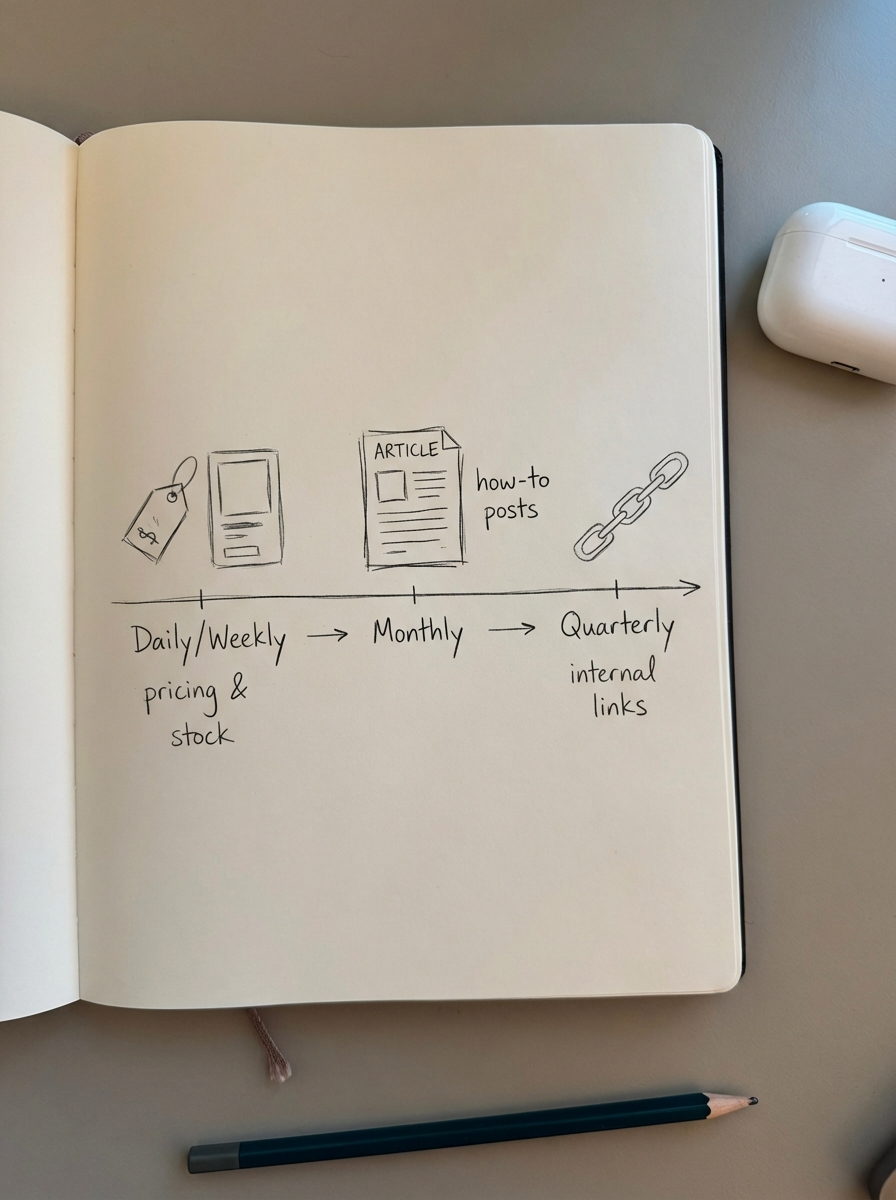

Strategic cadence for WooCommerce merchants

If you are managing a large catalog, manual updates are impractical. This is where automation becomes a necessity. A healthy cadence involves daily or weekly automated updates to product availability and pricing via your ecommerce SEO dashboard. This ensures that if a model retrieves your data, it is not suggesting an out-of-stock item or an incorrect price.
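One way to picture the automated price and availability sync is regenerating schema.org `Product` markup from catalog data on each run. The sketch below is illustrative only: the `product` dictionary and its field names are hypothetical stand-ins for a catalog record, not the WooCommerce API, while the `Product`, `Offer`, `price`, `priceCurrency`, and `availability` properties are standard schema.org vocabulary.

```python
import json


def product_jsonld(product):
    """Build schema.org Product markup from a (hypothetical) catalog record."""
    in_stock = product["stock_quantity"] > 0
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "offers": {
            "@type": "Offer",
            "price": f'{product["price"]:.2f}',  # two decimals, as a string
            "priceCurrency": product["currency"],
            "availability": availability,
        },
    }


# Hypothetical catalog record; field names are assumptions for this sketch.
product = {
    "name": "Organic Cotton Duvet Cover",
    "sku": "OCB-DUVET-Q",
    "price": 129.0,
    "currency": "USD",
    "stock_quantity": 0,
}

markup = product_jsonld(product)
print(json.dumps(markup, indent=2))
```

Because the markup is derived from the catalog record every time, a sold-out item flips to `OutOfStock` on the next run instead of waiting for someone to edit the page by hand.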

On a monthly basis, you should aim to add four to eight new how-to or comparison articles. We suggest using AI-driven content planning to identify the specific questions your customers are asking ChatGPT or Perplexity. Finally, on a quarterly basis, you must refresh your internal linking structure. LLMs use these links to understand the relationship between different entities on your site, and a stagnant link profile can signal content decay.

At ContentGecko, we automate this entire lifecycle. Our platform does not just write a blog post and leave it to rot; it syncs with your WooCommerce catalog to ensure every piece of content remains citation-worthy for the latest AI models. This prevents the “hallucination” risks associated with outdated product data.

Evidence of the impact

The shift from traditional search to generative discovery is measurable and accelerating. Research indicates that 58% of US consumers now rely on AI for product recommendations, and traditional search use is projected to decline by 25% by 2026. These are not just theoretical projections; they represent a fundamental change in how revenue is generated online.

I recently monitored a client who moved from a once-a-month manual publishing schedule to a twice-a-week automated cadence. Within 90 days, their brand mentions in Perplexity and ChatGPT search rose by 40%. The models started noticing them because the density of their topical coverage reached a threshold that the algorithms could no longer ignore. Consistency, more than sheer volume, is the signal that these models use to determine which brands are worth recommending to their users.

TL;DR

To stay visible in LLM search, you must shift from a static SEO mindset to a dynamic retrieval mindset. Publish new, cluster-based content three to five times weekly for growth, and refresh existing high-value pages quarterly to maintain factual accuracy for RAG systems. Use automation to keep your catalog data and internal links in sync, ensuring that when an AI model looks for a recommendation, your brand is the most current and authoritative option available.