LLM keyword research and content optimization

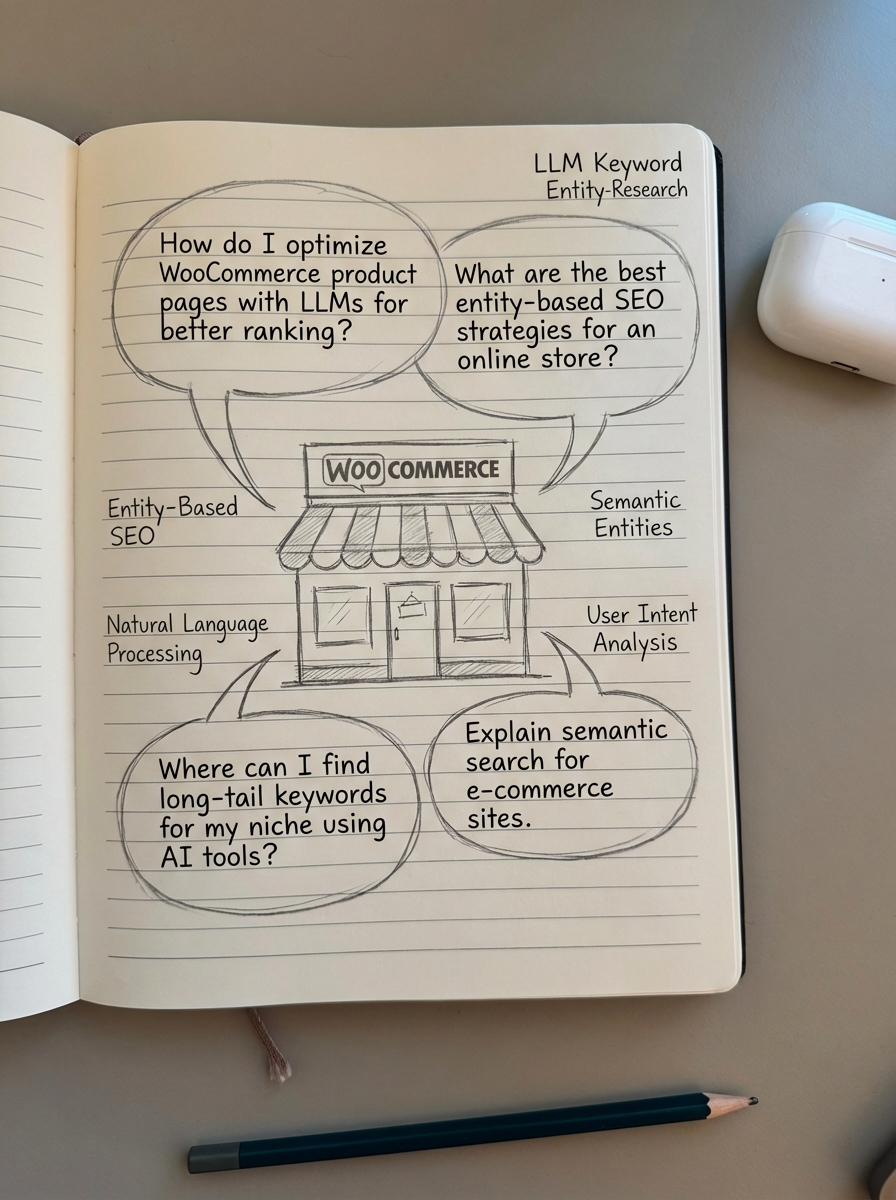

The era of targeting isolated keywords is over; survival in the modern search landscape requires a pivot to conversational intent and entity-based relevance. For WooCommerce store owners and content leads, this shift represents a move away from static metrics and toward a strategy where systems built on Large Language Models (LLMs), such as GPT-4, Gemini, and Perplexity, reward topical depth over keyword density.

The limits of traditional keyword data in an AI world

I have spent years analyzing third-party keyword databases, and the reality is that most of that data is increasingly detached from current search behavior. We believe third-party keyword data is nearly useless for high-level strategy because the databases are too small to represent the nuances of natural language, and competition metrics are frequently off by a wide margin. Since the launch of ChatGPT, search queries have grown significantly longer, with 7-8 word queries nearly doubling in frequency and siphoning volume away from shorter, generic terms.
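
One way to check the query-length trend against your own data, rather than a third-party database, is to measure how much of your query list falls into the long-tail bucket. The sketch below is illustrative only: `sample_queries` is made-up data, and the function names and the 7-word threshold are assumptions chosen to mirror the 7-8 word range mentioned above.

```python
from collections import Counter

def word_count_share(queries):
    """Return {word_count: fraction of queries} for non-empty queries."""
    counts = Counter(len(q.split()) for q in queries if q.strip())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {n: counts[n] / total for n in sorted(counts)}

def long_tail_share(queries, min_words=7):
    """Fraction of queries with at least `min_words` words."""
    shares = word_count_share(queries)
    return sum(frac for n, frac in shares.items() if n >= min_words)

# Hypothetical sample; in practice this would be an export of the
# queries your own site already receives.
sample_queries = [
    "leather boots",
    "how do i clean salt stains off leather boots",
    "best waterproof hiking boots for wide feet women",
    "boot polish",
]

print(word_count_share(sample_queries))  # {2: 0.5, 8: 0.25, 9: 0.25}
print(long_tail_share(sample_queries))   # 0.5
```

Running this on two date ranges of the same export would show whether longer queries are actually gaining share for your store.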

Traditional tools fail to capture these long-tail, conversational queries because they rely on lagging indicators. In the fast-moving world of ecommerce, waiting for a legacy tool to detect volume for a new product trend means you have already missed the window of opportunity. Instead of chasing a single high-volume term based on inaccurate data, you should focus on entity-based keyword research strategies that establish your brand as a topical authority. AI has disrupted SEO, and while it reduces clicks to some domains, the ability to optimize cheaply and effectively currently outweighs the negatives for those who adapt quickly.

Using LLMs for intent-based keyword clustering

The most immediate win for any SEO lead is using LLMs to automate keyword clustering, transforming a manual process that once took dozens of hours into a task that takes minutes. I remember when our team would spend an entire weekend manually grouping keywords for a 5,000-SKU catalog. Today, LLM-powered workflows can process 1,000 keywords in about 3 minutes, allowing us to focus on strategy rather than spreadsheets.

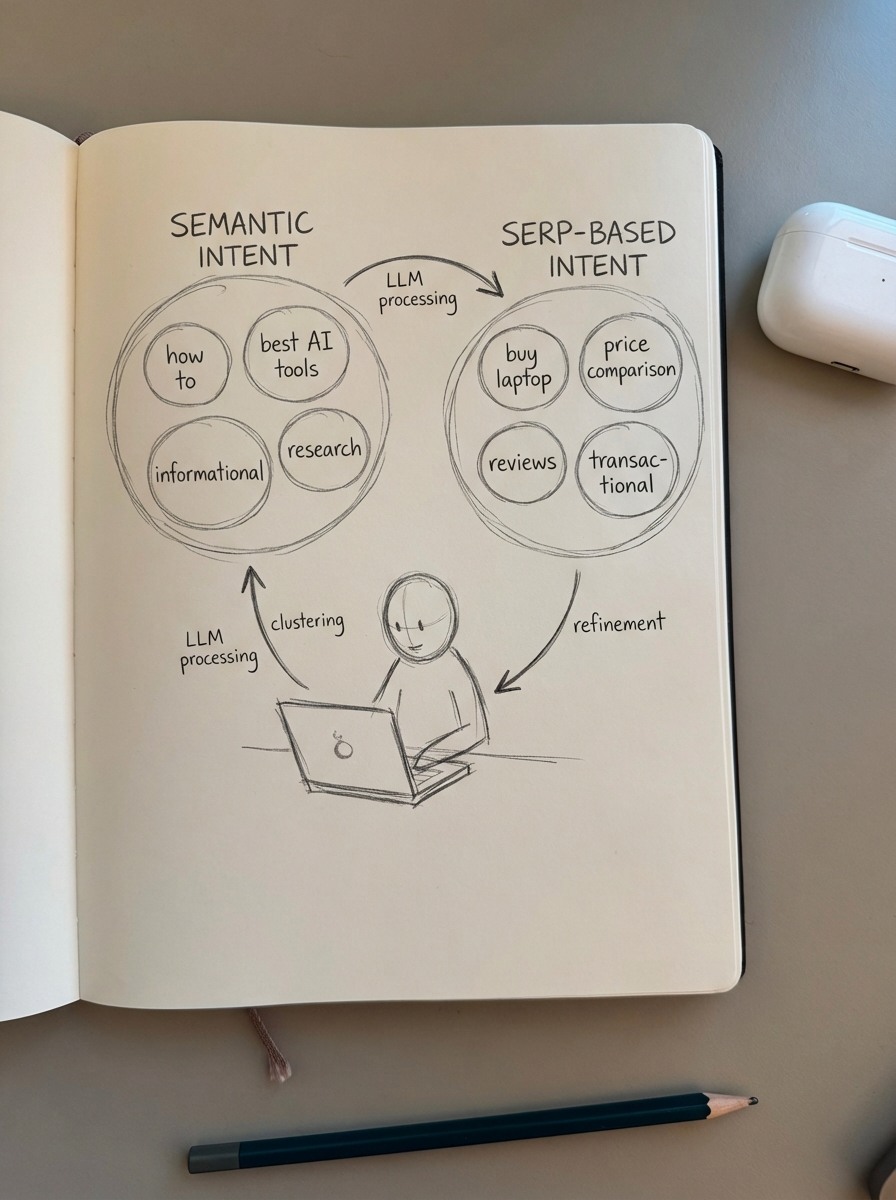

There are two primary ways to approach this:

- Semantic clustering groups keywords based on their underlying meaning using natural language processing. While this is fast and cost-effective, it can sometimes ignore how search engines actually interpret the results.

- SERP-based clustering serves as the gold standard for SEO by grouping keywords based on the actual overlap in search engine results pages.

I recommend a hybrid approach to maximize relevance. Use LLMs to identify the core entities – specific products, concepts, or brands – and then use a free SERP-keyword clustering tool to see how Google is actually grouping those intents. This ensures you are not creating redundant pages for different keywords that serve the same search intent, which prevents keyword cannibalization and preserves your crawl budget.
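
The SERP-based half of that hybrid can be sketched in a few lines. This is a minimal greedy version, not the algorithm any particular clustering tool uses: it assumes you already have the top result URLs per keyword from some SERP source, and the `serps` data, the function name, and the `min_shared=3` threshold are all hypothetical.

```python
def cluster_by_serp_overlap(serps, min_shared=3):
    """Greedy SERP-overlap clustering.

    `serps` maps keyword -> list of ranking URLs. A keyword joins the
    first cluster whose seed keyword shares at least `min_shared` URLs
    with it; otherwise it starts a new cluster.
    """
    clusters = []  # list of (seed_url_set, [member keywords])
    for keyword, urls in serps.items():
        url_set = set(urls)
        for seed_urls, members in clusters:
            if len(seed_urls & url_set) >= min_shared:
                members.append(keyword)
                break
        else:
            # No existing cluster overlapped enough: this keyword
            # becomes the seed of a new cluster.
            clusters.append((url_set, [keyword]))
    return [members for _, members in clusters]

# Made-up SERP data for illustration.
serps = {
    "clean salt stains leather boots": ["a.example/1", "b.example/2", "c.example/3", "d.example/4"],
    "remove salt marks from leather boots": ["a.example/1", "b.example/2", "c.example/3", "e.example/5"],
    "best winter boots": ["f.example/6", "g.example/7", "a.example/1", "h.example/8"],
}

clusters = cluster_by_serp_overlap(serps, min_shared=3)
print(clusters)
# [['clean salt stains leather boots', 'remove salt marks from leather boots'],
#  ['best winter boots']]
```

The first two keywords share three URLs, so they belong on one page; the third shares only one and gets its own. A higher threshold produces more, narrower clusters.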

Optimizing content for LLM retrieval and citations

In the modern LLM-driven search landscape, the goal has shifted from simply ranking first to becoming the primary cited source in an AI overview. Google AI Overviews now appear on nearly one in three searches, and AI-driven retail traffic surged 1,300% during the 2024 holiday season. To get your WooCommerce store cited by these models, your content must be structured for high extractability.

I use a specific workflow to ensure content is “AI-ready” without sacrificing the user experience. We lead with the bottom line, placing the most critical information in the first paragraph to accommodate how LLMs “chunk” and process data. We also replace generic headings with question-based H2s, since conversational queries achieve 40% higher CTR compared to traditional keyword targeting. Instead of using a heading like “Boot Maintenance,” we use “How do I clean salt stains off leather boots?” to match the natural language patterns people use with AI assistants.

Technical foundations remain equally important. Implementing comprehensive schema markup is non-negotiable, yet only about 6% of page-one results currently use it correctly. This provides a massive opportunity for early adopters to help LLMs understand the relationship between their data points. Furthermore, ChatGPT and Perplexity frequently cite comparison tables because they provide high-density, factual information that is easy for the model to synthesize.
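
As a rough illustration of what product schema markup looks like in practice, the sketch below builds a schema.org `Product` object with a nested `Offer` and wraps it in a JSON-LD script tag. The input dictionary and its field names (`name`, `sku`, `price`, `currency`, `in_stock`) are hypothetical and do not correspond to any specific WooCommerce API; only the schema.org types and properties are standard.

```python
import json

def product_schema(product):
    """Build a schema.org Product dict from a simple product record."""
    availability = "InStock" if product["in_stock"] else "OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "offers": {
            "@type": "Offer",
            "price": f'{product["price"]:.2f}',
            "priceCurrency": product["currency"],
            "availability": f"https://schema.org/{availability}",
        },
    }

def jsonld_script_tag(data):
    """Serialize a dict as a JSON-LD <script> tag for the page head."""
    payload = json.dumps(data, indent=2)
    # Escape "</" so a product name can never close the script tag early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'

# Hypothetical product record for illustration.
boot = {
    "name": "Leather Winter Boot",
    "sku": "BOOT-001",
    "price": 89,
    "currency": "USD",
    "in_stock": False,
}

print(jsonld_script_tag(product_schema(boot)))
```

Generating the markup from the same record that drives the product page keeps price and availability in the schema consistent with what shoppers see.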

How ContentGecko bridges the gap for WooCommerce

For many merchants, the challenge is not understanding the strategy, but finding the time to execute it across thousands of products. This is where large language model optimization (LLMO) becomes a scale problem that humans cannot solve manually. If you think an article would benefit your users, you should publish it regardless of what legacy keyword research says, but doing this at scale requires automation.

We built ContentGecko to automate this entire cycle by syncing directly with your WooCommerce catalog. This ensures that every buyer’s guide or how-to post remains product-aware; when prices change or items go out of stock, the content updates automatically. This level of data freshness is a critical signal for Retrieval-Augmented Generation (RAG) systems, which LLMs use to provide real-time answers. If you are looking to scale your content without a massive team, our free AI SEO content writer can help you build authoritative briefs based on live SERP research and current facts.

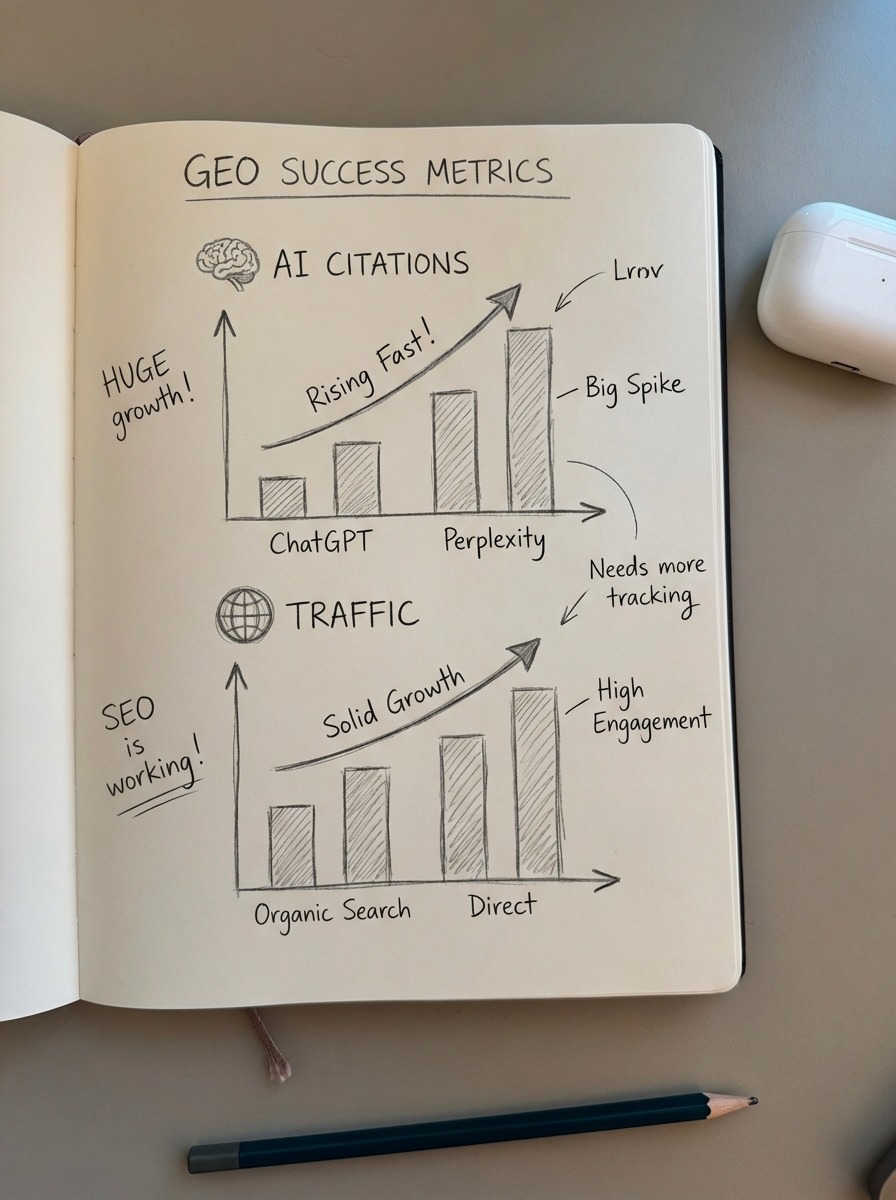

Measuring success in the era of GEO

Traditional metrics like keyword position are becoming less reliable as search results become increasingly personalized and mediated by AI. In the world of Generative Engine Optimization (GEO), you must track new performance indicators to understand your true visibility.

- AI Citation Frequency: Monitor how often your brand or specific product pages are mentioned in ChatGPT or Perplexity responses.

- Referral Traffic from AI Engines: Track traffic from sources like openai.com or perplexity.ai in your analytics dashboard.

- Dwell Time: LLM searchers often spend more time on a page – averaging six minutes versus two minutes in traditional search – as they look for deep confirmation of an AI’s initial summary.
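
If your analytics tool lets you export raw referrer URLs, tagging AI referral traffic can be as simple as matching hostnames. The sketch below is one possible approach under that assumption: the domain list starts from the two sources named above, `chatgpt.com` is an addition of ours, and the function names and sample referrers are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse

# Domain -> label. openai.com and perplexity.ai come from the list above;
# chatgpt.com is added here as an assumption. Extend as needed.
AI_REFERRER_DOMAINS = {
    "openai.com": "ChatGPT",
    "chatgpt.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
}

def ai_source(referrer):
    """Return the AI engine label for a referrer URL, or None.

    Expects a full URL with a scheme; bare hostnames yield None.
    """
    host = (urlparse(referrer).hostname or "").lower()
    for domain, label in AI_REFERRER_DOMAINS.items():
        # Match the domain itself or any subdomain of it.
        if host == domain or host.endswith("." + domain):
            return label
    return None

def count_ai_referrals(referrers):
    """Count visits per AI engine, ignoring non-AI referrers."""
    labels = (ai_source(r) for r in referrers)
    return Counter(label for label in labels if label)

# Made-up referrer log for illustration.
referrers = [
    "https://chat.openai.com/",
    "https://www.perplexity.ai/search?q=leather+boots",
    "https://www.google.com/",
    "https://chatgpt.com/",
]

print(count_ai_referrals(referrers))
```

Matching on the hostname suffix rather than a substring avoids counting unrelated domains that merely contain one of these names.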

The future of search is conversational. The companies that win will be those that stop trying to game the algorithm and start providing the most authoritative, well-structured, and catalog-synced answers to their customers’ questions.

TL;DR

- Focus on long-tail conversational queries (7-8 words), which have nearly doubled in frequency since the launch of ChatGPT.

- Pair LLM-based entity extraction with SERP-based clustering to group keywords by intent, which saves dozens of hours of manual work and prevents keyword cannibalization.

- Optimize for extractability by using question-based headings and comparison tables to increase the likelihood of being cited by AI models.

- Leverage catalog integration to keep your content synced with your WooCommerce store, satisfying the freshness requirements of RAG systems.

- Transition from tracking raw rankings to monitoring AI citations and referral traffic from generative engines.