WooCommerce A/B testing for SEO and conversions

A/B testing on WooCommerce reveals which product page elements, checkout flows, and SEO tweaks actually drive conversions. Most merchants guess at optimization – running systematic tests removes the guesswork and compounds incremental gains into measurable revenue.

The challenge isn’t just testing button colors. You’re testing structured data variations, meta description CTR impact, and product page SEO elements that affect both search visibility and on-page conversions simultaneously.

Why A/B testing matters for WooCommerce stores

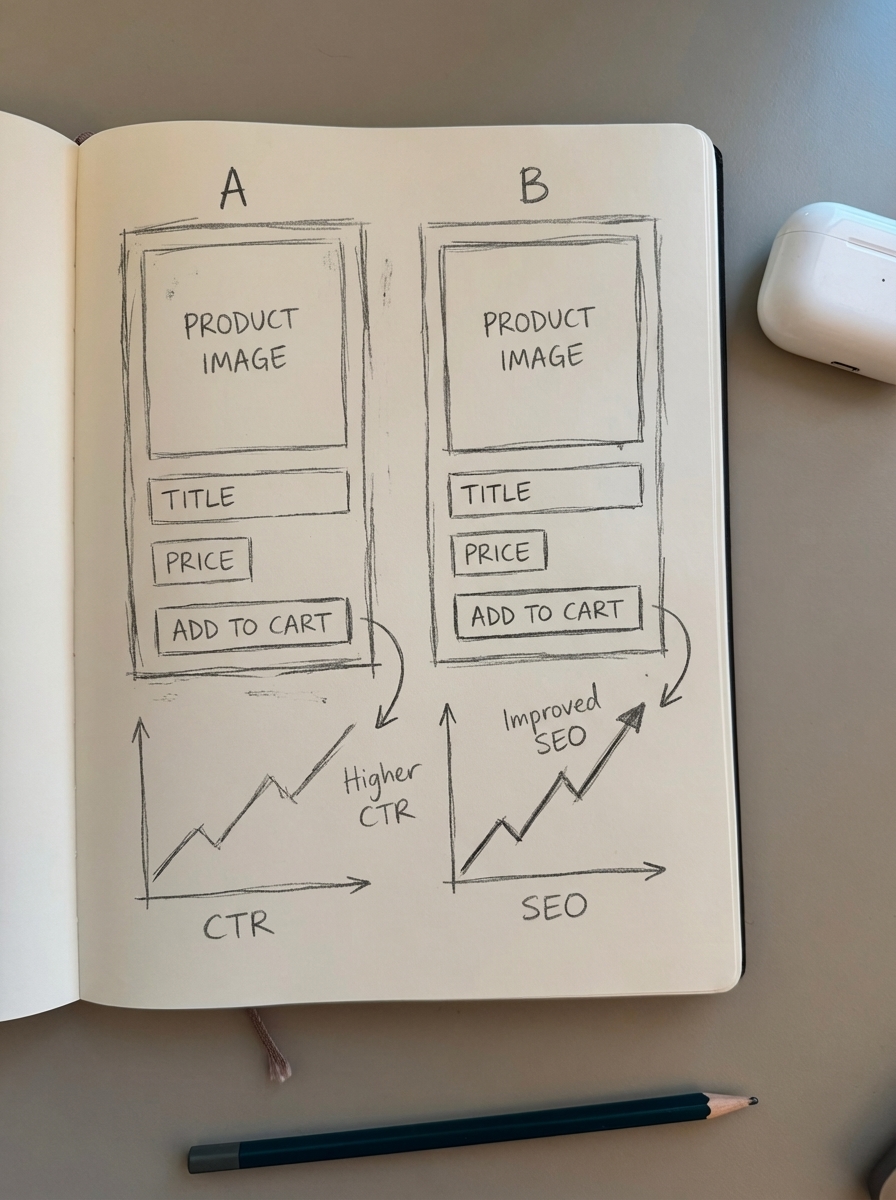

Standard conversion optimization focuses on user experience. SEO A/B testing adds a layer: testing changes that affect search engine visibility while measuring conversion impact.

Product detail page testing accounts for 38% of all e-commerce A/B tests. Sticky add-to-cart buttons appear in 73% of mobile optimization projects, and product information hierarchy modifications happen in 61% of PDP tests.

The ROI is concrete. Zalora increased checkout rate by 12.3% through uniform CTA button optimization. ShopClues boosted visits-to-order by 26% with homepage navigation changes. Intertop achieved a 54.68% increase by optimizing checkout form fields and autofill options.

For SEO specifically, title and description testing improves CTR by 5.7% on average when optimized for position-specific expectations. Every 100ms improvement in site speed correlates with a 1.3% increase in conversion rate for US ecommerce sites.

I’ve seen merchants dismiss A/B testing as “too technical” or “too time-consuming.” Then they redesign their entire product catalog based on a hunch and wonder why conversions dropped. Testing isn’t optional if you want predictable growth – it’s the difference between informed decisions and expensive guesses.

What to A/B test in WooCommerce

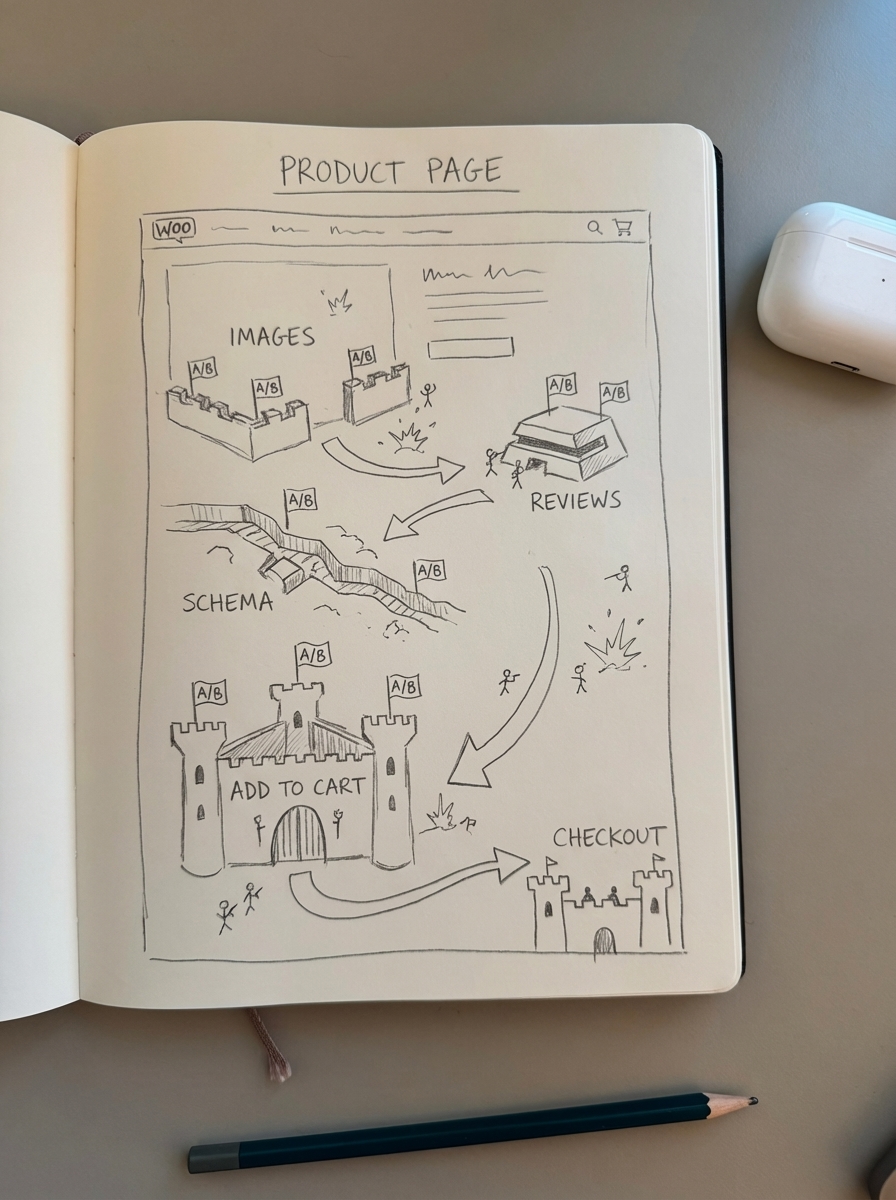

Product page elements

Product pages are your conversion battleground. Test systematically, not randomly.

Images and media: AdonisClothing achieved a 33% increase testing product images – models versus plain backgrounds, lifestyle versus studio shots. For WooCommerce, this means testing gallery layouts, zoom functionality, and video placement. Optimized image SEO with proper alt text and filenames affects both search visibility and conversion.

Product information hierarchy: Test whether technical specs appear above or below the fold, whether reviews show first or product details, and how you present variant options. SmartWool saw a 17.1% revenue increase from page layout testing comparing uniform versus mixed designs.

The biggest mistake? Testing everything at once. You’ll never know which change drove the result. Test one element per experiment, or use multivariate testing only if you have massive traffic.

Schema and structured data: This is where SEO and conversion testing intersect. Test product schema variations – does including aggregate ratings in schema improve CTR from search? Does adding offers schema with shipping details reduce bounce rate? Missing or invalid schema reduces rich result eligibility by 32%.
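To make the aggregate rating test concrete, here is a minimal sketch that builds two Product JSON-LD variants, one with `aggregateRating` and one without. The function name, product, and values are hypothetical; the property names come from schema.org. In a real store your SEO plugin or theme would emit this markup, so treat it as an illustration of what differs between variants, not as a WooCommerce implementation.

```python
import json


def product_schema(name, price, currency, rating=None, review_count=None):
    """Build Product JSON-LD; add aggregateRating only when both values are given."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": "https://schema.org/InStock",
        },
    }
    if rating is not None and review_count is not None:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        }
    return data


# Hypothetical product: control omits ratings, the variant includes them.
control = product_schema("Merino Hiking Sock", 24.0, "USD")
variant = product_schema("Merino Hiking Sock", 24.0, "USD", rating=4.6, review_count=128)

print(json.dumps(variant, indent=2))
```

Keeping everything else identical between the two outputs means any CTR difference can be attributed to the rating markup alone.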

Reviews and social proof: Reviews increase conversion by 18% on average across product page experiments. Test review placement, star rating display, review filtering options, and review schema implementation. One pattern I’ve noticed: stores that place reviews above product descriptions see higher engagement but slightly lower add-to-cart rates. Stores that place them below see the opposite. Test for your specific audience.

Checkout optimization

Intertop’s 54.68% increase came from checkout process optimization. For WooCommerce stores, focus on form field reduction (test removing optional fields), autofill implementation and accuracy, guest checkout versus forced account creation, payment method display and ordering, trust badges and security indicators placement, and progress indicators and step labeling.

One critical detail: over 60% of ecommerce shoppers use mobile devices. Test mobile-specific checkout flows separately from desktop. What works on a 27-inch monitor often breaks on a 6-inch screen.

SEO-specific tests

These tests affect search visibility first, conversions second.

Meta titles and descriptions: Test different value propositions, keyword placement, and character lengths. Pages ranking #3 with less than 2% CTR signal immediate optimization needs – the top organic result achieves 39.8% CTR in 2025, with CTR declining exponentially for lower positions.

URL structure: While you shouldn’t frequently change URLs, when you do restructure, test the impact. Does including category in product URLs improve click-through from search? WooCommerce URL structure affects both SEO and user understanding of site hierarchy.

Breadcrumb implementation: Test breadcrumb placement and schema. Stores with proper breadcrumbs often see 5-15% improvement in organic click-through rates. I tested breadcrumb visibility on a client’s store – above the H1 versus below – and the above-H1 placement improved CTR from category pages by 8% while hurting conversion by 3%. Trade-offs exist; data reveals them.

Canonical tag strategies: For products appearing in multiple categories or with variant URLs, test different canonical tag approaches and measure both ranking consolidation and conversion rate by landing page type.

Tools for WooCommerce A/B testing

Native WooCommerce and WordPress plugins

Google Optimize: Google sunset Optimize and Optimize 360 on September 30, 2023, so it is no longer an option for new tests. If you relied on its free tier and Analytics integration, look at third-party testing tools that integrate with Google Analytics 4 instead.

Nelio A/B Testing for WordPress: WordPress plugin with WooCommerce support for testing product pages, checkout flows, and other store elements. Pricing starts around $29/month. Advantage: understands WooCommerce data structure natively, so you’re not fighting platform limitations.

Convert: Third-party JavaScript solution that works with any platform. Pros: advanced targeting and personalization. Cons: can impact page speed if not optimized, requires JavaScript expertise for complex tests.

Simple Page Tester: Lightweight WordPress plugin for basic split testing. Free, but limited to URL-based splits rather than element-level changes.

The plugin approach works well for stores under 5,000 products and standard tests. Self-service A/B testing platforms are suitable for 70% of standard tests.

Enterprise platforms

Optimizely: Industry standard for large-scale testing. Full-stack testing, advanced targeting, built-in stats engine. Pricing typically starts at $50k+/year. Worth the cost when you expect lifts under 5%, since small effects need large samples and careful statistics, which its Stats Engine is designed to handle.

VWO: Mid-market solution with heatmaps, session recordings, and A/B testing combined. Better value than Optimizely for stores doing $1M-$10M annually.

AB Tasty: Similar to VWO, strong personalization features. European data hosting option for GDPR compliance.

Custom implementations are required for 30% of advanced personalization needs. If you’re testing dynamic pricing, complex product recommendation algorithms, or server-side rendering optimizations, you need an enterprise platform or custom build.

Server-side versus client-side testing

Client-side (JavaScript) testing is easier to implement but creates a “flash” as the page loads then transforms. It is also unreliable for SEO tests: Googlebot does render JavaScript, but rendering can lag behind the initial crawl, so you can’t be sure which version gets indexed.

Server-side testing requires more technical setup (you’re literally serving different HTML to different users) but eliminates flash, works better for SEO tests, and improves Core Web Vitals. Core Web Vitals fixes reduce bounce rates by up to 15% according to Google’s internal studies.
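Server-side assignment usually comes down to a deterministic bucketing function. The sketch below hashes an experiment name together with a visitor ID so the same visitor always gets the same variant. The function name, IDs, and variant labels are assumptions for illustration. Note that the input never includes the user agent, so crawlers are bucketed the same way as everyone else.

```python
import hashlib


def assign_variant(visitor_id, experiment, variants=("control", "variant_b")):
    """Deterministically map a visitor to a variant via a hash of experiment + visitor ID."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Use the first 32 bits of the hash as a stable bucket number.
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]


# Hypothetical visitor and experiment names.
print(assign_variant("visitor-123", "pdp-reviews-placement"))
```

Including the experiment name in the hash keeps assignments independent across experiments, so a visitor in the control group of one test is not automatically in the control group of the next.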

For WooCommerce stores on managed hosts (WP Engine, Kinsta, Pagely), check if they offer built-in A/B testing infrastructure – some hosts provide server-side splitting at the infrastructure layer.

Running SEO-focused A/B tests

SEO tests differ from standard conversion tests in two ways: sample size requirements and measurement timeframe.

Statistical rigor for SEO tests

Standard conversion tests need 95% statistical confidence minimum for major changes and 90% for minor optimizations. SEO tests need longer duration because search engines need time to recrawl and reindex, rankings fluctuate seasonally and weekly, and CTR changes compound over weeks as position stabilizes.
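If your testing tool doesn’t report confidence, you can approximate it yourself. This is a minimal sketch using a pooled two-proportion z-test, one common way to compare conversion rates; commercial tools may use different methods. The function name and the sample numbers are hypothetical.

```python
from math import erf, sqrt


def two_proportion_confidence(conv_a, n_a, conv_b, n_b):
    """Return (relative lift of B over A, two-sided confidence) from a pooled z-test."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal CDF.
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    p_value = 2 * (1 - normal_cdf)
    return (p_b - p_a) / p_a, 1 - p_value


# Hypothetical test: 200 orders from 10,000 sessions vs 250 from 10,000.
lift, confidence = two_proportion_confidence(200, 10_000, 250, 10_000)
print(f"lift: {lift:.1%}, confidence: {confidence:.1%}")
```

Decide the sample size and the confidence threshold before the test starts. Checking this number daily and stopping the first time it crosses 95% inflates false positives.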

Typical timeline: 4-6 weeks minimum for title and description tests, 8-12 weeks for structural changes like URL modifications or breadcrumb implementation.

I once declared a title tag test winner after two weeks. Rankings shifted in week three. The “loser” ended up winning after eight weeks when seasonality normalized. Patience pays.

Tracking SEO test results

Use Google Search Console to track impressions and CTR by page, average position trends, query-level performance changes, and rich result appearance rate.
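Once you export page-level data from Search Console, comparing groups is simple arithmetic. The sketch below assumes hypothetical rows with `page`, `clicks`, and `impressions` fields and a hand-made mapping of pages to test groups. It sums clicks and impressions per group before dividing, because averaging per-page CTRs would overweight low-traffic pages.

```python
from collections import defaultdict


def ctr_by_group(rows, group_of):
    """Aggregate clicks and impressions per test group, then compute CTR per group."""
    totals = defaultdict(lambda: [0, 0])  # group -> [clicks, impressions]
    for row in rows:
        group = group_of.get(row["page"])
        if group is None:
            continue  # page is not part of the test
        totals[group][0] += row["clicks"]
        totals[group][1] += row["impressions"]
    return {g: (c / i if i else 0.0) for g, (c, i) in totals.items()}


# Hypothetical export rows and group assignment.
rows = [
    {"page": "/product/a/", "clicks": 30, "impressions": 1000},
    {"page": "/product/b/", "clicks": 50, "impressions": 1000},
    {"page": "/product/c/", "clicks": 10, "impressions": 500},
]
groups = {"/product/a/": "control", "/product/b/": "variant", "/product/c/": "control"}

result = ctr_by_group(rows, groups)
for group, ctr in sorted(result.items()):
    print(f"{group}: {ctr:.2%}")
```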

Set up custom dashboards in Analytics tracking organic landing pages separately from paid traffic. The ecommerce SEO dashboard displays all standard Google Search Console metrics but breaks them down by page type – categories, products, and blog posts separately – so you can see if your product page tests affect category page performance.

Apply the same tracking to content tests: watch how keyword performance changes after you adjust content length, formatting, or multimedia.

Common pitfalls in SEO A/B testing

Cloaking violations: Serving different content to Googlebot than to users violates Google’s guidelines. Your A/B test must serve variants to both search engines and users randomly – don’t detect Googlebot and serve a specific version.

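Canonical confusion: If you’re testing URL variants, add a rel="canonical" tag on each variant pointing to the original URL so search engines treat the variants as temporary alternates, not duplicate content. If the test redirects visitors, use a temporary (302) redirect, not a permanent (301) one.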

Insufficient traffic: Small stores with fewer than 1,000 organic sessions monthly won’t get reliable results in reasonable timeframes. Focus on the highest-traffic category or product pages first, or test multiple similar pages together.

Premature conclusions: I’ve seen merchants declare winners after one week because Version B got more sales. Rankings shifted back in week two, and Version A ultimately won after six weeks. Be patient or waste budget.

Best practices for WooCommerce A/B testing

Start with high-impact, low-risk tests

Test elements that won’t break checkout or create duplicate content issues: meta descriptions and title variations, button colors and CTA text, review placement and display format, image gallery layouts, and trust badge positioning.

Avoid testing fundamental changes – removing product descriptions, hiding pricing, restructuring checkout – until you have baseline data from safer tests.

Segment by device and traffic source

Mobile shoppers behave differently than desktop users. Organic traffic converts differently than PPC traffic. Test and analyze separately.

Clear Within saw an 80% improvement from above-the-fold element placement optimization specifically for mobile users – that same change hurt desktop conversion. Aggregated data would have shown a modest win and hidden the real story.

Document and share learnings

Create a shared spreadsheet tracking test hypothesis, variant details, start and end dates, statistical confidence achieved, winner and lift percentage, and implementation notes.

We found that our best-converting product image style (lifestyle shots with context) performed 18% better than studio shots across three separate tests spanning eight months. Document patterns or you’ll retest the same hypotheses forever.

Integrate with your content strategy

If you’re using automated content for WooCommerce SEO, coordinate your A/B tests with content updates. Test new schema implementations when publishing related blog content. Test product page layouts when launching new product lines.

Marketing teams implementing optimized approaches report 73% reduction in content workflow manual labor. Automation reduces the manual overhead of implementing test winners across your catalog.

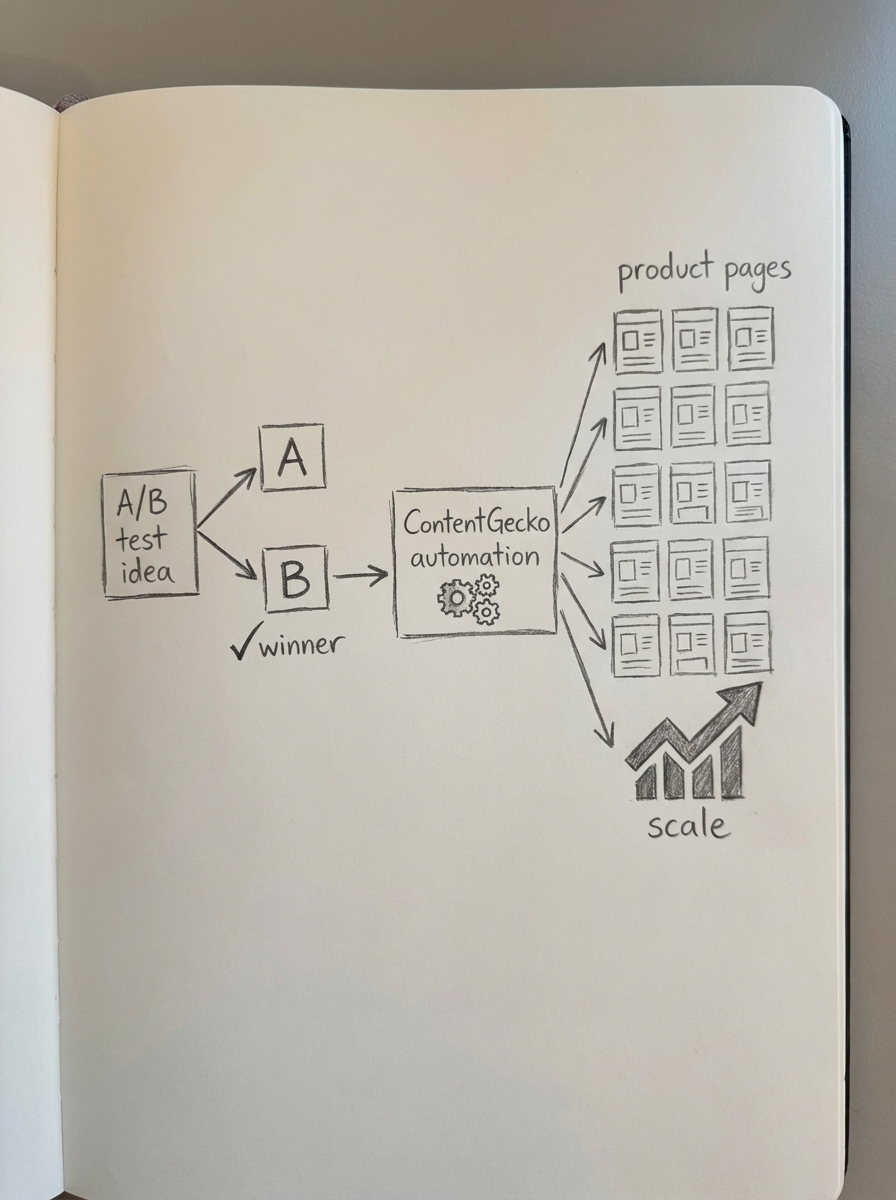

When to use ContentGecko for A/B testing workflows

ContentGecko doesn’t run A/B tests directly, but it automates the implementation of test winners at scale.

Automated schema testing implementation

You test whether adding FAQ schema to product pages improves CTR and featured snippet appearance. Winner confirmed after 6 weeks. Now you need to implement FAQ schema across 3,000 products manually – or let automation handle it.

ContentGecko’s catalog-synced content approach automatically generates and maintains proper structured data across your entire product catalog. When you identify winning schema patterns through testing, ContentGecko scales the implementation automatically.

Testing content format variations

Test different product description formats: bullet points versus paragraphs, technical specs first versus benefits first, short descriptions versus long-form content.

Once you identify winners, automated content generation can apply the winning format across your catalog while maintaining unique, non-duplicate descriptions for each product. Companies saw a 45% increase in organic traffic within six months while reducing time spent on content activities by 87%.

Category page optimization at scale

You test category page title formats and meta descriptions to improve CTR. Version B wins: “Buy [Brand] [Product Type] | [Key Benefit] | [Store Name]” beats generic titles with a 12% CTR improvement.

The category optimizer can analyze and suggest optimized category titles using the winning pattern you identified, then implement across your entire category structure without manual labor.

Ongoing test winner maintenance

The hardest part of A/B testing isn’t running tests – it’s maintaining test winners as your catalog changes. Product prices update, inventory changes, new products launch, old products discontinue.

ContentGecko monitors and updates when SKUs, prices, stock, or URLs change. Test winners stay implemented without manual maintenance. One client achieved 224% traffic growth in four months through optimization that included automated maintenance of winning patterns.

Measuring A/B test ROI

Track both immediate conversion impact and longer-term SEO effects.

Immediate metrics: Conversion rate by variant, revenue per visitor, add-to-cart rate, checkout abandonment rate, and bounce rate by landing page.

SEO metrics (4-12 week lag): Organic CTR from search, average position for target keywords, rich result appearance rate, impressions growth for product pages, and organic revenue attributed to tested pages.

Use the ecommerce SEO dashboard to track Potential Additional Clicks – the estimated additional clicks you’d get if your domain ranked in first position for all keywords you currently get impressions with. As you implement test winners that improve CTR and rankings, watch this metric to quantify untapped opportunity.

A realistic expectation: well-executed A/B testing programs deliver 10-20% annual conversion rate improvement and 15-30% organic traffic growth over 12 months. Individual tests might show 3-8% lift, but compounding multiple winners creates substantial impact.
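The compounding arithmetic is easy to check. This sketch assumes each winning test’s lift is independent and multiplies with the others, which is a simplification since real lifts can overlap. The three lift values are hypothetical examples from the 3-8% range.

```python
def compounded_lift(lifts):
    """Combine independent relative lifts multiplicatively and return the total lift."""
    total = 1.0
    for lift in lifts:
        total *= 1 + lift
    return total - 1


# Three hypothetical winners in one year: 3%, 5%, and 4%.
print(f"{compounded_lift([0.03, 0.05, 0.04]):.1%}")  # prints 12.5%
```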

Tools and resources

Free tools: ContentGecko keyword clustering for identifying cannibalization issues to test, free category optimizer for category page testing ideas, Google Search Console for CTR and impression tracking, and Google Analytics for conversion tracking.

Paid solutions for different store sizes:

Starter (up to 1,000 products): Nelio A/B Testing plus Rank Math for schema. Budget: around $50/month.

Professional (1,000-10,000 products): VWO or Convert plus ContentGecko Professional for automated implementation. Budget: $200-500/month.

Enterprise (10,000+ products): Optimizely plus ContentGecko Enterprise with dedicated Slack support for coordinating test rollouts across massive catalogs. Budget: $5k+/month.

Common objections

“My store is too small for A/B testing”: You need around 1,000 sessions per variant per week minimum for reliable conversion tests. For SEO tests on high-traffic landing pages, you can test with less traffic but longer duration. SimCity experienced a 43% increase from homepage design simplification – that’s a single high-traffic page test suitable for smaller stores.
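How much traffic you actually need depends on your baseline conversion rate and the lift you want to detect. The sketch below uses the standard normal-approximation formula for comparing two proportions, with 80% power as an assumed default. The function name and the example inputs are hypothetical, and the result is a rough planning number, not a guarantee.

```python
from math import ceil
from statistics import NormalDist


def sessions_per_variant(baseline_rate, relative_lift, confidence=0.95, power=0.80):
    """Estimate sessions needed per variant to detect a relative lift (two-sided test)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical store: 3% baseline conversion, hoping to detect a 20% relative lift.
needed = sessions_per_variant(0.03, 0.20)
print(f"sessions per variant: {needed}")
```

Divide the result by your weekly sessions per variant to estimate how long the test must run. Smaller expected lifts push the requirement up sharply, which is why low-traffic stores should test bold changes on their busiest pages.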

“Testing slows down implementation”: Testing validates implementation. Rolling out untested changes across 5,000 products is slower than testing on 50 products first, finding the winner, then scaling. One merchant told me they reverted three months of product page redesign work after finally running a proper test – Version A (original) won. Testing saved them from permanent damage.

“SEO tests take too long”: Yes, 4-12 weeks feels slow compared to conversion tests. But SEO improvements compound over years. A 5% CTR improvement from better meta descriptions generates incremental traffic indefinitely. The long test duration pays off in long-term ROI.

TL;DR

WooCommerce A/B testing splits into two categories: conversion optimization (testing checkout flows, product page layouts, CTAs) and SEO optimization (testing titles, descriptions, schema, and structural elements).

Use plugins like Nelio for WooCommerce-specific tests under 5,000 products. Use enterprise platforms (VWO, Optimizely) for advanced personalization and large catalogs. Run SEO tests server-side when possible to avoid flash and ensure search engines see test variants.

Test high-traffic product pages and categories first; small stores should focus on their top 20% of landing pages. Aim for 95% statistical confidence on major changes and 90% on minor tweaks. SEO tests need at least 4-6 weeks for title and description changes and 8-12 weeks for structural changes.

ContentGecko automates the implementation of test winners across your entire catalog – catalog-synced content, automated schema, and ongoing updates when products change. Most valuable for Professional (1,000-10,000 products) and Enterprise (10,000+) stores where manual implementation of test winners becomes a bottleneck.

Start with three tests: product image layouts, meta description formats, and review placement. Measure both conversion lift and organic CTR improvement. Compound small wins – 10-20% annual conversion improvement and 15-30% organic traffic growth are realistic targets.