LLM search adoption for ecommerce SEO

LLM-based search is no longer a futuristic concept; it is already siphoning significant traffic from traditional search engines, and merchants who ignore this shift will find themselves digitally invisible by 2028.

We are currently witnessing a massive transformation in user behavior. Projections suggest that organic search traffic to websites will drop by 50% or more by 2028 as consumers embrace generative AI search. While some practitioners are panicking about “zero-click” searches, I view this as a significant opportunity for WooCommerce stores that can feed these models the right data. In my experience, the merchants who win this transition aren’t the ones trying to trick the AI, but those who structure their catalog and content to be the most reliable source of truth for the model.

The rapid adoption of LLM search in ecommerce

Adoption is not just happening on ChatGPT; it is also occurring inside search engines and marketplaces themselves, through LLM features like Google’s AI Overviews and Amazon’s Rufus shopping assistant. Industry projections suggest that LLM traffic share will grow from 4% in 2025 to a staggering 75% by 2028. Retail sites already saw a 1,300% spike in AI search referrals during the 2024 holiday season, evidence that the shift is already in motion.

A common objection I hear from marketing directors is that AI-referred traffic is lower quality because the user does not always click through. The data tells a different story. Visitors coming from LLM search are 4.4x more likely to convert than traditional organic search visitors.

This happens because the LLM performs the heavy lifting of filtering before the user ever lands on your site. If a user asks an AI for the best waterproof hiking boots for wide feet and it cites your store, the visitor landing on your page has already been qualified by the model’s reasoning. They aren’t just browsing; they are arriving with high intent.
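You can check this conversion gap on your own store by segmenting sessions by referrer. The following is a minimal sketch. It assumes you can export sessions with a referrer hostname and a converted flag. The hostname sets are assumptions, not an exhaustive list, and should be adjusted to what your analytics tool actually records.

```python
from dataclasses import dataclass

# Assumed, non-exhaustive AI assistant referrer hostnames; adjust to your analytics data.
AI_REFERRERS = {"chatgpt.com", "chat.openai.com", "perplexity.ai", "www.perplexity.ai",
                "gemini.google.com", "copilot.microsoft.com"}
# Assumed traditional organic search referrers.
SEARCH_REFERRERS = {"www.google.com", "google.com", "www.bing.com", "bing.com"}


@dataclass
class Session:
    referrer_host: str  # lowercased hostname of the referring page
    converted: bool     # True if the session ended in an order


def classify(host: str) -> str:
    """Bucket a referrer hostname into 'ai', 'organic', or 'other'."""
    if host in AI_REFERRERS:
        return "ai"
    if host in SEARCH_REFERRERS:
        return "organic"
    return "other"


def conversion_rates(sessions: list[Session]) -> dict[str, float]:
    """Conversion rate per bucket: converting sessions / total sessions."""
    totals: dict[str, int] = {}
    wins: dict[str, int] = {}
    for s in sessions:
        bucket = classify(s.referrer_host)
        totals[bucket] = totals.get(bucket, 0) + 1
        wins[bucket] = wins.get(bucket, 0) + int(s.converted)
    return {b: wins[b] / totals[b] for b in totals}


def ai_lift(sessions: list[Session]) -> float:
    """Ratio of AI-referred to organic conversion rate (4.4 would mean 4.4x).

    Raises KeyError if either bucket has no sessions.
    """
    rates = conversion_rates(sessions)
    return rates["ai"] / rates["organic"]
```

Keep sample sizes in mind. AI referral volumes are still small for many stores, so a ratio computed from a few dozen sessions will be noisy.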

Benefits and implementation tradeoffs

Adopting an LLM-first search strategy involves more than just writing conversational content. It requires a fundamental shift in how you handle data architecture and information retrieval.

The conversion advantage

During the 2025 Cyber 5, AI-referred shoppers landed on product detail pages (PDPs) at a 77% rate, compared to just 60% from traditional Google search. This suggests that LLMs are better at deep-linking users to the exact SKU they need. I focus heavily on Large Language Model Optimization (LLMO) because it can drastically shorten the path to purchase, bypassing generic category pages when a specific intent is identified.
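To measure a PDP landing rate like this for your own traffic, you can classify each session's landing URL. This is a minimal sketch. It assumes WooCommerce's default product permalink base (`/product/<slug>/`). If your store uses a custom base under Settings > Permalinks, change `PRODUCT_BASE`.

```python
from urllib.parse import urlparse

# Assumes WooCommerce's default product base; change this if you customized permalinks.
PRODUCT_BASE = "/product/"


def is_pdp(landing_url: str) -> bool:
    """True if the landing URL's path sits under the product permalink base."""
    return urlparse(landing_url).path.startswith(PRODUCT_BASE)


def pdp_landing_rate(landing_urls: list[str]) -> float:
    """Share of sessions whose first page was a product detail page."""
    if not landing_urls:
        return 0.0
    return sum(is_pdp(u) for u in landing_urls) / len(landing_urls)
```

Run it separately on AI-referred and search-referred landing URLs and compare the two rates. Category pages under the default `/product-category/` base are correctly excluded, because that path does not start with `/product/`.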

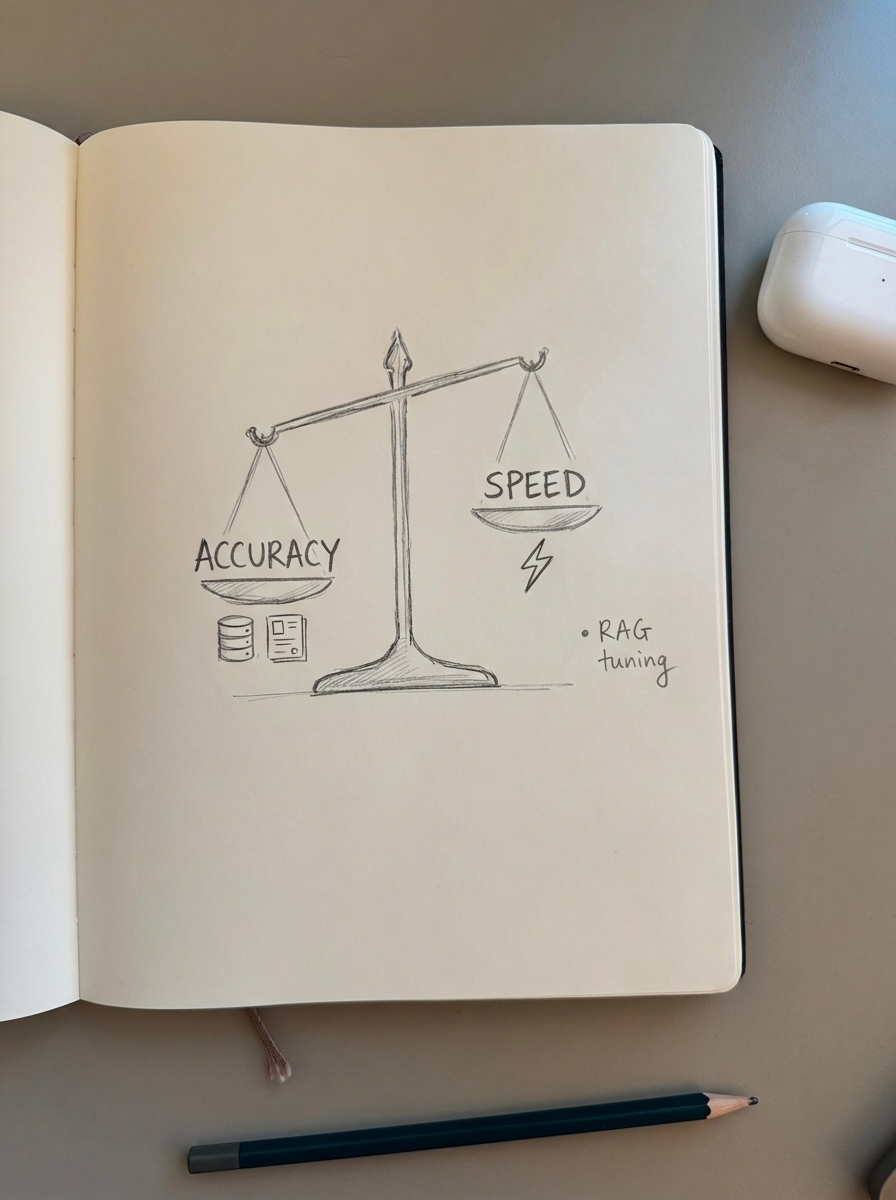

Technical tradeoffs: Accuracy vs. Speed

When you replace default WooCommerce search with on-site LLM-based search, you take on a distinct “hallucination” risk. If your retrieval-augmented generation (RAG) pipeline does not ground the model in current catalog data, the AI might suggest products that are out of stock or even non-existent.
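One common mitigation is to validate every SKU the model recommends against live catalog data before rendering it. This keeps invented or unavailable products away from shoppers. The sketch below is minimal and rests on hypothetical assumptions: that the model returns SKUs, and that your catalog can be loaded into the `CatalogItem` shape shown. The `stock_status` values mirror those WooCommerce reports (`instock`, `outofstock`, `onbackorder`).

```python
from dataclasses import dataclass


@dataclass
class CatalogItem:
    sku: str
    name: str
    stock_status: str  # mirrors WooCommerce: "instock", "outofstock", "onbackorder"


def ground_recommendations(
    suggested_skus: list[str], catalog: dict[str, CatalogItem]
) -> tuple[list[CatalogItem], list[str]]:
    """Keep only suggested SKUs that exist in the live catalog and can be bought.

    Returns (items to show, rejected SKUs) so that rejections can be logged
    and used to tune retrieval or prompts.
    """
    shown: list[CatalogItem] = []
    rejected: list[str] = []
    for sku in suggested_skus:
        item = catalog.get(sku)
        if item is None or item.stock_status == "outofstock":
            rejected.append(sku)  # hallucinated or currently unavailable
        else:
            shown.append(item)  # backordered items are still purchasable
    return shown, rejected
```

This check runs after generation as a safety net. Filtering out-of-stock items before they enter the model's context reduces how often it triggers at all.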

This is where structuring data for LLM retrieval becomes critical for maintaining brand trust. You have to balance several factors:

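- Vector search depth: This determines how many candidate products are retrieved from your indexed catalog and passed to the model as context. Too shallow, and relevant items are missed; too deep, and prompts become slower and more expensive.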

- Latency: Site visitors will not wait ten seconds for an AI to think about their query; you must optimize for near-instant responses.

- Cost: Running high-token queries for every site search can erode margins quickly if not managed, for example by caching answers to frequent queries or, for self-hosted models, through pruning or quantization.
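Depth and cost are linked, because every extra product retrieved (the `k` in top-k retrieval) adds tokens to the prompt. The sketch below uses brute-force cosine similarity over precomputed embeddings. A production system would use a vector index instead. The token counts and per-token price are placeholder assumptions, not any provider's actual rates.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], products: dict[str, list[float]], k: int) -> list[str]:
    """Return the k product IDs whose embeddings are most similar to the query."""
    ranked = sorted(products, key=lambda pid: cosine(query, products[pid]), reverse=True)
    return ranked[:k]


def monthly_cost(searches: int, k: int, tokens_per_product: int,
                 base_prompt_tokens: int, usd_per_1k_tokens: float) -> float:
    """Estimate monthly input-token spend.

    Each search sends the base prompt plus k product descriptions.
    Output tokens are ignored here.
    """
    tokens_per_search = base_prompt_tokens + k * tokens_per_product
    return searches * tokens_per_search / 1000 * usd_per_1k_tokens
```

With the placeholder values `base_prompt_tokens=500` and `tokens_per_product=200`, raising `k` from 5 to 10 increases input tokens per search from 1,500 to 2,500. Monthly spend scales by the same factor, and latency typically grows with prompt length as well.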

Rollout best practices for WooCommerce merchants

If you are looking to optimize for this new era, you should not abandon your technical SEO checklist. In fact, the first step in optimizing for AI search engines is getting traditional SEO fundamentals right. AI models prioritize content relevance and quality over page load speeds, but they still require a clean technical foundation to crawl your site effectively.
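A clean technical foundation starts with not blocking the crawlers you want citations from. Python's standard-library `urllib.robotparser` can check a robots.txt file for you. The crawler list below uses user-agent tokens that the vendors publish (Googlebot serves Google's own results). Treat the list as an assumption to verify against current vendor documentation, because it changes over time.

```python
from urllib.robotparser import RobotFileParser

# Published crawler user-agent tokens; verify against current vendor docs.
AI_CRAWLERS = ["GPTBot", "OAI-SearchBot", "PerplexityBot", "ClaudeBot", "Googlebot"]


def crawler_access(robots_txt: str, url: str) -> dict[str, bool]:
    """Report which crawlers may fetch `url` under the given robots.txt content."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return {agent: parser.can_fetch(agent, url) for agent in AI_CRAWLERS}
```

To check a live site, call `set_url("https://your-store.example/robots.txt")` and `read()` on the parser instead of passing the text in.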

Implement robust schema markup

LLMs are essentially sophisticated pattern matchers. If you do not have comprehensive schema markup, the model has to guess your product’s attributes. I have seen stores achieve a significant increase in AI assistant citations simply by cleaning up their FAQPage and Product schema. Properly implemented JSON-LD provides the explicit signals LLMs need to parse and feature your content as an authoritative answer.
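WooCommerce core already emits basic Product structured data. The sketch below shows the schema.org fields worth auditing, or building yourself if you run custom templates or a headless storefront. The function names and example values are hypothetical; the `Product`/`Offer` properties and availability URLs are standard schema.org vocabulary.

```python
import json


def product_jsonld(name: str, sku: str, description: str, url: str,
                   price: str, currency: str, in_stock: bool) -> str:
    """Build a schema.org Product with a single Offer, serialized as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "description": description,
        "url": url,
        "offers": {
            "@type": "Offer",
            "price": price,             # string keeps "149.00" formatting exact
            "priceCurrency": currency,  # ISO 4217 code, e.g. "USD"
            "availability": ("https://schema.org/InStock" if in_stock
                             else "https://schema.org/OutOfStock"),
            "url": url,
        },
    }
    return json.dumps(data, indent=2)


def script_tag(jsonld: str) -> str:
    """Wrap JSON-LD in the script tag that crawlers look for.

    '<' only appears inside JSON strings, so escaping it as \\u003c is safe; it
    stops a description containing '</script>' from closing the tag early.
    """
    safe = jsonld.replace("<", "\\u003c")
    return f'<script type="application/ld+json">\n{safe}\n</script>'
```

Generate the `availability` value from live stock data rather than hard-coding it. A stale `InStock` claim is exactly the kind of inaccuracy that undermines trust in your data.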

Move from keywords to intent clusters

I recommend you stop thinking about generic keywords and start focusing on the intent behind the query. Use tools for SERP-based keyword clustering to group queries that serve the same user purpose. LLMs favor content that demonstrates topical authority through clusters rather than isolated, thin pages. By grouping semantically related terms, you avoid internal cannibalization and provide the “synthesizable” content chunks that AI models prefer.
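SERP-based clustering places two keywords in the same group when the search engine returns many of the same URLs for both. The reasoning is that the engine already treats them as the same intent. This minimal greedy sketch assumes you already have the top results per keyword from a rank tracker or SERP API. The threshold of 3 shared URLs is an adjustable assumption, not a standard.

```python
def serp_clusters(serps: dict[str, list[str]], min_shared: int = 3) -> list[list[str]]:
    """Greedily cluster keywords whose top results share at least `min_shared` URLs.

    Each keyword joins the first cluster whose seed keyword shares enough URLs
    with it; otherwise it seeds a new cluster. Keywords are processed in insertion
    order, so list high-volume keywords first to make them the seeds.
    """
    clusters: list[tuple[set[str], list[str]]] = []  # (seed URLs, member keywords)
    for keyword, urls in serps.items():
        url_set = set(urls)
        for seed_urls, members in clusters:
            if len(seed_urls & url_set) >= min_shared:
                members.append(keyword)
                break
        else:
            clusters.append((url_set, [keyword]))
    return [members for _, members in clusters]
```

Comparing each keyword only against the cluster's seed, not against every member, prevents chaining, where a cluster slowly drifts away from its original intent.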

Execute a dual-structure content strategy

Your pages must now serve two masters: the human reader and the AI crawler. For the AI, you need clear, machine-readable structures and effective metadata strategies including content type classifications and source identifiers. For the human, you must provide expert insights that AI cannot easily replicate.

When comparing traditional SEO vs. LLMO techniques, it becomes clear that the best content is a hybrid. You should use natural language questions as headers followed by immediate, direct answers to increase your chances of being cited in AI-generated responses.
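The question-header-plus-direct-answer pattern maps naturally onto schema.org `FAQPage` markup. Here is a minimal sketch that builds it from the same question and answer pairs you publish on the page; the function name is illustrative. Google has limited FAQ rich results to a narrow set of sites, so treat this as machine-readable structure rather than a guaranteed search feature.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs.

    Questions should match the natural-language headers on the page, and
    answers should match the direct answers that follow them.
    """
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)
```

Keeping the markup text identical to the visible copy matters. Markup that says something different from the page is a mismatch both crawlers and readers can catch.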

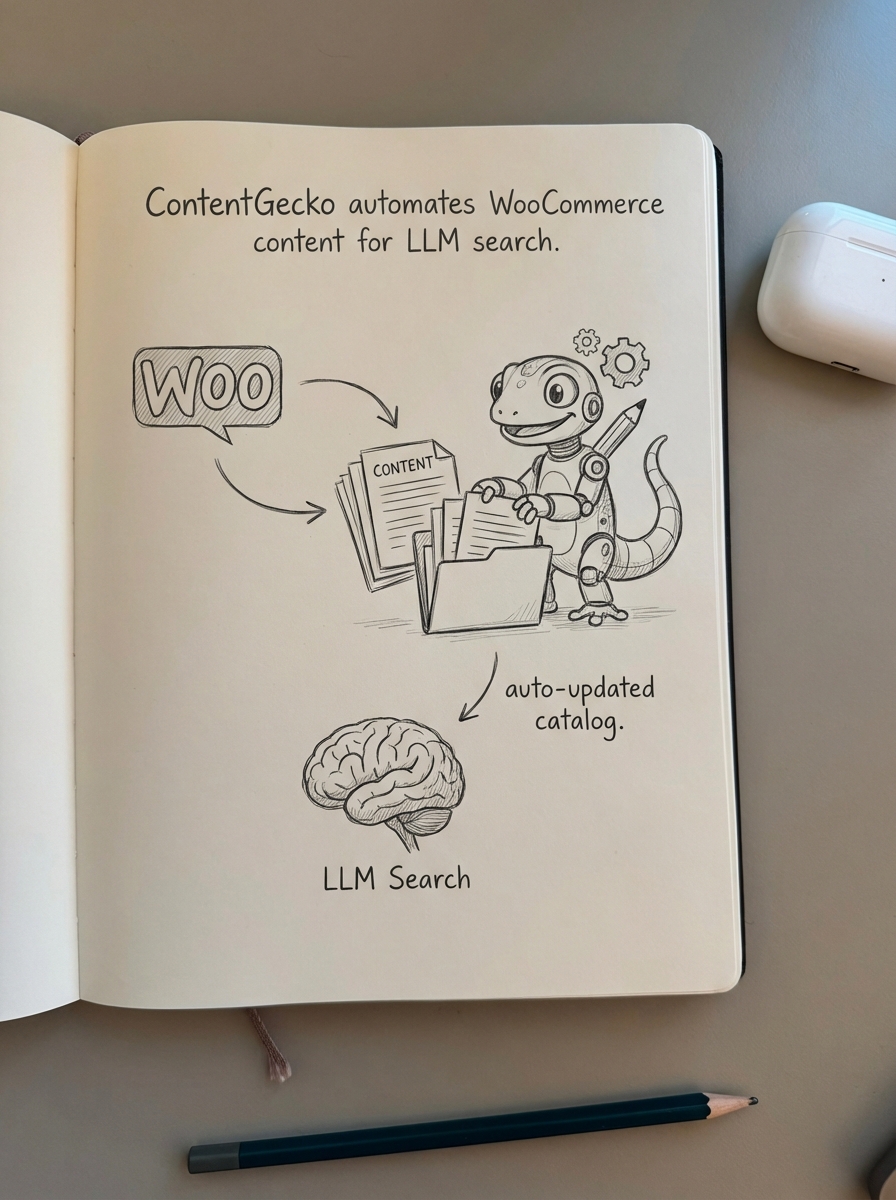

How ContentGecko handles LLM search adoption

For many WooCommerce merchants, keeping up with these content format changes is a massive manual burden. This is why we built ContentGecko to be catalog-aware. Our platform does not just write generic blog posts; it syncs directly with your WooCommerce inventory via our WordPress connector plugin.

When your prices change or SKUs go out of stock, your blog content updates automatically. This ensures that when an LLM crawls your site to provide a recommendation, it receives the most accurate, real-time data possible. By automating the work behind LLM optimization, we allow merchants to scale their content production without publishing stale information or accumulating technical debt.
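The general catalog-aware idea can be illustrated with WooCommerce's public REST API. The sketch below is not ContentGecko's implementation. It is a generic example of rendering product mentions from live data instead of hard-coding them into posts. `GET /wp-json/wc/v3/products` returns fields such as `name`, `permalink`, `price`, and `stock_status`. The store URL, credentials, and currency symbol here are hypothetical placeholders.

```python
import base64
import html
import json
import urllib.request


def fetch_products(store_url: str, key: str, secret: str, page: int = 1) -> list[dict]:
    """Fetch one page of products via the WooCommerce REST API (HTTPS + Basic Auth)."""
    url = f"{store_url.rstrip('/')}/wp-json/wc/v3/products?per_page=100&page={page}"
    token = base64.b64encode(f"{key}:{secret}".encode()).decode()
    req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def render_mention(product: dict, currency_symbol: str = "$") -> str:
    """Render an inline product link with its current price.

    Returns an empty string for out-of-stock products so posts never
    recommend something that cannot be bought.
    """
    if product.get("stock_status") == "outofstock":
        return ""
    href = html.escape(product["permalink"], quote=True)
    name = html.escape(product["name"])
    return f'<a href="{href}">{name}</a> ({currency_symbol}{product["price"]})'
```

Re-rendering mentions whenever product data changes, for example on a schedule or from a webhook, keeps post bodies consistent with the catalog without manual edits.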

I suggest tracking this performance directly in our ecommerce SEO dashboard. It allows you to separate blog performance from category and product pages, giving you a clear view of how your conversational content is driving AI citations and, ultimately, revenue.

TL;DR

LLM search adoption is accelerating, with projections showing it will account for the majority of search traffic by 2028. For ecommerce merchants, the benefits are clear: higher conversion rates and better-qualified traffic that lands directly on product pages. To win in this landscape, you must prioritize structured schema, adopt intent-based content clustering, and ensure your product catalog is perfectly synced with your content strategy. ContentGecko automates this entire pipeline, turning your WooCommerce store into an authoritative source for both Google and the next generation of AI search engines.