LLMO for ecommerce SEO

Large Language Model Optimization (LLMO) is not about gaming a new algorithm; it’s about making your store’s data digestible for models that synthesize information rather than just index links. To win in the era of Perplexity, ChatGPT search, and Google’s AI Overviews, your ecommerce site must move beyond static keywords and toward a catalog-aware content ecosystem.

How LLMO actually works for ecommerce

Traditional SEO focuses on helping a crawler index a page so it can appear in a list of results. LLMO – also known as Generative Engine Optimization (GEO) – focuses on how an AI model retrieves, understands, and cites your content to answer a direct query. For a WooCommerce store, this means the LLM isn’t just looking for “best hiking boots.” It’s looking for a source it can trust to explain why a specific pair of boots in your catalog is better for wide feet or wet terrain.

I have observed that LLM-based search fundamentally changes the user journey. Instead of clicking three different links to compare products, users ask the AI to do the comparison for them. If your data isn’t structured for retrieval, your products simply won’t be part of that conversational output. The shift is from transactional link-clicking to context-aware dialogue.

Debunking common LLMO myths

There is a significant amount of “AI magic” fluff in the market right now that obscures the technical reality of these models. To build a sustainable strategy, we need to clarify what is actually happening under the hood and move past the hype.

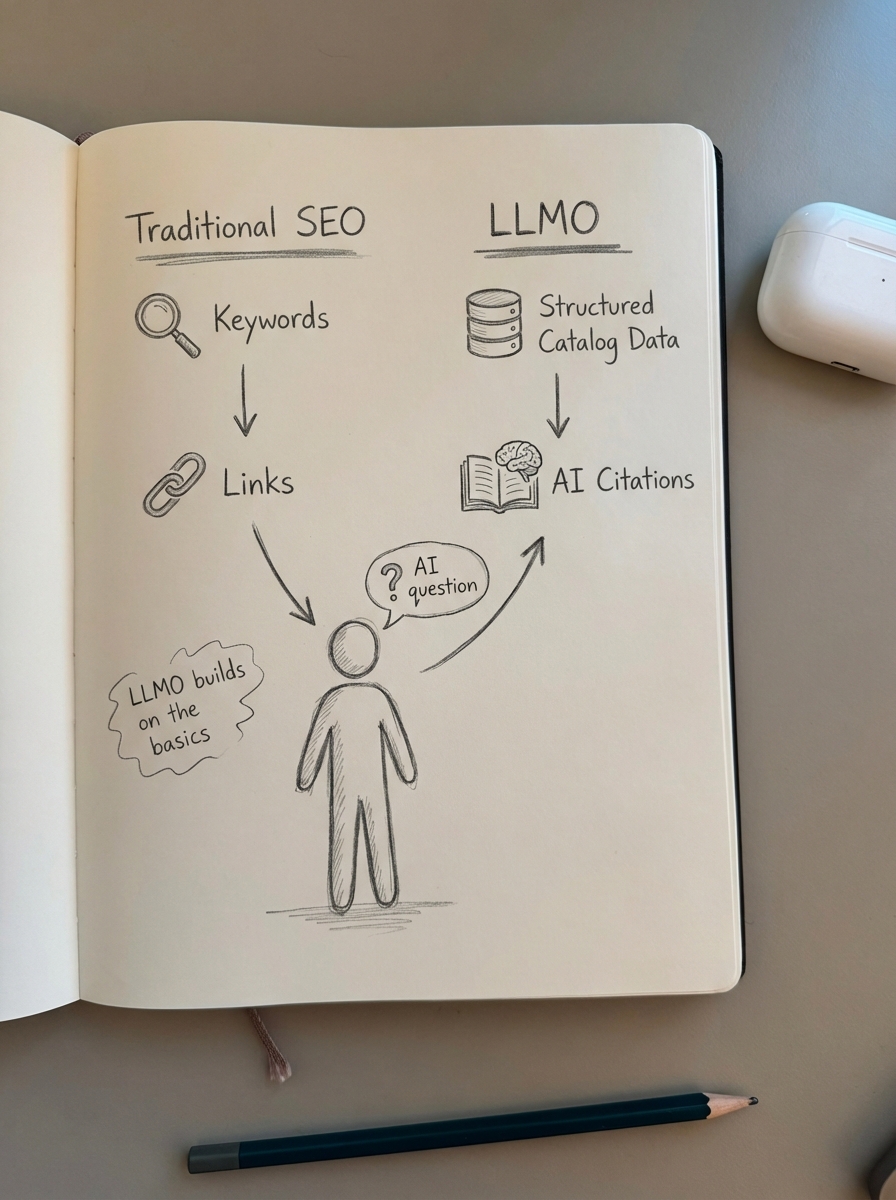

Myth: Traditional SEO is being replaced

The reality is that traditional SEO is the foundation for LLMO. You cannot optimize for an AI model if your technical basics – like site speed, mobile responsiveness, and clean URL structures – are broken. If a bot cannot crawl your site efficiently, an LLM cannot cite you as a source. AI search engines still rely on the indexability and authority of your domain to verify the facts they present to users.

Myth: Generic AI-generated content ranks well

I’ve seen too many merchants assume they can prompt a generic model to “write 50 product descriptions” and consider the job done. Generic models often produce thin content that lacks domain expertise and offers no “information gain” – new insight that isn’t already present in the top search results. Research into LLM limitations suggests that generic models tend to be less accurate in specialized domains because they lack context. To rank, your content must offer unique value that a generic prompt cannot replicate.

Myth: LLM hallucinations are unavoidable in ecommerce

In ecommerce, a hallucination is a disaster – such as an AI claiming a product is in stock when it isn’t, or quoting an outdated price. These errors usually occur because the model lacks real-time context at the moment it answers. When you use a catalog-synced approach for WooCommerce SEO, you provide the LLM with current data including SKUs, pricing, and stock status. This is a form of Retrieval-Augmented Generation (RAG): the relevant catalog records are retrieved and placed in the prompt, so the output is grounded in your actual, live inventory rather than training data from two years ago. It makes hallucinations far less likely, though no method removes them entirely.
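As a rough illustration of the catalog-grounded idea, here is a minimal Python sketch. Everything in it is an assumption made for the example: the `CatalogItem` fields, the word-overlap retrieval (a real system would more likely use embeddings or a search index), and the prompt wording. It only shows the shape of the technique: retrieve matching catalog records, then put their live price and stock status into the prompt.

```python
from dataclasses import dataclass


@dataclass
class CatalogItem:
    # Hypothetical record shape; a real store would load these from its catalog.
    sku: str
    name: str
    price: float
    currency: str
    in_stock: bool
    description: str


def retrieve(catalog: list[CatalogItem], query: str, top_k: int = 2) -> list[CatalogItem]:
    """Rank items by how many query words appear in their name or description."""
    query_words = set(query.lower().split())

    def score(item: CatalogItem) -> int:
        item_words = set(f"{item.name} {item.description}".lower().split())
        return len(query_words & item_words)

    ranked = sorted(catalog, key=score, reverse=True)
    # Drop items that share no words with the query at all.
    return [item for item in ranked[:top_k] if score(item) > 0]


def build_grounded_prompt(query: str, items: list[CatalogItem]) -> str:
    """Place the retrieved catalog facts in the prompt so the model answers from them."""
    facts = "\n".join(
        f"- SKU {i.sku}: {i.name}, {i.price:.2f} {i.currency}, "
        f"{'in stock' if i.in_stock else 'out of stock'}"
        for i in items
    )
    return (
        "Answer using only the catalog facts below. "
        "If the facts do not cover the question, say so.\n"
        f"Catalog facts:\n{facts}\n\nQuestion: {query}"
    )
```

The resulting string would be sent to whichever model you use. The point of the design is that price and availability come from the catalog at request time, not from whatever the model remembers.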

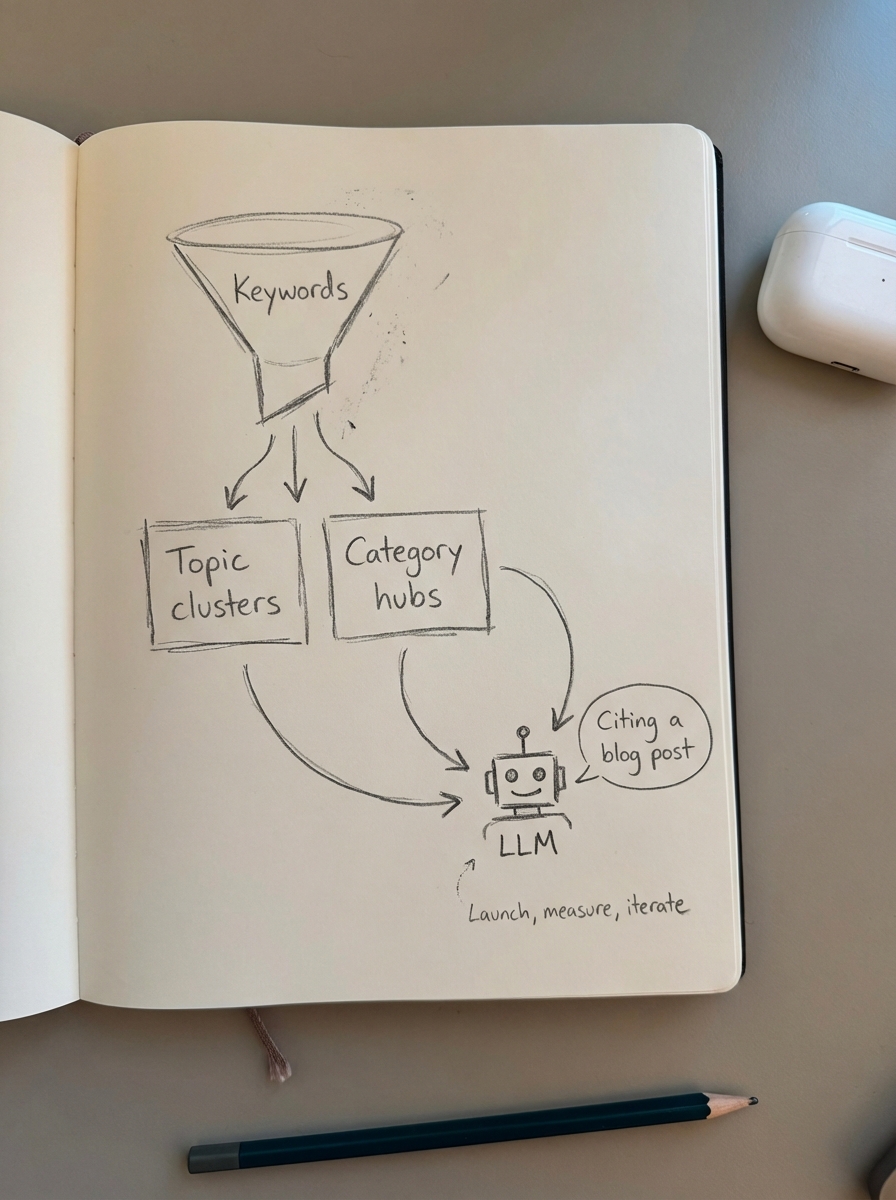

The strategic shift: Categories and blogs

In my experience, the most common technical SEO mistake is maintaining a bloated website with thousands of duplicate or near-duplicate product pages. If you want to move the needle with LLMO, you should focus your energy on category-level visibility and high-quality blog content.

It is far more important to optimize category pages than individual product pages. Most ecommerce sites use vague category names that give LLMs zero context to work with. By making category names more specific and educational, you provide a “hub” that AI models can easily parse and recommend. You can use a free category optimizer tool to identify exactly where your current naming conventions are failing to capture buyer intent.

If the basics of your store are already well-optimized, the remaining opportunity is almost entirely in producing a great blog. This isn’t about “thought leadership” that no one is searching for; it’s about creating SEO topic clusters for WooCommerce that answer specific buyer questions. An LLM is much more likely to cite a detailed blog post titled “How to Choose the Right Yoga Mat for Hot Yoga” that links to your specific products than it is to cite a standalone product page. At ContentGecko, we facilitate this by syncing your blog production to your WooCommerce catalog, ensuring every article is aware of your current SKUs and stock levels.

Technical requirements for LLM retrieval

To be cited by generative engines, your data needs to be structured properly for machines to interpret without ambiguity. LLMs favor content that they can easily verify against other sources on the web.

- Comprehensive WooCommerce structured data is mandatory. Schema.org markup tells the AI exactly what the price, availability, and brand of a product are. Without this, the AI is essentially guessing.

- Your content must be entity-centric. AI models think in “entities” – objects, people, and places. Your content should clearly define the relationship between your products (the entities) and the problems they solve for the user.

- Freshness is a major factor for AI visibility. If your price or specs change but your content doesn’t reflect it, the page and its structured data will contradict each other, and a model that detects the conflict is less likely to trust or cite you.
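To make the structured data and freshness points concrete, the sketch below builds Schema.org `Product` markup as JSON-LD and checks it against live values. The `Product`, `Brand`, and `Offer` types and the `InStock` / `OutOfStock` availability values are part of the Schema.org vocabulary; the function names, parameters, and the staleness check are hypothetical and exist only for illustration. In practice a WooCommerce plugin usually generates this markup for you, so treat this as a way to understand what to verify.

```python
import json


def product_jsonld(name: str, sku: str, brand: str, price: float,
                   currency: str, in_stock: bool, url: str) -> str:
    """Build Schema.org Product markup as a JSON-LD string."""
    availability = "https://schema.org/InStock" if in_stock else "https://schema.org/OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "brand": {"@type": "Brand", "name": brand},
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": availability,
        },
    }
    return json.dumps(data, indent=2)


def markup_is_stale(jsonld: str, live_price: float, live_in_stock: bool) -> bool:
    """Return True when the markup disagrees with the live price or stock status."""
    offer = json.loads(jsonld)["offers"]
    price_ok = float(offer["price"]) == round(live_price, 2)
    stock_ok = offer["availability"].endswith("/InStock") == live_in_stock
    return not (price_ok and stock_ok)
```

The JSON-LD string would be embedded in the page inside a `<script type="application/ld+json">` tag. Running a check like `markup_is_stale` whenever the catalog changes is one simple way to catch the page-versus-markup conflicts described above.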

How to start with LLMO

I recommend an “MVP-first” approach to content: launch quickly with AI assistance, then iterate based on performance. You don’t need to over-engineer your strategy on day one.

- Group your keywords based on intent. Use SERP-based keyword clustering, which groups keywords whose search results largely overlap, rather than chasing individual keywords.

- Launch AI-assisted drafts to cover your core topics. AI levels the playing field, allowing small companies to perform like giants by producing high-quality content at a fraction of the traditional cost.

- Monitor your results and iterate. Track how often you are being cited in AI responses and appearing in featured snippets. If an article starts gaining traction, go back and improve it with more expert insight or updated data.

- Conduct a regular LLMO audit to ensure your technical foundations remain aligned with how generative engines retrieve information.
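The SERP-based clustering step in the list above can be sketched in a few lines of Python. This is a simplified greedy version under stated assumptions: the input is a hypothetical mapping from each keyword to the top result URLs you have already collected for it, and `min_shared` (how many URLs two keywords must share to count as the same intent) is an arbitrary threshold you would tune. Dedicated tools use more refined methods.

```python
def cluster_keywords(serps: dict[str, list[str]], min_shared: int = 3) -> list[list[str]]:
    """Greedily group keywords whose result URLs overlap with a cluster's first keyword."""
    clusters: list[tuple[set[str], list[str]]] = []
    for keyword, urls in serps.items():
        url_set = set(urls)
        for seed_urls, members in clusters:
            # Join the first cluster whose seed keyword shares enough result URLs.
            if len(seed_urls & url_set) >= min_shared:
                members.append(keyword)
                break
        else:
            # No cluster matched, so this keyword starts a new one.
            clusters.append((url_set, [keyword]))
    return [members for _, members in clusters]
```

Each resulting cluster is a candidate for a single article or category page, since the search engine already treats those keywords as the same intent.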

Success in this new landscape requires structuring your data for LLM retrieval so that your brand remains the primary source for the AI’s answers.

TL;DR

- LLMO is an evolution of SEO that focuses on making your content citable by AI models like ChatGPT and Google Gemini.

- Traditional SEO basics like site speed and clean architecture remain the mandatory floor for LLMO success.

- Prioritize category page optimization over individual product pages to create better “hubs” for AI discovery.

- Use a catalog-synced approach to prevent hallucinations and ensure your content always reflects live pricing and stock status.

- Build a blog focused on intent-driven topic clusters rather than generic product descriptions or unsearched thought-leadership.