Auditing and improving LLMO for WooCommerce stores

Large Language Model Optimization (LLMO) is the new frontier for WooCommerce merchants, shifting the focus from ranking in a list of blue links to becoming the primary source of truth for AI agents. The bottom line is that the era of traditional search dominance is fading; with AI overviews potentially reducing website clicks by up to 34.5%, your store must pivot to being “citation-ready” for platforms like ChatGPT, Perplexity, and Google Gemini.

This shift is accelerating rapidly. Research indicates that 58% of US consumers now use generative AI for product recommendations, and we expect traditional search usage to decline by 25% by 2026. For a growth-focused store owner, LLMO isn’t a luxury – it is the defensive and offensive strategy needed to capture a share of the 1.1 billion queries processed daily by ChatGPT.

How to audit your store’s LLM visibility

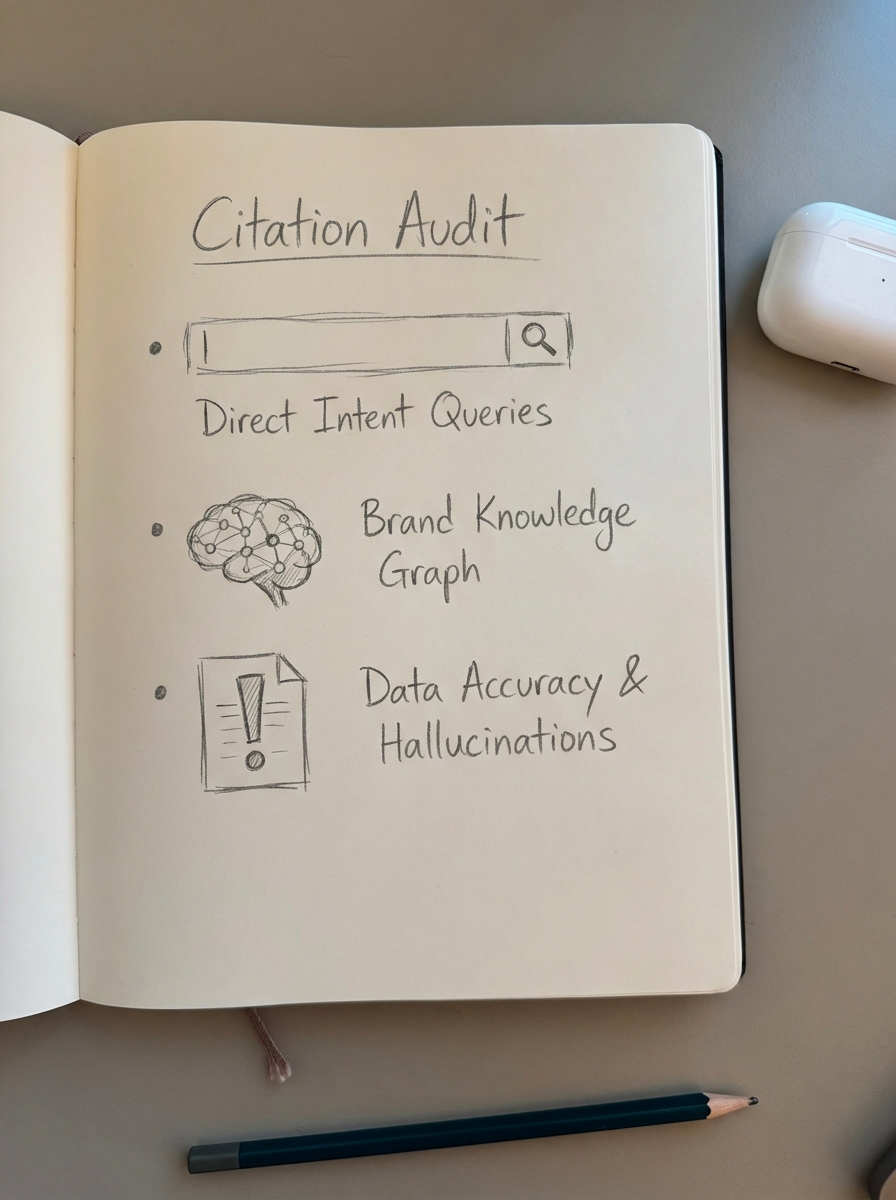

You cannot fix what you cannot measure, but LLMO auditing is far more qualitative than traditional rank tracking. I always suggest starting with what I call a “Citation Audit.” This involves moving beyond Search Console and directly querying the models to see how they retrieve and interpret your catalog data.

I recommend testing three specific layers of LLM retrieval:

- Direct Intent Queries: Ask Perplexity or ChatGPT, “What are the best [Category Name] for [Specific Use Case]?” and check if your store is cited in the sources.

- Brand Knowledge Graph: Ask the AI, “What is [Your Brand] known for?” to determine if the model understands your specific niche and topical authority.

- Data Accuracy and Hallucination: Ask, “What is the current price of [Product Name] at [Your Brand]?” to see if the model is pulling from your live product pages and structured data (an XML sitemap only lists URLs, not prices) or relying on stale or hallucinated training data.

If your store is missing from these answers, it usually indicates that the models find your site architecture too opaque or your data too inconsistent to “trust” for a live recommendation.
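The three audit layers above can be turned into a repeatable checklist. Here is a minimal sketch, assuming you paste each prompt into the assistant by hand and copy back the list of source URLs it shows. The names `build_audit_prompts` and `is_cited` are hypothetical helpers for illustration, not part of any tool mentioned here.

```python
from urllib.parse import urlparse

# Prompt templates mirroring the three audit layers described above.
AUDIT_TEMPLATES = {
    "direct_intent": "What are the best {category} for {use_case}?",
    "brand_knowledge": "What is {brand} known for?",
    "data_accuracy": "What is the current price of {product} at {brand}?",
}


def build_audit_prompts(brand, category, use_case, product):
    """Fill each template with store-specific values (hypothetical helper)."""
    values = {
        "brand": brand,
        "category": category,
        "use_case": use_case,
        "product": product,
    }
    return {
        layer: template.format(**values)
        for layer, template in AUDIT_TEMPLATES.items()
    }


def is_cited(store_domain, cited_urls):
    """Return True if any cited URL is on the store's domain or a subdomain."""
    store_domain = store_domain.lower()
    if store_domain.startswith("www."):
        store_domain = store_domain[4:]
    for url in cited_urls:
        host = (urlparse(url).hostname or "").lower()
        if host == store_domain or host.endswith("." + store_domain):
            return True
    return False
```

Running the same prompts on a fixed schedule and logging the `is_cited` result per prompt gives you a simple baseline to compare against after each round of changes.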

The technical foundation: Structured data and schema

The first step in optimizing for AI search engines is actually mastering the basics of traditional technical SEO. The crawlers and retrieval systems that feed LLMs work best with clean, structured data. We view schema as the “invisible architecture” that helps an AI model move past the “noise” of your design and straight to the facts.

For WooCommerce, you must implement a robust framework of structured schema markup that goes beyond simple product names. To truly move the needle, your store needs:

- Comprehensive Product and Offer Schema: Including price, currency, and real-time availability signals. An AI model is far more likely to recommend a product when it can verify the item is “in stock.”

- FAQ Schema for Conversational Queries: AI assistants often “lift” direct answers from these blocks. By marking up clear question-and-answer pairs (schema.org’s FAQPage type), you provide the exact snippet the model needs for a chat response.

- Review and AggregateRating Schema: Expertise, Authoritativeness, and Trustworthiness (E-A-T, which Google has since expanded to E-E-A-T by adding Experience) are major signals. High rating counts help models categorize your store as a reputable recommendation.

Properly implemented WooCommerce structured data ensures that when an AI agent “crawls” your site, it doesn’t just see text – it sees a verifiable database of products.
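To make the Product, Offer, and AggregateRating pieces concrete, here is a minimal sketch of the JSON-LD such a page would carry. The property names come from schema.org. The function names, the example values, and the idea of generating the markup in Python are assumptions for illustration only; on a real store this markup is normally emitted by WooCommerce itself or an SEO plugin.

```python
import json


def product_jsonld(name, sku, url, price, currency, in_stock,
                   rating_value=None, review_count=0):
    """Build a schema.org Product dict with a nested Offer (illustrative helper)."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "sku": sku,
        "offers": {
            "@type": "Offer",
            "url": url,
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            # Availability is the "in stock" signal discussed above.
            "availability": (
                "https://schema.org/InStock"
                if in_stock
                else "https://schema.org/OutOfStock"
            ),
        },
    }
    # Only add ratings when real reviews exist; never emit empty ratings.
    if rating_value is not None and review_count > 0:
        data["aggregateRating"] = {
            "@type": "AggregateRating",
            "ratingValue": rating_value,
            "reviewCount": review_count,
        }
    return data


def to_script_tag(data):
    """Serialize the dict into a JSON-LD script tag for the page head."""
    # Escape "</" so a stray "</script>" inside a value cannot close the tag.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">' + payload + "</script>"
```

The key design choice is that price and availability are derived from live catalog values at render time rather than typed by hand, so the markup cannot drift from what the shopper sees.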

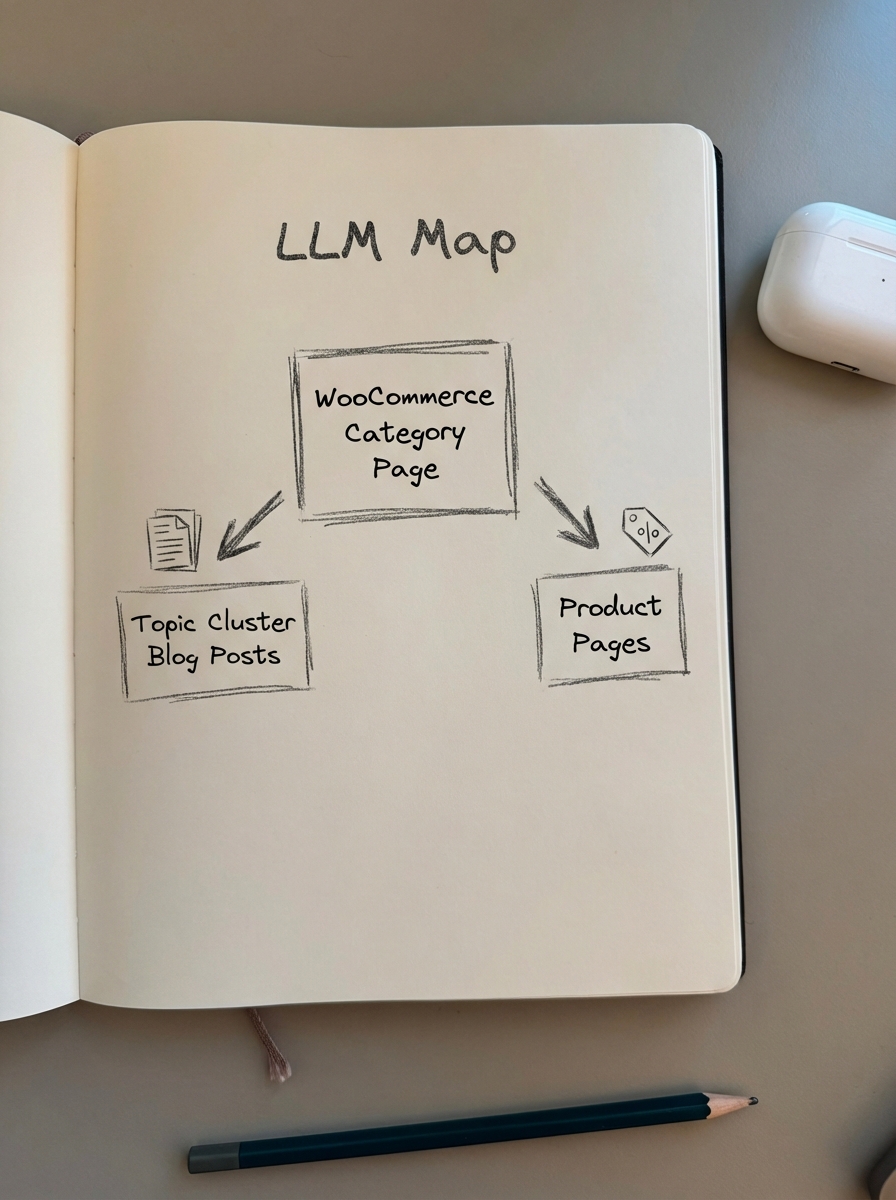

Why category pages are the LLM map

In my experience, the most common ecommerce SEO mistake is over-optimizing product pages while neglecting category pages. When it comes to LLMO, your category pages are actually the “hubs” that define your Topical Authority. They provide the context the AI needs to understand your store’s expertise.

Most stores use vague category names like “Clothing” or “Tools,” which tells an LLM almost nothing. I’ve found that using the free ecommerce category optimizer to create specific names – like “Heavy Duty Cordless Drills for Construction” – drastically improves retrieval rates.

By building WooCommerce topic clusters, you turn your category page into a semantic pillar. When this hub links out to informational blog content and specific products, it creates a knowledge graph that AI crawlers can easily digest. This allows the model to “understand” that you aren’t just selling a product; you are an authority in that specific category.
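One way to check whether a category page really works as a hub is to list what it links to and flag the gaps. This is a minimal sketch, assuming you have already exported each category's outgoing links into a dictionary; the structure and the `cluster_gaps` name are hypothetical.

```python
def cluster_gaps(clusters):
    """Return hubs that lack links to guides or products.

    clusters maps a category URL to {"guides": [...], "products": [...]}.
    """
    gaps = {}
    for hub, links in clusters.items():
        # A hub needs at least one link of each kind to form a cluster.
        missing = [kind for kind in ("guides", "products") if not links.get(kind)]
        if missing:
            gaps[hub] = missing
    return gaps


clusters = {
    "/cordless-drills-for-construction/": {
        "guides": ["/blog/choosing-a-cordless-drill/"],
        "products": ["/product/x1-drill/"],
    },
    "/tools/": {"guides": [], "products": ["/product/hammer/"]},
}
print(cluster_gaps(clusters))
```

In this made-up example, the vague “/tools/” hub is flagged because it has no supporting informational content.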

Improving LLMO through catalog-aware content

Models like Perplexity and Gemini favor content that demonstrates real-world experience. Thin, static articles are being ignored in favor of “fresh” content that reflects the current state of your business. To remain a preferred source, your content must be updated more frequently than traditional SEO might require.

Effective LLMO content update frequency is critical because AI models prioritize information that isn’t stale. If your buyer’s guide for “Best Running Shoes” was last touched in 2023, the model will likely pass you over for a competitor who updated their guide last week. Furthermore, your content must be “catalog-aware.” There is nothing that kills LLM trust faster than recommending a product that is out of stock or has a different price than what is listed on the page.
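A catalog-aware check can be sketched in a few lines. This example assumes you can export product mentions from your content (SKU plus the price quoted in the article) and a snapshot of the live catalog. The class and function names are hypothetical, and this is not how any particular platform implements it.

```python
from dataclasses import dataclass


@dataclass
class CatalogItem:
    sku: str
    price: float
    in_stock: bool


@dataclass
class ContentMention:
    sku: str
    quoted_price: float


def find_stale_mentions(mentions, catalog):
    """Return (sku, reason) pairs where content disagrees with the catalog.

    catalog maps SKU -> CatalogItem.
    """
    issues = []
    for mention in mentions:
        item = catalog.get(mention.sku)
        if item is None:
            issues.append((mention.sku, "not in catalog"))
        elif not item.in_stock:
            issues.append((mention.sku, "out of stock"))
        elif abs(item.price - mention.quoted_price) > 0.005:
            # Tolerance avoids false alarms from float rounding.
            issues.append((mention.sku, "price mismatch"))
    return issues
```

Any article that returns a non-empty list is a candidate for an update before an AI model picks up the outdated details.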

We built ContentGecko to solve this specific disconnect. By using a secure WordPress connector plugin, our platform syncs directly with your WooCommerce inventory. This ensures every piece of blog content and every buyer’s guide is automatically aware of your current SKUs, prices, and stock levels. This level of accuracy is what makes your content “citation-worthy” in a conversational search environment.

Tracking LLMO performance and ROI

Traditional rank tracking is losing its utility in an AI-first world. We are seeing a shift where large language model optimization requires an entirely new set of KPIs to justify the investment. When we monitor LLMO performance, we ignore “average position” and look at:

- Citation Frequency: How often your site appears as a cited source in generative answers.

- Content Retrieval Rate: The percentage of relevant queries that successfully surface your content in the LLM’s output.

- AI Referral Volume: Traffic arriving from AI assistant domains such as chatgpt.com (formerly chat.openai.com) or perplexity.ai.

Early adopters of these techniques often see a 3–15% revenue lift as they capture traffic that never even reaches a traditional Google search results page. You can use our ecommerce SEO dashboard to begin segmenting this traffic and understanding the ROI of LLM optimization for your specific catalog.
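The three KPIs can be computed from very simple inputs. Here is a minimal sketch, assuming you log one record per audited query and can export referrer URLs from your analytics. The `AuditResult` fields and the host list are assumptions for illustration; extend the hosts to match what actually shows up in your own reports.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Assumed referrer hosts for AI assistants; adjust for your own analytics.
AI_REFERRER_HOSTS = {
    "chat.openai.com",
    "chatgpt.com",
    "perplexity.ai",
    "www.perplexity.ai",
}


@dataclass
class AuditResult:
    query: str
    cited: bool     # store linked as a source in the answer
    surfaced: bool  # store content or products appear in the answer text


def share(flags):
    """Fraction of True values; 0.0 for an empty input."""
    flags = list(flags)
    return sum(flags) / len(flags) if flags else 0.0


def citation_frequency(results):
    return share(r.cited for r in results)


def content_retrieval_rate(results):
    return share(r.surfaced for r in results)


def ai_referral_volume(referrer_urls):
    """Count visits whose referrer host is a known AI assistant."""
    count = 0
    for url in referrer_urls:
        host = (urlparse(url).hostname or "").lower()
        if host in AI_REFERRER_HOSTS:
            count += 1
    return count
```

Tracking these numbers over the same fixed query set each month makes trends comparable, which matters more than any single reading.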

TL;DR

LLMO for WooCommerce requires a fundamental shift from keyword-centric content to semantic, catalog-aware authority. Start by auditing your current brand citations in chat engines and double down on highly specific category naming. By combining robust structured data with a blog that syncs directly to your live WooCommerce inventory, you provide AI models with the reliable, fresh data they need to cite your store as the preferred recommendation for their users.