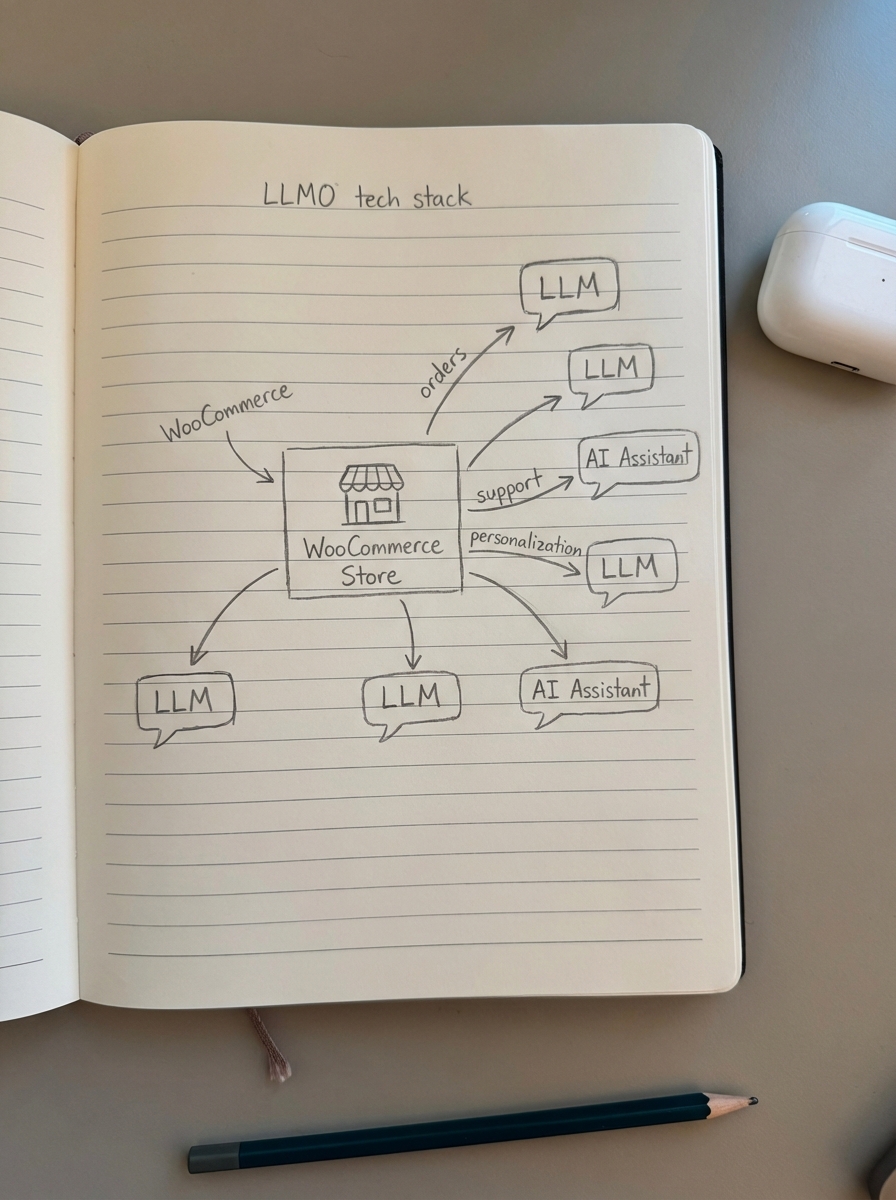

Building an effective LLMO tech stack for WooCommerce

The search landscape is undergoing its most aggressive shift since the advent of mobile. Large Language Model Optimization (LLMO) is no longer a theoretical experiment – it is a survival requirement for store owners, as AI Overviews begin to cut click-through rates on traditional results by up to 34.5%.

To stay visible, you need a tech stack that does not just rank for keywords but makes your store the primary source for AI agents. I have spent the last year refining how we integrate AI into ecommerce workflows, and the reality is that most “AI SEO” tools are simply gamifying old metrics. A true LLMO stack requires two shifts: from keyword density to semantic relevance, and toward structuring data for LLM retrieval so that models can parse your catalog accurately.

LLMO vs. traditional SEO: Why your current stack is insufficient

Traditional SEO is built on a “link and rank” model. You optimize a page, Google indexes it, and a user clicks a blue link. LLMO, or Generative Engine Optimization (GEO), is built on a “synthesize and cite” model. Platforms like Perplexity, ChatGPT, and Google Gemini do not just want to link to you; they want to use your data to answer a query directly.

If your content is not formatted for large language model optimization, these agents will simply bypass you. Traditional SEO tools are great for finding historical search volume, but they fail to capture the conversational intent that defines AI search, and they cannot show you the ROI of LLM optimization. We have found that the first step to LLMO is getting traditional SEO right: you cannot optimize for an LLM if the underlying technical foundation is broken. Once the basics are set, you need a stack that handles the heavy lifting of semantic clustering and automated updates.

The essential components of an LLMO tech stack

An effective LLMO stack for WooCommerce must solve three specific problems: data structure, semantic content creation, and real-time catalog synchronization. This requires moving away from static pages toward a dynamic knowledge base that an AI can query.

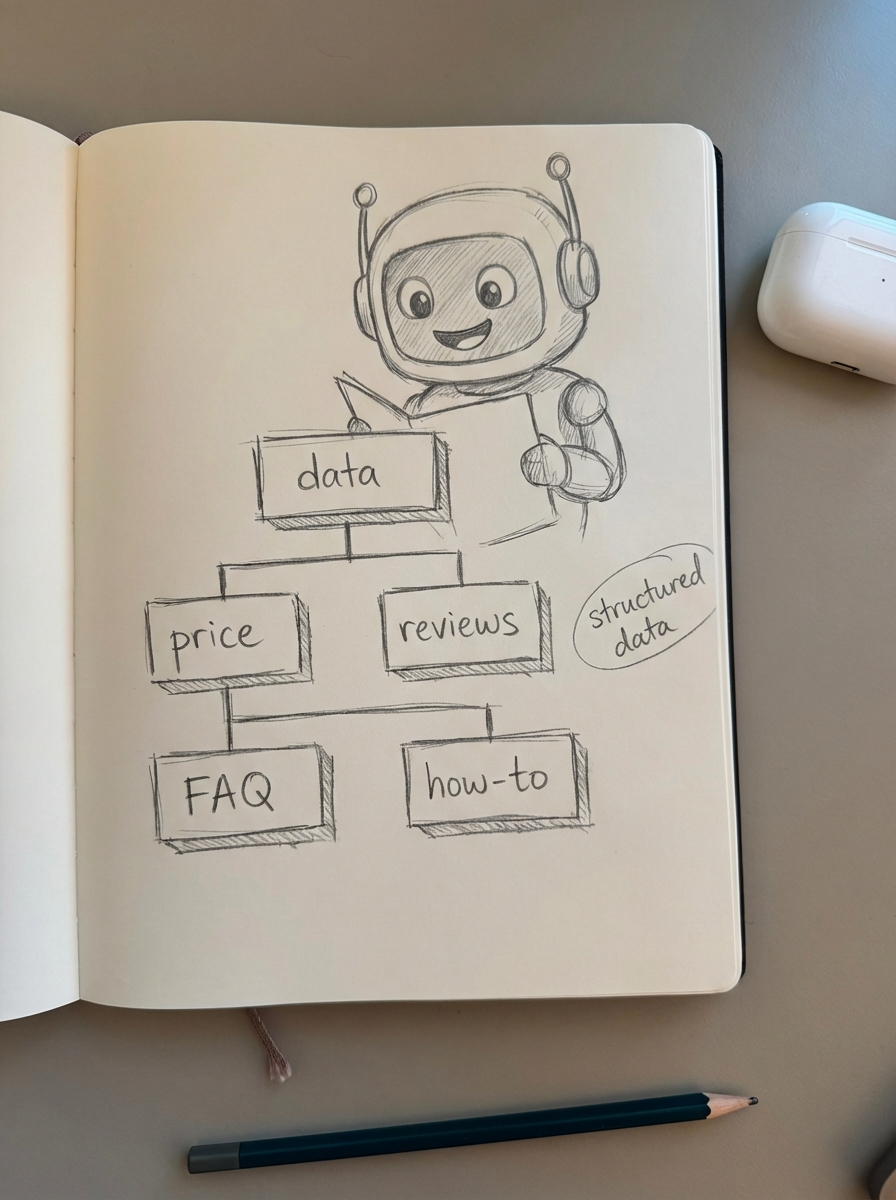

The technical foundation: Structured data and Schema

LLMs rely heavily on structured data to interpret the relationship between products, prices, and intent. For WooCommerce, an SEO plugin is the bare minimum: it handles the basic JSON-LD schema – such as the Product, FAQPage, and Review types – that helps AI agents parse your site.

Generic schema is rarely enough to win. To truly dominate in LLM search, you must provide context-rich data. I focus on effective metadata strategies that go beyond the SKU, including “how-to” guides and comprehensive comparison data that AI agents love to cite. Think of your schema as the “invisible architecture” that tells an LLM exactly what your content is about without it having to guess.
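To make the idea of schema concrete, here is a minimal sketch of what a Product JSON-LD block looks like when it is generated from catalog data. The record fields (`name`, `sku`, `price`, `currency`, `in_stock`) and the sample product are hypothetical; a WooCommerce SEO plugin would normally emit this markup for you, so treat this as an illustration of the output rather than something to deploy.

```python
import json


def product_jsonld(product: dict) -> str:
    """Serialize a catalog record as a schema.org Product JSON-LD string."""
    availability = "InStock" if product["in_stock"] else "OutOfStock"
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "description": product["description"],
        "offers": {
            "@type": "Offer",
            # schema.org prices are plain decimal strings without currency symbols.
            "price": f"{product['price']:.2f}",
            "priceCurrency": product["currency"],
            "availability": f"https://schema.org/{availability}",
        },
    }
    return json.dumps(data, indent=2)


# Hypothetical catalog record, used only for illustration.
example = {
    "name": "Two-Person Trekking Tent",
    "sku": "TENT-2P",
    "description": "Lightweight double-wall tent for three-season use.",
    "price": 129.0,
    "currency": "EUR",
    "in_stock": True,
}

# The JSON-LD is embedded in the page inside a script tag of this type.
print(f'<script type="application/ld+json">\n{product_jsonld(example)}\n</script>')
```

The context-rich data discussed above (how-to steps, comparisons) would be added as further schema.org types alongside this block, not crammed into the Product description.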

The intelligence layer: Semantic clustering and research

You have to stop looking at individual keywords and start looking at topics. LLMs understand semantic relationships, not just character strings. If you are still trying to rank one page for one keyword, you are already losing to competitors who cover entire topical maps.

I use our free SERP keyword clustering tool to group keywords by search result similarity. This prevents cannibalization and identifies the gaps in your topical authority. If an LLM sees you have dozens of high-quality, interlinked articles on a specific niche, it is far more likely to cite you as an expert source when a user asks for a recommendation.
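Grouping by search result similarity can be sketched in a few lines. The version below is an assumption about how such clustering can work in general, not a description of how the tool itself is implemented: it measures the Jaccard overlap between the top-ranking URLs of two keywords and greedily assigns each keyword to the first cluster whose seed keyword it overlaps with. The function names, the `0.3` threshold, and the sample data are all hypothetical.

```python
from __future__ import annotations


def serp_overlap(a: set[str], b: set[str]) -> float:
    """Jaccard similarity between two sets of ranking URLs."""
    if not a or not b:
        return 0.0
    return len(a & b) / len(a | b)


def cluster_keywords(
    serps: dict[str, set[str]], threshold: float = 0.3
) -> list[list[str]]:
    """Greedily group keywords whose SERPs overlap with a cluster's seed keyword."""
    clusters: list[list[str]] = []
    for keyword, urls in serps.items():
        for cluster in clusters:
            seed = cluster[0]  # the first keyword in a cluster acts as its seed
            if serp_overlap(urls, serps[seed]) >= threshold:
                cluster.append(keyword)
                break
        else:
            # No existing cluster was similar enough, so start a new one.
            clusters.append([keyword])
    return clusters


# Hypothetical SERP data: keyword -> URLs ranking on page one.
serps = {
    "best 2 person tent": {"a.com/tents", "b.com/2p-tents", "c.com/guide"},
    "two person tent review": {"a.com/tents", "b.com/2p-tents", "d.com/review"},
    "how to wash a sleeping bag": {"e.com/wash", "f.com/care"},
}
for group in cluster_keywords(serps):
    print(group)
```

Keywords that land in the same cluster can usually be served by one page, which is what prevents cannibalization; a cluster with no page of your own is a topical gap.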

The execution layer: ContentGecko for automated LLMO

High-quality LLMO content requires constant updates to remain reliable. If your product price changes or a SKU goes out of stock while your blog post still references the old data, an LLM may flag your content as inaccurate and stop citing it.

We built ContentGecko to solve this specific bottleneck. It functions as an automated SEO content platform that connects directly to your store. Through our WordPress connector plugin, the platform maintains a live sync with your catalog. It produces conversational, citation-ready guides that answer the questions people are actually asking ChatGPT, and it automatically refreshes the content whenever your prices or inventory levels shift.
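The underlying check is simple to illustrate. The sketch below is not ContentGecko's implementation; it is a hypothetical, minimal version of the idea, assuming you can export a catalog snapshot and a list of the product facts each post cites. `CatalogItem`, `CitedFact`, and `stale_posts` are names invented for this example.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class CatalogItem:
    sku: str
    price: Decimal
    in_stock: bool


@dataclass(frozen=True)
class CitedFact:
    """A product fact that a published post states (hypothetical structure)."""
    post_slug: str
    sku: str
    price: Decimal


def stale_posts(
    catalog: dict[str, CatalogItem], facts: list[CitedFact]
) -> dict[str, list[str]]:
    """Map each post slug to the reasons it no longer matches the catalog."""
    reasons: dict[str, list[str]] = {}
    for fact in facts:
        item = catalog.get(fact.sku)
        if item is None:
            problem = f"{fact.sku}: no longer in catalog"
        elif not item.in_stock:
            problem = f"{fact.sku}: out of stock"
        elif item.price != fact.price:
            problem = f"{fact.sku}: price changed {fact.price} -> {item.price}"
        else:
            continue  # the post still agrees with the catalog
        reasons.setdefault(fact.post_slug, []).append(problem)
    return reasons


# Hypothetical data for illustration.
catalog = {
    "TENT-2P": CatalogItem("TENT-2P", Decimal("119.00"), True),
    "BAG-0C": CatalogItem("BAG-0C", Decimal("89.00"), False),
}
facts = [
    CitedFact("best-tents", "TENT-2P", Decimal("129.00")),
    CitedFact("winter-kit", "BAG-0C", Decimal("89.00")),
]
for slug, problems in stale_posts(catalog, facts).items():
    print(slug, problems)
```

Prices are held as `Decimal` rather than `float` so that equality comparisons are exact.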

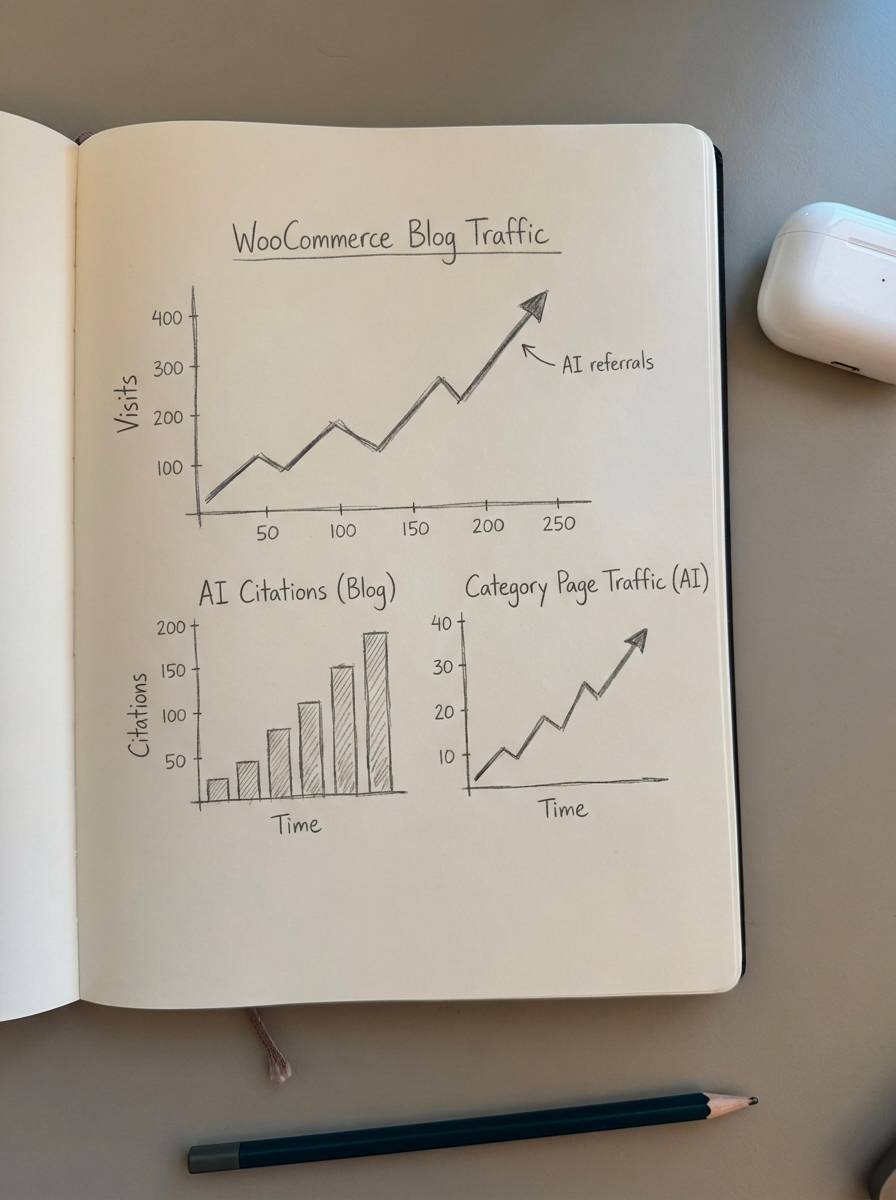

The monitoring layer: Tracking citations and AI traffic

You cannot track LLMO success with traditional rank trackers. You need to look at citation frequency and AI-driven referral traffic. Traditional metrics like keyword rankings are declining in relevance as users shift toward direct answers.

Our ecommerce SEO dashboard allows you to segment your traffic by page type – category, product, or blog. In the age of LLMO, your blog and category pages will likely see the highest increase in traffic, as these pages provide the “why” and “how” that LLMs use to educate users before they reach the point of purchase.
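If you want to approximate this segmentation yourself from raw visit logs, a rough sketch follows. It rests on several assumptions: the referrer domain list is illustrative and incomplete, the `/product/` and `/product-category/` prefixes are WooCommerce's default permalink bases (yours may differ), and the `/blog/` prefix is hypothetical. It is not how the dashboard works internally.

```python
from collections import Counter
from typing import Optional
from urllib.parse import urlparse

# Assumed, non-exhaustive mapping of referrer hostnames to AI platforms.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def ai_source(referrer: str) -> Optional[str]:
    """Return the AI platform behind a referrer URL, or None if it is not one."""
    host = urlparse(referrer).hostname or ""
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRERS.get(host)


def page_type(path: str) -> str:
    """Classify a landing path using assumed URL prefixes."""
    if path.startswith("/product-category/"):
        return "category"
    if path.startswith("/product/"):
        return "product"
    if path.startswith("/blog/"):
        return "blog"
    return "other"


def ai_traffic_by_page_type(visits: list[tuple[str, str]]) -> Counter:
    """Count AI-referred visits per (platform, page type) pair."""
    counts: Counter = Counter()
    for referrer, landing_path in visits:
        source = ai_source(referrer)
        if source is not None:
            counts[(source, page_type(landing_path))] += 1
    return counts


# Hypothetical visit log: (referrer URL, landing path).
visits = [
    ("https://chatgpt.com/", "/blog/best-tents/"),
    ("https://www.perplexity.ai/search?q=tents", "/product/tent-2p/"),
    ("https://www.google.com/", "/product/tent-2p/"),
]
for key, count in ai_traffic_by_page_type(visits).items():
    print(key, count)
```

Referrer-based counts undercount AI traffic, since many AI clients strip the referrer header, so read the numbers as a floor rather than a total.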

Concrete tools to include in your stack

If I were building a WooCommerce LLMO stack from scratch today, I would prioritize a lean selection of tools that work together without creating a bloated site.

- Platform: WooCommerce.

- Technical SEO: WooCommerce SEO tools like Rank Math PRO for advanced schema and variation mapping.

- Content Research: Perplexity AI for real-time intent research combined with free SERP keyword clustering.

- Content Production: ContentGecko Managed Service to handle the planning, writing, and catalog-synced updates.

- Analytics: Google Search Console for search performance, your analytics referral reports filtered for AI referrer domains, and the ContentGecko dashboard.

- Visuals: A WooCommerce product image generator to create high-quality, contextual lifestyle images that AI search engines increasingly surface in multimodal results.

Addressing the “AI content is spam” objection

I constantly hear the concern that Google will penalize a site for using AI. The reality is that Google penalizes low-value content, regardless of whether a human or a machine wrote it. Content that is purely keyword-stuffed for traditional search will fail in an LLM world because it lacks the conversational depth required for citation.

The goal of LLMO is to produce authoritative, conversational content that genuinely helps the user. When we use automation to write a buyer’s guide, we are synthesizing catalog data into a format that provides more value than a standard product description. This is why many stores see measurable growth through AI-optimized content – it solves the user’s problem faster than a list of links ever could.

TL;DR

To build an effective LLMO tech stack for WooCommerce, move beyond basic SEO plugins. Use technical SEO tools for your schema foundation, leverage ContentGecko to automate catalog-synced content production and semantic clustering, and monitor success via citation frequency and AI referral traffic. The future of search is not about being the first link; it is about being the most trusted answer.