Adapting website architecture for LLM search

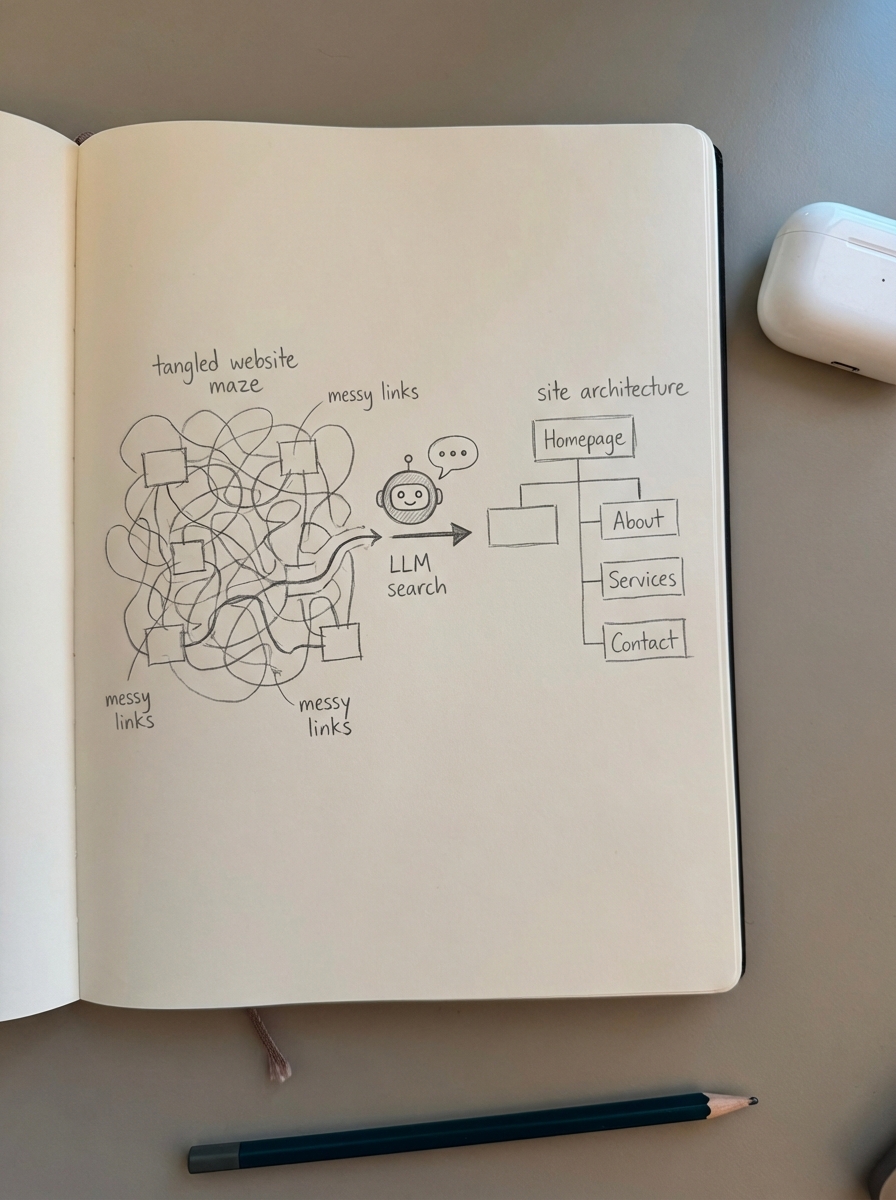

If your website architecture is a labyrinth, no large language model (LLM) will bother summarizing it for your potential customers. Visibility in modern search is no longer just about ranking in the top ten blue links; it is about being the primary source cited in a ChatGPT response or a Perplexity answer. To achieve this, you must shift your focus from keyword density to data retrieval efficiency.

The foundation of LLM optimization is traditional SEO

I often hear the objection that AI search makes traditional technical SEO obsolete. This is a mistake. In my experience, the first step in optimizing for AI search engines is getting your traditional technical foundation right. If your site is slow or plagued by crawl errors, an LLM crawler like GPTBot or OAI-SearchBot may treat your domain as low-quality noise. LLM optimization (LLMO) is not a replacement for SEO. It is a layer on top of it, and in an AI-heavy world that layer can be the difference between visibility and digital obscurity.
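A quick sanity check is to confirm that your robots.txt does not lock these crawlers out in the first place. The sketch below uses Python's standard `urllib.robotparser`. The sample rules and the `example.com` URL are made up for illustration, and `crawler_access` is a hypothetical helper name, so treat it as a starting point rather than a full audit.

```python
from urllib.robotparser import RobotFileParser

# Crawler names mentioned in this article; extend the tuple as needed.
AI_CRAWLERS = ("GPTBot", "OAI-SearchBot")

# Illustrative robots.txt content (an assumption, not a real site's file).
SAMPLE_ROBOTS = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Disallow: /cart/
"""


def crawler_access(robots_txt: str, url: str, agents=AI_CRAWLERS) -> dict:
    """Return, per crawler, whether robots.txt allows fetching the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    report = crawler_access(SAMPLE_ROBOTS, "https://example.com/guides/espresso/")
    for agent, allowed in report.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

In this sample, GPTBot is blocked site-wide while OAI-SearchBot falls through to the wildcard rules, which is exactly the kind of accidental mismatch worth catching early.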

Transitioning from traditional SEO to LLMO frameworks requires a layered approach. You still need a mobile-first design, fast load times with a Largest Contentful Paint (LCP) under 2.5 seconds, and a clean WooCommerce XML sitemap. However, the priority shifts from simply pleasing a ranking algorithm to enabling a data retriever. LLMs can process schema markup up to 10x faster than unstructured HTML. If you are not using comprehensive schema, you are essentially making the AI work harder than it needs to, which drastically reduces your chances of being cited.

Flattening site architecture for retrieval efficiency

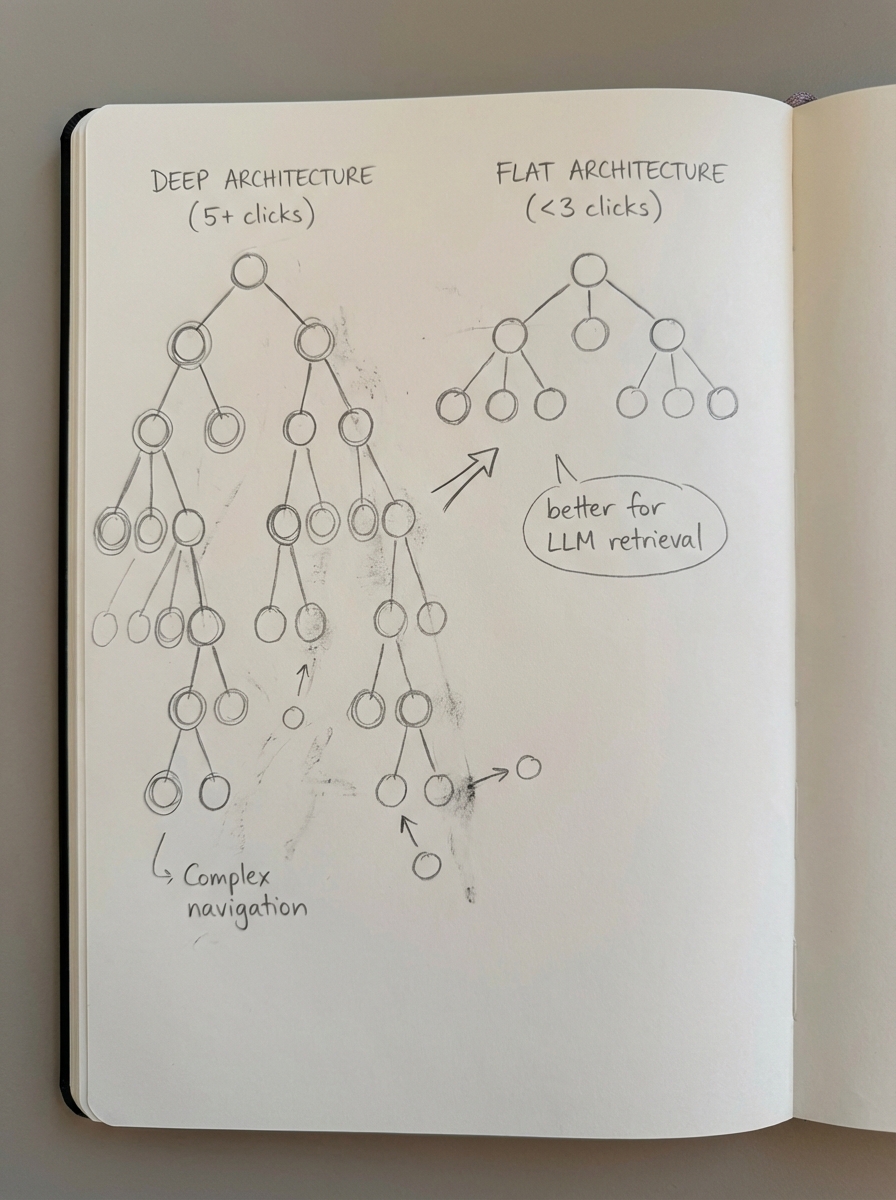

In traditional SEO, we often build deep hierarchies to manage large catalogs. For LLMs, I recommend a flatter site structure where important informational hubs are no more than three clicks from the homepage. When a retrieval-augmented generation (RAG) system answers a query, it looks for manageable “chunks” of information. If your high-value content is buried under five layers of WooCommerce faceted navigation, the crawler may never reach the depth required to index the full context of your offering.
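The three-click rule is easy to verify if you have an internal link graph from a crawl export. Here is a minimal sketch that runs a breadth-first search from the homepage and lists pages deeper than the limit. The `SAMPLE_LINKS` graph and the function names are hypothetical, and a real crawl would supply the link data.

```python
from collections import deque

# Hypothetical internal link graph: page -> pages it links to.
SAMPLE_LINKS = {
    "/": ["/coffee/", "/guides/"],
    "/coffee/": ["/coffee/espresso/"],
    "/coffee/espresso/": ["/coffee/espresso/machines/"],
    "/coffee/espresso/machines/": ["/coffee/espresso/machines/buying-guide/"],
    "/guides/": [],
}


def click_depths(links: dict, home: str = "/") -> dict:
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def too_deep(links: dict, home: str = "/", max_clicks: int = 3) -> list:
    """Pages that need more than max_clicks clicks to reach from home."""
    depths = click_depths(links, home)
    return sorted(page for page, depth in depths.items() if depth > max_clicks)


if __name__ == "__main__":
    print(too_deep(SAMPLE_LINKS))
```

Pages that never appear in the result of `click_depths` are orphans with no internal path from the homepage, which is a separate problem worth flagging.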

We have found that structuring data for LLM retrieval works best when you prioritize subfolders over subdomains. Since subfolders consolidate link equity, the entire domain becomes more “trustworthy” in the eyes of an LLM, whereas subdomains can be treated as separate sites and dilute your authority across them. This consolidation should be paired with a logical WooCommerce URL structure that includes category context without excessive nesting.
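To make the subfolder and nesting advice checkable, you could lint your URLs. The sketch below is one rough way to do it. The `main_host` value and the `max_segments` threshold of three are assumptions chosen for illustration, not fixed rules.

```python
from urllib.parse import urlparse


def audit_url(url: str, main_host: str = "example.com", max_segments: int = 3) -> list:
    """Flag URLs that live on a subdomain or nest deeper than max_segments."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    issues = []
    # Anything other than the bare domain or its www variant counts as a subdomain.
    if host not in (main_host, f"www.{main_host}"):
        issues.append("subdomain")
    # Count non-empty path segments, e.g. /coffee/espresso/ has two.
    segments = [part for part in parsed.path.split("/") if part]
    if len(segments) > max_segments:
        issues.append("deep-nesting")
    return issues


if __name__ == "__main__":
    for candidate in (
        "https://example.com/coffee/espresso-machines/",
        "https://blog.example.com/2024/05/coffee/espresso/guide/",
    ):
        print(candidate, audit_url(candidate))
```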

I also argue that most ecommerce sites should focus far more on educational category pages than individual product pages. Most stores use vague category names, but optimizing category names to be more specific and buyer-friendly helps LLMs map your products to broader user intents. A well-optimized category page provides the educational context – like buying guides and usage scenarios – that LLMs need to provide a helpful answer to a user query.

Content format changes for conversational queries

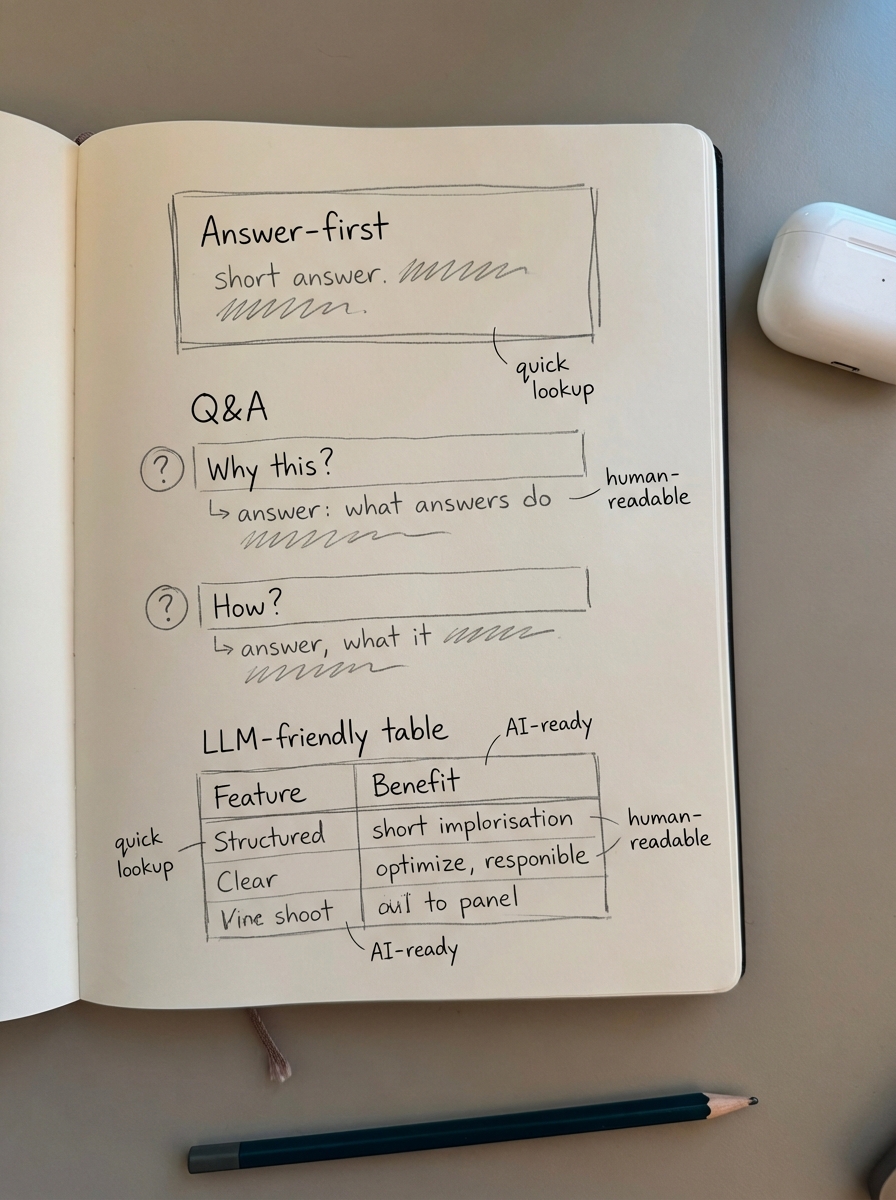

LLM search behavior is fundamentally different from traditional keyword entry. Users are no longer typing “best espresso machine”; they are asking, “What is the best espresso machine for a small kitchen under $500 that is easy to clean?” To capture this traffic, you must shift to optimizing content for conversational queries. This means moving away from generic marketing fluff and toward an “Answer-First” structure that prioritizes directness.

Strategic content format changes for LLMO should involve restructuring your blog and product pages to be highly “extractable.” You should lead with direct Q&A sections where H2 or H3 headers are formatted as natural questions, each followed by a concise answer of roughly 50 words. Furthermore, LLMs excel at processing comparison tables. A table comparing your top three SKUs by price, wattage, and warranty is far easier for a bot to synthesize than three separate paragraphs of text.
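If your product data already lives in a structured form, the comparison table can be generated rather than hand-written. This is a minimal sketch that renders a pipe-delimited table from a list of SKU records. The product names and figures in `SAMPLE_SKUS` are invented placeholders, and you would swap the output format for HTML if your theme needs it.

```python
# Invented placeholder data; replace with records from your own catalog.
SAMPLE_SKUS = [
    {"name": "Model A", "price": "$299", "wattage": "1100 W", "warranty": "1 year"},
    {"name": "Model B", "price": "$399", "wattage": "1350 W", "warranty": "2 years"},
    {"name": "Model C", "price": "$499", "wattage": "1450 W", "warranty": "3 years"},
]


def comparison_table(skus: list, columns: list) -> str:
    """Render SKU records as a pipe-delimited table, one row per SKU."""
    header = "| " + " | ".join(columns) + " |"
    divider = "| " + " | ".join("---" for _ in columns) + " |"
    rows = [
        # Missing attributes are shown as "n/a" instead of raising an error.
        "| " + " | ".join(str(sku.get(col, "n/a")) for col in columns) + " |"
        for sku in skus
    ]
    return "\n".join([header, divider, *rows])


if __name__ == "__main__":
    print(comparison_table(SAMPLE_SKUS, ["name", "price", "wattage", "warranty"]))
```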

Finally, you should stop chasing individual keywords based on third-party data, as those databases are often too small to be accurate. Instead, build your content around entity-centric clustering. If you sell coffee, your content should link entities like “Arabica beans” and “Roasting profiles.” At ContentGecko, we help merchants automate this through our WordPress connector plugin, which syncs your live inventory with your blog to ensure every guide is catalog-aware and contextually relevant.

Effective metadata and schema strategies

Metadata is the invisible architecture that tells an LLM what a page is actually about. By 2026, I believe nobody should be manually writing meta descriptions because Google and LLM-powered search tools will likely rewrite them anyway. Your time is better spent on effective metadata strategies for LLMO, specifically focusing on standardized document structures and semantic tagging.

The most critical technical element in this shift is structured schema markup. For a WooCommerce store, you need more than just basic product schema. I recommend implementing:

- FAQPage schema to explicitly map out common customer questions and their corresponding answers.

- HowTo schema for any technical guides, assembly instructions, or usage tutorials.

- Organization and sameAs properties to link your brand to established entities like social profiles, helping LLMs place you accurately within their knowledge graph.
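As a rough illustration of the schema types listed above, the sketch below builds FAQPage and Organization JSON-LD using the schema.org vocabulary. The helper names, the sample question, and the brand details are hypothetical, and how the output gets into your page templates depends on your theme or plugin.

```python
import json


def faq_jsonld(pairs: list) -> str:
    """Build FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


def organization_jsonld(name: str, url: str, same_as: list) -> str:
    """Build Organization JSON-LD with sameAs links to brand profiles."""
    data = {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Placeholder content for illustration only.
    print(faq_jsonld([("Is this machine easy to clean?", "Yes, the drip tray is removable.")]))
    print(organization_jsonld("Example Coffee Co", "https://example.com",
                              ["https://www.linkedin.com/company/example"]))
```

Each JSON string would then be embedded in the page inside a `<script type="application/ld+json">` element.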

Research indicates that sites with properly configured JSON-LD see citations appear up to four weeks faster in AI-driven tools compared to sites relying on organic crawling alone.

Monitoring and testing LLMO performance

Traditional metrics like “keyword position” are becoming less relevant as search results become personalized and dynamic. Instead, you need to conduct regular LLMO audits and testing to evaluate your citation frequency and brand mentions. This involves checking how often your brand is cited in responses from models like Perplexity, Gemini, or ChatGPT.
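One simple way to put a number on citation frequency is to collect answers to a fixed set of prompts and count how many mention your brand. The sketch below assumes you have already saved those answers as plain text. It does not call any AI service, and the brand name and sample answers are invented.

```python
import re

# Invented sample answers; in practice these come from your saved audit prompts.
SAMPLE_RESPONSES = [
    "For small kitchens, Acme Coffee sells a compact machine under $500.",
    "Popular options include several well-known Italian brands.",
    "Reviewers often recommend acme coffee for easy cleaning.",
]


def citation_rate(responses: list, brand: str) -> float:
    """Fraction of responses that mention the brand (case-insensitive)."""
    if not responses:
        return 0.0
    # Word boundaries avoid counting the brand inside a longer word.
    pattern = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    hits = sum(1 for text in responses if pattern.search(text))
    return hits / len(responses)


if __name__ == "__main__":
    rate = citation_rate(SAMPLE_RESPONSES, "Acme Coffee")
    print(f"Cited in {rate:.0%} of sampled answers")
```

Tracking this rate per model and per prompt set over time is more informative than any single snapshot, since answers vary between runs.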

You should also use tools for monitoring LLMO performance to track retrieval rates and hallucination patterns. For instance, if an AI misrepresents your pricing or specs, it is often a sign that your data architecture – such as your WooCommerce pagination or faceted URLs – is broken, leading the model to see conflicting or outdated information.
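A practical first check for this kind of conflict is to group crawled pages by SKU and look for more than one price. This is a minimal sketch assuming a crawl export with `url`, `sku`, and `price` fields. Those field names and the sample rows are assumptions for illustration.

```python
from collections import defaultdict

# Hypothetical crawl export: the same SKU reached via a faceted URL and a canonical URL.
SAMPLE_PAGES = [
    {"url": "/coffee/espresso-machine/", "sku": "ESP-100", "price": "499.00"},
    {"url": "/coffee/?filter_color=red&page=2", "sku": "ESP-100", "price": "449.00"},
    {"url": "/coffee/grinder/", "sku": "GRD-200", "price": "129.00"},
]


def conflicting_prices(pages: list) -> dict:
    """Map each SKU to its set of prices, keeping only SKUs with conflicts."""
    prices = defaultdict(set)
    for page in pages:
        prices[page["sku"]].add(page["price"])
    return {sku: values for sku, values in prices.items() if len(values) > 1}


if __name__ == "__main__":
    for sku, values in conflicting_prices(SAMPLE_PAGES).items():
        print(f"{sku} appears with multiple prices: {sorted(values)}")
```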

If your store is missing from AI answers, it is often a signal that your technical SEO checklist is incomplete. Success in this era is not just about being found; it is about being the most reliable source for the LLM to summarize.

TL;DR

To adapt your WooCommerce store for LLM search, you must prioritize structured data and retrieval efficiency over keyword density. Move toward a flat site architecture, consolidate authority using subfolders, and implement comprehensive JSON-LD schema to help bots index your data faster. Restructure your content into conversational, answer-first formats and focus on entity clustering rather than inaccurate third-party keyword data. Success in the AI era is measured by your “share of answer,” so use automation tools like ContentGecko to ensure your product catalog is always represented by high-quality, citation-worthy content.