Multilingual LLMO: Navigating the technical challenges of global AI search

Scaling a multilingual WooCommerce store used to be a massive manual burden; today, Large Language Models (LLMs) make translation incredibly cheap, but they introduce a new category of technical debt characterized by “translationese,” terminological drift, and cultural misalignment.

I have seen many merchants assume that because GPT-4o or Claude 3.7 can speak 100 languages, they can simply pipe their product catalog through an API and call it a day. In reality, without a sophisticated optimization framework, you risk creating a site that feels “uncanny” to native speakers and invisible to the generative engines that now drive product discovery. Success in global e-commerce now requires moving beyond simple word substitution toward a strategy that prioritizes semantic intent and cross-locale consistency.

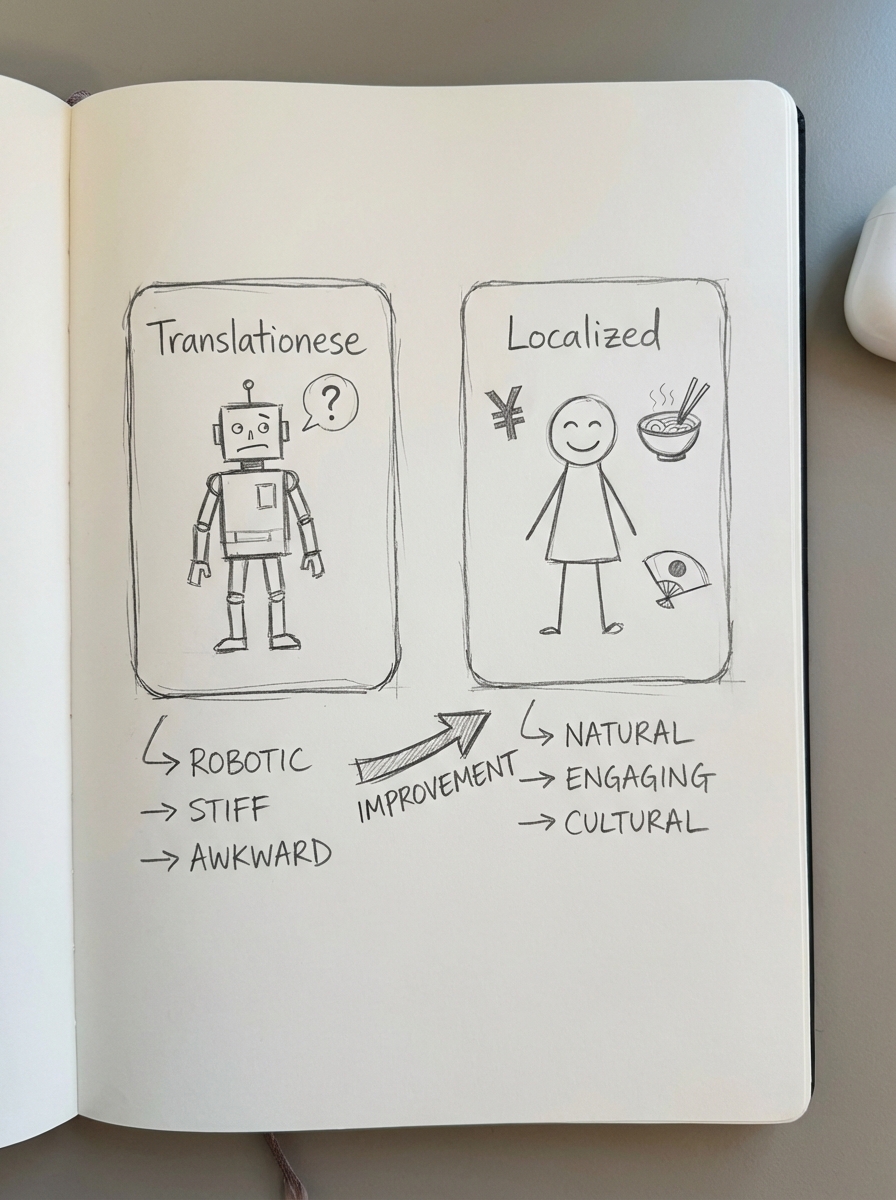

The “translationese” trap in low-resource languages

LLMs are notoriously biased toward high-resource languages like English, Spanish, and French. When you push them to generate content in lower-resource languages, such as Vietnamese, Swahili, or specific regional dialects, the models often fall back on “translationese.” This results in grammatically correct but culturally sterile text that fails to resonate with the target audience.

I have observed this lead to significant drops in conversion rates for otherwise high-performing stores. Research into LLM-generated translations across 24 regional dialects found that while models are grammatically competent, they frequently miss the mark on cultural nuance. For a WooCommerce merchant, this means your product descriptions might technically describe the item but fail to trigger the emotional “buy” signals specific to that culture. If you are expanding into a new market, I recommend first adapting your WooCommerce international SEO strategy to ensure your technical foundation is as strong as your localized content.

Terminological drift and brand consistency

One of the hardest technical challenges in multilingual LLMO is maintaining consistency across different locales. In a standard automated workflow, an LLM might translate “Shipping” as “Envío” on your homepage but use “Logística” on a product page. For a large-scale WooCommerce store with thousands of SKUs, this fragmentation ruins the user experience and confuses the AI crawlers that are trying to build a knowledge graph of your site.

Traditional Machine Translation (MT) engines use static glossaries, but LLMs require dynamic context to function effectively. Without structuring your data for LLM retrieval, the model has no centralized “source of truth” to reference during the generation process.
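One way to give the model that source of truth is to look up approved terms before each request and place them in the prompt. The sketch below is a minimal illustration, not a full retrieval system: the `GLOSSARY` dictionary, its entries, and both helper functions are hypothetical, and a production setup would more likely query a terminology store with keyword or vector search.

```python
# Hypothetical approved terminology, keyed by target locale.
GLOSSARY = {
    "es": {"Shipping": "Envío", "Free Shipping": "Envío gratis", "Cart": "Carrito"},
    "de": {"Shipping": "Versand", "Free Shipping": "Kostenloser Versand"},
}


def find_glossary_terms(text: str, locale: str) -> dict[str, str]:
    """Return the approved terms whose source form appears in the text."""
    terms = GLOSSARY.get(locale, {})
    lowered = text.lower()
    return {src: tgt for src, tgt in terms.items() if src.lower() in lowered}


def build_translation_prompt(text: str, locale: str) -> str:
    """Prepend the matching glossary entries to the translation instruction."""
    matches = find_glossary_terms(text, locale)
    rules = "\n".join(
        f'- "{src}" must be translated as "{tgt}"' for src, tgt in matches.items()
    )
    return (
        f"Translate the text below into locale '{locale}'.\n"
        f"Use these approved terms exactly:\n{rules or '- (none)'}\n\n"
        f"Text:\n{text}"
    )
```

Because only the terms that actually occur in the text are injected, the prompt stays short even when the glossary grows to thousands of entries.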

We frequently see the following failure modes in unoptimized multilingual setups:

- Inconsistent naming where different terms are used for the same SKU or feature across various languages.

- Tone misalignment, such as using a formal register in German while accidentally adopting a casual “street” tone in Japanese because the prompt didn’t specify honorific levels.

- Lost variables where LLMs “translate” code snippets, shortcodes, or pricing placeholders (like turning [price] into [precio]), which can break the functionality of your WooCommerce store.
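The lost-variable failure in particular can be guarded against mechanically: swap each shortcode or placeholder for an opaque marker before translation, then restore it afterwards. The following is a minimal sketch under stated assumptions. The regular expression, the `__PH0__` marker format, and the function names are illustrative choices, not part of WooCommerce or any translation API.

```python
import re

# Matches bracket shortcodes such as [price] or [product id="12"] and brace
# placeholders such as {sku}. This pattern is an assumption; adjust it to the
# tokens your templates actually use.
TOKEN_PATTERN = re.compile(r"\[[^\[\]]+\]|\{[^{}]+\}")


def mask_tokens(text: str) -> tuple[str, list[str]]:
    """Replace each protected token with a marker the model should copy verbatim."""
    tokens: list[str] = []

    def _swap(match: re.Match) -> str:
        tokens.append(match.group(0))
        return f"__PH{len(tokens) - 1}__"

    return TOKEN_PATTERN.sub(_swap, text), tokens


def unmask_tokens(text: str, tokens: list[str]) -> str:
    """Restore the original tokens; fail loudly if a marker was dropped or duplicated."""
    for index, token in enumerate(tokens):
        marker = f"__PH{index}__"
        if text.count(marker) != 1:
            raise ValueError(f"Marker {marker} missing or duplicated in translation")
        text = text.replace(marker, token)
    return text
```

You would call `mask_tokens` on the source text, send the masked string to the model, and pass the model's output through `unmask_tokens`. The `ValueError` gives you a clear signal to retry or route the item to review instead of publishing a broken shortcode.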

Managing hallucinations in technical specifications

Hallucinations remain the most significant risk for e-commerce brands using generative AI. An LLM might confidently “invent” a product feature in Italian that does not exist in the original English source. In my experience, hallucinations are most likely when your prompt is missing important context or when your large language model optimization settings, specifically “temperature,” are tuned too high for factual content.
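To see why temperature matters, recall that sampling temperature divides the model's raw token scores before they are turned into probabilities. The small worked example below uses made-up scores for three candidate tokens; the numbers and the function name are purely illustrative.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [value / temperature for value in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    return [value / total for value in exps]


# Hypothetical scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]
low = softmax_with_temperature(logits, 0.2)   # almost all mass on the top token
high = softmax_with_temperature(logits, 1.5)  # mass spread across all three
```

At the low setting the top-scoring token is chosen almost every time, while the high setting gives the less likely tokens a real chance of being sampled. That extra variety helps creative copy but is a liability for specifications.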

To mitigate this risk, we utilize an “LLM Council” approach. This involves a multi-stage workflow: one model performs the initial translation, a second model audits that translation against the source for factual discrepancies, and a third model localizes the tone for the specific market. Implementing this type of content quality assurance process significantly reduces the need for human intervention while keeping the content safe for publication.
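A rough sketch of that three-stage flow is shown below. It is deliberately client-agnostic: each stage is passed in as a plain function from prompt to text, so nothing here assumes a particular vendor SDK. The prompt wording, the `PASS` convention, and the `CouncilResult` type are my own illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is any function that takes a prompt and returns the model's text.
# Wire these to whichever LLM client you use; none is assumed here.
ModelFn = Callable[[str], str]


@dataclass
class CouncilResult:
    translation: str
    audit_notes: str
    approved: bool


def run_council(source: str, locale: str, translator: ModelFn,
                auditor: ModelFn, localizer: ModelFn) -> CouncilResult:
    """Translate, audit against the source, then localize tone only if the audit passes."""
    draft = translator(
        f"Translate into {locale}. Do not add or remove facts.\n\n{source}"
    )
    audit = auditor(
        "Compare SOURCE and TRANSLATION. Reply 'PASS' if every fact matches, "
        "otherwise list each discrepancy.\n\n"
        f"SOURCE:\n{source}\n\nTRANSLATION:\n{draft}"
    )
    if audit.strip().upper() != "PASS":
        # Hold the draft for correction or human review instead of publishing it.
        return CouncilResult(draft, audit, approved=False)
    final = localizer(
        f"Adjust tone for {locale} shoppers without changing any facts.\n\n{draft}"
    )
    return CouncilResult(final, audit, approved=True)
```

Running the audit before tone localization keeps the factual check close to a literal translation, where discrepancies are easier to spot. You may also want a second audit after localization if the tone pass is allowed to rewrite heavily.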

Why traditional metrics like BLEU and COMET are failing

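For years, the industry relied on BLEU (Bilingual Evaluation Understudy) scores to judge machine translation quality by measuring how much word-sequence (n-gram) overlap the output shared with a human reference. For modern LLM-based optimization, however, BLEU is a poor guide. Learned metrics such as COMET go beyond exact word matching, but in their standard form they still score the output against a human reference.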
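To make the limitation concrete, here is a simplified overlap score in the spirit of BLEU. It is not full BLEU, which combines several n-gram sizes and adds a brevity penalty; it computes clipped bigram precision only. The example sentences are invented, and English is used on both sides purely for readability.

```python
from collections import Counter


def ngram_precision(candidate: str, reference: str, n: int = 2) -> float:
    """Clipped n-gram precision: the share of candidate n-grams also found in the reference."""

    def ngrams(text: str) -> Counter:
        words = text.lower().split()
        return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))

    cand, ref = ngrams(candidate), ngrams(reference)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    overlap = sum(min(count, ref[gram]) for gram, count in cand.items())
    return overlap / total


reference = "free shipping on all orders over fifty euros"
literal = "free shipping on all orders above fifty euros"
paraphrase = "we deliver at no cost when you spend more than fifty euros"

literal_score = ngram_precision(literal, reference)        # 5 of 7 bigrams match
paraphrase_score = ngram_precision(paraphrase, reference)  # 1 of 11 bigrams match
```

The paraphrase says the same thing to a shopper, yet it shares only one bigram with the reference, so an overlap-based metric ranks it far below the near-literal version.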

LLMs often produce translations that are better than the human reference because they are more conversational or contextually aware, yet those same translations can receive a “poor” score on traditional metrics despite providing a superior user experience. Rather than relying on n-gram overlap, teams are shifting toward more sophisticated evaluation methods:

- Semantic Similarity: Using vector embeddings to measure if the core intent of the content remains identical across languages.

- LLM-as-a-Judge: Utilizing a superior model to grade translations based on specific rubrics like “Fluency,” “Accuracy,” and “Brand Voice.”

- Conversion Parity: Tracking whether the localized page converts at a similar rate to the original language version, which remains the ultimate metric for any merchant.
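The semantic similarity check from the list above usually comes down to cosine similarity between two embedding vectors. The sketch below assumes you already have vectors for the source and localized text from some multilingual embedding model, which is not specified here. The `0.85` threshold is an arbitrary placeholder that you would need to calibrate on your own content.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def flag_semantic_drift(source_vec: list[float], target_vec: list[float],
                        threshold: float = 0.85) -> bool:
    """Return True when the localized text has drifted too far from the source intent."""
    return cosine_similarity(source_vec, target_vec) < threshold
```

Pages flagged this way are good candidates for the LLM-as-a-Judge pass or a human spot check, which keeps the more expensive reviews focused on the riskiest translations.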

Best practices for multilingual LLMO

To future-proof your multilingual site, you must adapt your architecture for both human shoppers and the generative engines that index your store.

- Use “Reflection” prompts: Do not simply ask for a translation. Instruct the model to translate a product description, review its own work for cultural idioms, and finally rewrite it to sound like it was written by a local expert.

- Implement RAG-infused glossaries: Use Retrieval-Augmented Generation to feed your brand’s specific terminology into the context window before every translation task to ensure “Free Shipping” is always translated consistently.

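- Align hreflang and LLM signals: Ensure your hreflang tags and canonicals agree with each other. Every localized page should list itself and each of its alternates, the alternates should link back, and each page’s canonical should point to its own URL rather than to another language version. Search engines use these technical signals to understand the relationship between your localized pages and to serve the correct version in AI Overviews.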

- Leverage catalog-aware automation: For WooCommerce stores, your content platform must be synced with your live catalog. If a price or SKU changes in your primary language, the AI should automatically trigger an update across all localized versions.
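The hreflang alignment in the list above is easy to verify automatically once you have crawled your own pages. The sketch below assumes a hypothetical `pages` mapping, built by your crawler, from each URL to the hreflang annotations found on it. It checks the two most common mistakes: a missing self-reference and a missing return link.

```python
def find_hreflang_errors(pages: dict[str, dict[str, str]]) -> list[str]:
    """Check hreflang sets, where pages maps URL -> {language_code: alternate_url}."""
    errors: list[str] = []
    for url, alternates in pages.items():
        if url not in alternates.values():
            errors.append(f"{url}: missing self-referencing hreflang")
        for lang, alt_url in alternates.items():
            if alt_url == url:
                continue
            back = pages.get(alt_url)
            if back is None:
                errors.append(f"{url}: alternate {alt_url} ({lang}) was not crawled")
            elif url not in back.values():
                errors.append(f"{alt_url}: no return link to {url}")
    return errors


# Illustrative data: the Spanish page forgets to point back to the English one.
pages = {
    "https://example.com/en/": {
        "en": "https://example.com/en/",
        "es": "https://example.com/es/",
    },
    "https://example.com/es/": {"es": "https://example.com/es/"},
}
errors = find_hreflang_errors(pages)
```

Running a check like this as part of your publishing workflow catches broken language clusters before a crawler does.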

At ContentGecko, we simplify this complexity by syncing directly to your WooCommerce catalog. This ensures that whether you are publishing in one language or ten, your product data remains factual and your internal linking remains semantically relevant across every locale.
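As a generic illustration of catalog-aware syncing (not a description of any particular product’s internals), one common pattern is to fingerprint the source product data and compare it with the fingerprint stored when each locale was last generated. The field list, function names, and storage format below are assumptions made for the example.

```python
import hashlib
import json

# Fields whose changes should invalidate localized copy (an assumed selection).
TRACKED_FIELDS = ("sku", "name", "price", "description")


def product_fingerprint(product: dict) -> str:
    """Hash only the tracked fields so unrelated changes do not trigger retranslation."""
    payload = {field: product.get(field) for field in TRACKED_FIELDS}
    encoded = json.dumps(payload, sort_keys=True, ensure_ascii=False).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def locales_needing_update(product: dict, stored: dict[str, str]) -> list[str]:
    """stored maps locale -> fingerprint of the source at that locale's last generation."""
    current = product_fingerprint(product)
    return sorted(locale for locale, seen in stored.items() if seen != current)
```

When a price or description changes in the primary language, every locale whose stored fingerprint no longer matches is returned and can be queued for regeneration.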

TL;DR

- Low-resource languages carry high risks for cultural “uncanniness”; utilize “LLM Council” audits to maintain quality.

- Prevent terminology drift across locales by using RAG systems and structured metadata.

- Reduce hallucinations by keeping temperature settings low and providing the AI with sufficient context through your prompts.

- Move away from outdated BLEU scores and adopt semantic similarity and LLM-based grading to judge quality.

- Use catalog-synced tools to ensure that when your product attributes change, your multilingual blog and category pages update automatically.