Content format changes for LLMO

The search landscape is shifting from a list of blue links to synthesized AI answers, meaning your visibility now depends on being cited as a primary source by AI answer engines like Perplexity, ChatGPT, and Google’s AI Overviews. To stay relevant, you must transition from a keyword-first strategy to an intent-first strategy that prioritizes semantic depth and machine readability.

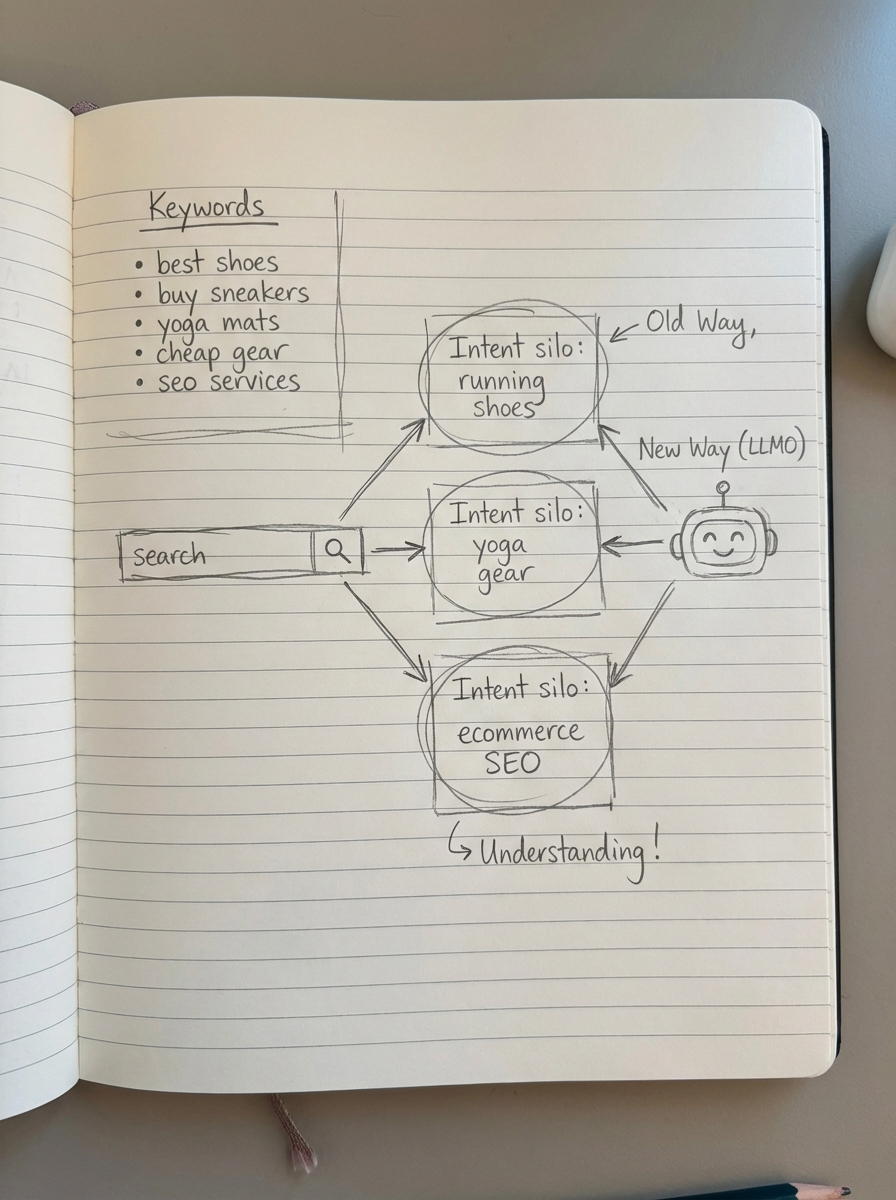

The shift from keyword lists to intent-based roadmaps

In traditional SEO, we used to build roadmaps based on search volume and competition metrics. In the era of Large Language Model Optimization (LLMO), search volume is becoming a secondary indicator. LLMs prioritize content that comprehensively solves a specific user problem or answers a complex, multi-part question rather than just matching a high-volume string of text.

I’ve found that the most effective roadmaps now focus on “intent silos” rather than isolated keywords. For example, instead of just targeting “running shoes,” your roadmap should cluster content around specific needs like “best running shoes for flat feet under $200” or “how to prevent shin splints while marathon training.” These mid-funnel, conversational queries are exactly what LLM search engines look for when synthesizing a comprehensive answer.

We recommend using a free SERP keyword clustering tool to group your ideas logically. This ensures you build a cohesive knowledge base that establishes topical authority, rather than duplicate content that confuses the AI. Traditional keyword volume data often understates the real opportunity; focusing on intent ensures you capture the conversational traffic that LLMs are built to serve.

Adapting content formats for AI synthesis

AI models don’t “read” like humans; they parse data for entities, relationships, and direct answers. If your content is buried in 3,000 words of fluff, an LLM is likely to skip it in favor of a competitor who gets to the point quickly. By 2026, hand-writing meta descriptions is a poor use of time, since Google often rewrites them anyway, so your focus must be on the actual structure of the page content.

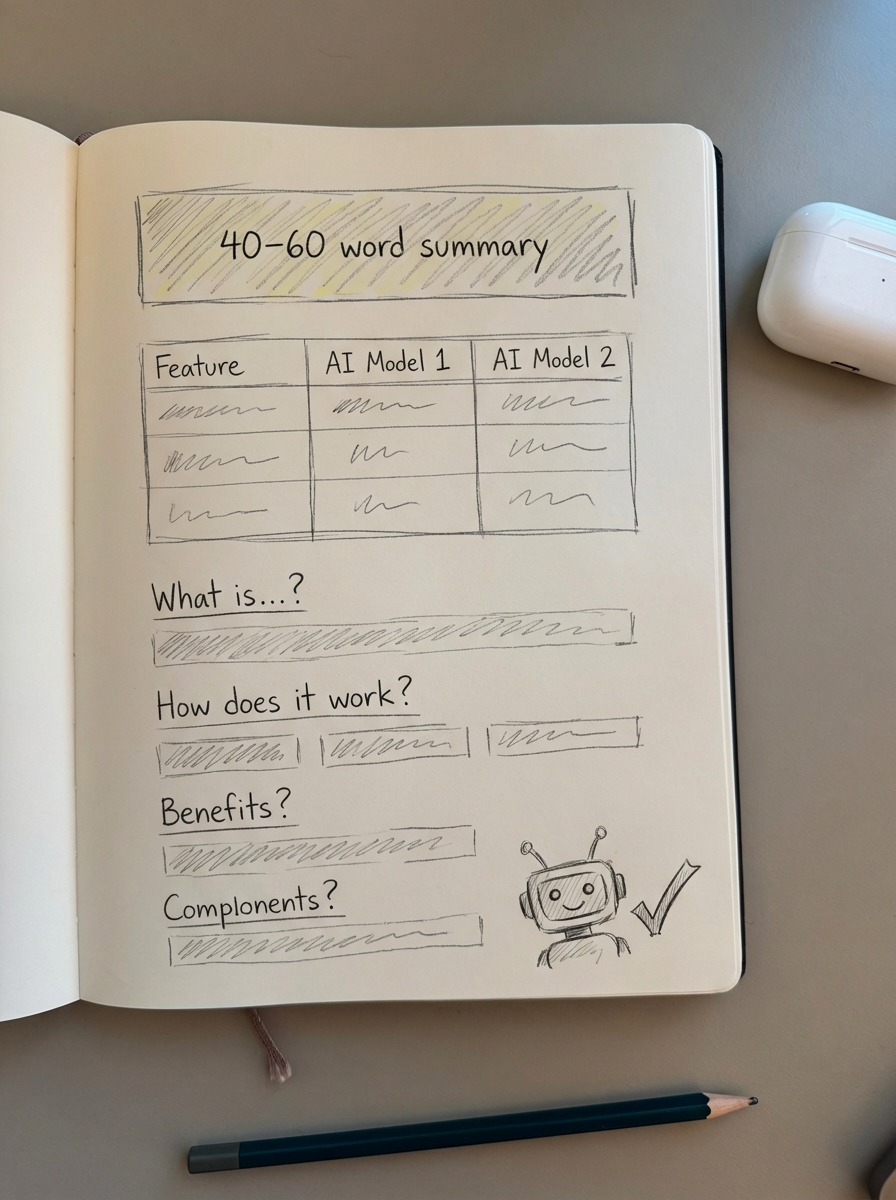

The 40-60 word summary

Every major section of your content (H2s and H3s) should ideally start with a 40–60 word summary that directly answers the heading’s question. This “bottom-line-up-front” approach mirrors how AI models extract snippets for their answers. If I can’t summarize the point in two sentences, the AI probably won’t be able to summarize it for the user either. This structure helps the model identify the core claim immediately before it looks for supporting evidence in the subsequent paragraphs.
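A quick way to audit this is to count the words in the first paragraph under each heading. The sketch below assumes you have already split a page into a mapping of heading to body text; the function names and the input shape are hypothetical, not part of any particular tool.

```python
def word_count(text: str) -> int:
    """Count whitespace-separated words in a block of text."""
    return len(text.split())


def check_summaries(
    sections: dict[str, str], low: int = 40, high: int = 60
) -> dict[str, tuple[int, bool]]:
    """Map each heading to (word count of its first paragraph, within range?).

    `sections` is an assumed input shape: heading text -> section body,
    with paragraphs separated by a blank line.
    """
    report = {}
    for heading, body in sections.items():
        # The opening summary is taken to be everything before the first blank line.
        first_paragraph = body.strip().split("\n\n", 1)[0]
        count = word_count(first_paragraph)
        report[heading] = (count, low <= count <= high)
    return report
```

Sections flagged as out of range are candidates for a tighter opening paragraph; the 40 and 60 defaults simply mirror the guideline above and can be adjusted.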

Comparison tables and structured data

LLMs love structured data because it eliminates ambiguity. If you are comparing three WooCommerce plugins or five pairs of boots, don’t just write paragraphs. Use a comparison table with clear <th> and <td> tags. Research on how to get traffic from ChatGPT indicates that pages with clear comparison tables are cited significantly more often by research-oriented AI engines like Perplexity. A table lets the model “see” the comparative relationships between features and prices without having to infer them from prose.
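As a minimal sketch of what that markup looks like, the helper below renders a list of rows into a plain HTML table with header and data cells. The function name is hypothetical and the plugin names and prices in the usage note are placeholders, not real products.

```python
from html import escape


def comparison_table(columns: list[str], rows: list[list[str]]) -> str:
    """Render a plain HTML comparison table with <th> headers and <td> cells."""
    head = "".join(f"<th>{escape(col)}</th>" for col in columns)
    body_rows = []
    for row in rows:
        if len(row) != len(columns):
            raise ValueError("each row needs exactly one cell per column")
        # Escape cell text so characters like & or < do not break the markup.
        cells = "".join(f"<td>{escape(cell)}</td>" for cell in row)
        body_rows.append(f"<tr>{cells}</tr>")
    return (
        "<table>"
        f"<thead><tr>{head}</tr></thead>"
        f"<tbody>{''.join(body_rows)}</tbody>"
        "</table>"
    )
```

Calling `comparison_table(["Plugin", "Price"], [["Plugin A", "$49"], ["Plugin B", "$79"]])` returns a single table string you can paste into a post or template.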

Conversational Q&A structure

Shift your headings from static phrases like “Product Features” to conversational questions such as “What are the benefits of [Product] for [Target Audience]?” This format aligns with how users interact with chatbots. We’ve seen that adapting website architecture to follow a Q&A hierarchy dramatically improves content retrieval rates in RAG (Retrieval-Augmented Generation) systems. It effectively prepares your site to be the “knowledge base” that the AI pulls from during a live chat session.

Leveraging your WooCommerce catalog for LLMO

For ecommerce stores, your product catalog is your biggest asset for LLMO. However, 68% of WooCommerce merchants struggle with thin product pages and duplicate content, both of which work against being cited by AI engines. If the AI sees the same generic description everywhere, it has no reason to prioritize your domain as a citation source.

To surface in AI answers, your blog content must be “catalog-aware.” This means your how-to guides and listicles shouldn’t just link to products; they should pull real-time data like price, availability, and specific attributes such as “waterproof” or “sustainable.” We often see better results when stores optimize their category pages more heavily than individual product pages, as categories provide the broader context LLMs need to categorize your brand.

At ContentGecko, we solve this by using a WordPress connector plugin that syncs your WooCommerce inventory with your content engine. This ensures that when an AI engine looks for the “best eco-friendly yoga mats,” your content provides accurate, up-to-date data that makes it an easy choice for the AI to recommend. Providing this factual grounding prevents the LLM from hallucinating incorrect prices or stock status.
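To make “catalog-aware” concrete, here is a small illustration that turns a product record into a factual one-line mention for a blog post. It is a sketch only and not how the connector plugin works: it assumes the record is a dict shaped like a WooCommerce REST API product object (`name`, `price`, `stock_status`, `attributes` with `options`), and the function name, currency handling, and sample values are hypothetical.

```python
# Human-readable labels for WooCommerce stock_status values.
STATUS_LABELS = {
    "instock": "in stock",
    "outofstock": "out of stock",
    "onbackorder": "on backorder",
}


def product_blurb(product: dict, currency_symbol: str = "$") -> str:
    """Build a one-line, catalog-backed product mention.

    The currency symbol is passed in by the caller rather than read
    from the product record.
    """
    availability = STATUS_LABELS.get(
        product.get("stock_status", ""), "availability unknown"
    )
    # Flatten attribute options such as "waterproof" or "sustainable".
    options = [
        option
        for attribute in product.get("attributes", [])
        for option in attribute.get("options", [])
    ]
    details = f"{currency_symbol}{product['price']}, {availability}"
    if options:
        details += "; " + ", ".join(options)
    return f"{product['name']} ({details})"
```

Regenerating these mentions whenever the catalog changes keeps prices and stock status in your guides in line with the store.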

Technical foundations: Schema as the invisible architecture

If content is the “what,” schema markup is the “how” for LLMs. You cannot neglect structured data for LLM retrieval if you want to be cited as an authority. Schema provides the explicit signals that allow machines to understand the context of your content without the risk of misinterpretation.

- Product Schema: This is essential for ecommerce. Using JSON-LD for WooCommerce structured data can increase CTR by 23% in rich results by providing the AI with clear pricing and availability signals.

- FAQ Schema: This serves as a direct signal to LLMs about the specific questions your page is qualified to answer.

- Review Schema: Visible star ratings and verified purchase indicators help LLMs determine the “trustworthiness” of your recommendations, which is a key factor in citation selection.

The goal is to provide an effective metadata strategy that removes all guesswork for the LLM crawler, ensuring your products are correctly mapped to user needs.
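For reference, the Product and FAQ types above can be expressed as JSON-LD along these lines. This is a minimal sketch using schema.org’s `Product`, `Offer`, `FAQPage`, `Question`, and `Answer` types; the helper names are hypothetical, the sample values are placeholders, and a real store would usually let its SEO plugin or theme emit this markup.

```python
import json


def product_jsonld(name: str, description: str, price: float,
                   currency: str, in_stock: bool) -> dict:
    """Build a schema.org Product object with a single Offer."""
    availability = "InStock" if in_stock else "OutOfStock"
    return {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "description": description,
        "offers": {
            "@type": "Offer",
            "price": f"{price:.2f}",
            "priceCurrency": currency,
            "availability": f"https://schema.org/{availability}",
        },
    }


def faq_jsonld(pairs: list[tuple[str, str]]) -> dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def as_script_tag(data: dict) -> str:
    """Wrap JSON-LD in the script tag that goes into the page HTML."""
    # Escape "</" so text inside the JSON cannot close the script tag early.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return f'<script type="application/ld+json">{payload}</script>'
```

Review markup follows the same pattern with its own schema.org types; whichever types you emit, the values should match what is visible on the page.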

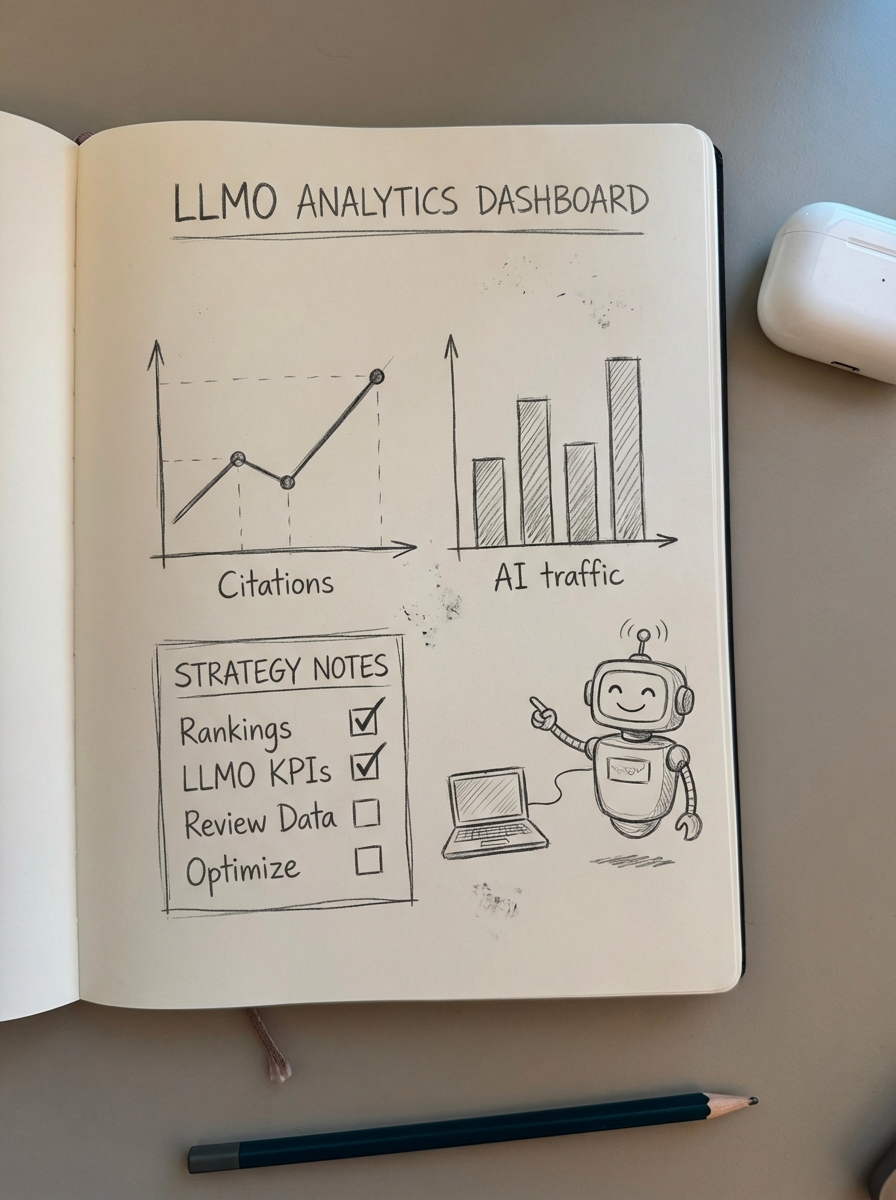

Testing and monitoring LLMO performance

Traditional rank tracking doesn’t tell the whole story anymore. You might rank #1 for a keyword but never appear in the Google AI Overview for that same query. Because many SEO tools reward gamified scores rather than actual results, you need to shift your focus to how models are actually synthesizing your information.

You should implement LLMO testing by monitoring these specific KPIs:

- Citation Frequency: Track how often your brand or specific URLs are mentioned as sources in ChatGPT, Claude, or Perplexity responses.

- Content Retrieval Rate: Measure how often your content is used to ground an AI’s answer, particularly in RAG-based systems.

- AI Referral Traffic: Use UTM parameters or specialized ecommerce SEO dashboards to track clicks coming specifically from generative AI engines.

By comparing traditional SEO vs. LLMO metrics, you can see where your content is winning in the “blue link” world versus where it is successfully feeding the AI ecosystem.
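Two of these KPIs are simple enough to sketch in a few lines: classifying referral traffic by referrer hostname, and computing citation frequency from a sample of AI answers you have collected. The hostname list below is an assumption to verify against your own analytics, and the function names and input shapes are hypothetical.

```python
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames for generative AI engines; check your own logs.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "claude.ai": "Claude",
}


def _host(url: str) -> str:
    """Return the lowercase hostname of a URL without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def classify_referrer(referrer_url: str) -> Optional[str]:
    """Name the AI engine a visit came from, or None if it is not in the list."""
    return AI_REFERRER_HOSTS.get(_host(referrer_url))


def citation_frequency(answers: list[list[str]], domain: str) -> float:
    """Share of sampled AI answers that cite at least one URL on `domain`.

    `answers` holds one list of cited URLs per sampled answer.
    """
    if not answers:
        return 0.0
    hits = sum(
        any(_host(url) == domain or _host(url).endswith("." + domain) for url in cited)
        for cited in answers
    )
    return hits / len(answers)
```

Running `citation_frequency` over the same prompt set each month gives you a trend line to set beside your traditional rankings.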

TL;DR

- Move to intent clusters: Stop chasing individual keywords and start building thematic authority with intent-based silos that solve user problems.

- Format for machines: Use the 40-60 word summary rule, include comparison tables, and adopt a conversational Q&A heading structure for better parsing.

- Sync your catalog: Ensure your blog content is aware of your WooCommerce inventory to provide the factual grounding AI models crave for recommendations.

- Double down on Schema: Use comprehensive JSON-LD to create an invisible map for LLM crawlers, prioritizing product and FAQ types.

- Track citations: Shift your reporting from simple rankings to AI mentions and referral traffic from generative engines to measure true visibility.