Measuring user intent in LLMO search experiences

User intent in the era of AI search is no longer a static keyword category; it is a dynamic, conversational journey that requires a shift from matching strings to understanding semantic context. In LLM-based search, intent is inferred through multi-step reasoning and dialogue, so your content has to prove its relevance to a model reasoning about the question, not just to a crawler matching keywords.


The fundamental shift from keywords to conversational contexts

In traditional SEO, we have spent a decade classifying intent into four buckets: informational, navigational, transactional, and commercial. These buckets still exist, but LLM-powered search experiences such as Perplexity or Google’s AI Overviews process them very differently. Traditional search returns a ranked list of links for a query; large language model optimization (LLMO) instead targets systems that hold a dialogue and synthesize a single answer.

For example, a traditional search for “best running shoes” might show a list of top-ten articles. An AI-assisted search for “What are the most cushioned running shoes for marathon training if I have flat feet?” requires the model to understand the relationship between “cushioning,” “marathon distance,” and the physical entity of “flat feet.”

I have seen WooCommerce merchants struggle with this transition because their product pages are often too thin to satisfy this level of inquiry. If your content does not explain the “why” behind a product’s features, an LLM is likely to pass over your page in favor of a site that covers those nuances. Closing this gap matters: research suggests AI Overviews can reduce clicks to websites by up to 34.5%, so how we present information has to change.

Defining intent categories in the AI era

Comparing traditional SEO with LLMO techniques shows that intent is becoming more agentic: users expect the AI to do the heavy lifting of research and comparison. This produces three evolving categories of intent that go beyond the basic transactional and informational labels:


- Investigative intent: The user is looking for a solution to a multi-variable problem. They might ask, “Is this coffee machine better than others under $200 for a small office?” This combines price constraints, a specific use-case, and a need for comparative analysis.

- Evaluative intent: Users provide specific constraints and expect the AI to filter options. In ecommerce, this is where optimizing content for conversational queries pays off, because the user asks the AI to find a product that reconciles conflicting needs, such as a professional-looking jacket that is durable enough for hiking.

- Procedural intent: This is a highly specific “how-to” request. Instead of a general guide, a user might ask how to install a specific WooCommerce plugin and sync it with their inventory without breaking a custom theme. To answer, the AI needs clearly structured content it can retrieve and turn into a step-by-step resolution.
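To make these categories measurable, you first need to tag the queries you sample. The sketch below is a deliberately simple, rule-based tagger; the cue patterns in `INTENT_CUES` are hypothetical examples rather than a standard taxonomy, and a production setup would more likely use a trained classifier or an LLM prompt.

```python
import re

# Hypothetical cue patterns per intent category (illustrative only, not a standard taxonomy).
INTENT_CUES: dict[str, list[str]] = {
    "procedural": [r"\bhow (?:do|to|can|should)\b", r"\binstall", r"\bset ?up\b", r"\bsync\b", r"\bconfigure\b"],
    "investigative": [r"\bbetter than\b", r"\bvs\.?\s", r"\bcompare", r"\bunder \$\d+"],
    "evaluative": [r"\bthat (?:is|are)\b", r"\benough\b", r"\bwithout\b", r"\bbut also\b", r"\bfind (?:me )?an?\b"],
}


def intent_scores(query: str) -> dict[str, int]:
    """Count how many cue patterns of each category appear in the query."""
    text = query.lower()
    return {
        intent: sum(1 for pattern in patterns if re.search(pattern, text))
        for intent, patterns in INTENT_CUES.items()
    }


def classify_intent(query: str) -> str:
    """Return the highest-scoring category, or 'unclassified' when no cue matches."""
    scores = intent_scores(query)
    best = max(scores, key=scores.get)  # ties go to the first category listed in INTENT_CUES
    return best if scores[best] > 0 else "unclassified"
```

For example, `classify_intent("How do I install a WooCommerce plugin and sync it with my inventory without breaking my theme?")` scores highest on the procedural cues. Because ties resolve to whichever category is listed first, order the dictionary by how you want ambiguous queries handled.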

How to measure user intent in AI-assisted search

Measuring performance in LLMO is notoriously difficult because traditional ranking data is incomplete. You are no longer just tracking a position on a page; you are tracking your mention share and citation frequency within a generated response.
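Mention share can be approximated by running a fixed set of prompts through the assistants you care about, saving the answer text, and counting which brands appear. This is a minimal sketch, assuming you already have the answers as plain strings; matching is a simple case-insensitive whole-word search, so it will miss misspellings and product-name variants.

```python
import re
from collections import Counter


def mention_share(answers: list[str], brands: list[str]) -> dict[str, float]:
    """Each brand's share of all brand mentions across a sample of AI answers.

    A brand is counted at most once per answer, so one long answer cannot dominate.
    """
    counts: Counter = Counter()
    for text in answers:
        for brand in brands:
            if re.search(rf"\b{re.escape(brand)}\b", text, flags=re.IGNORECASE):
                counts[brand] += 1
    total = sum(counts.values())
    return {brand: (counts[brand] / total if total else 0.0) for brand in brands}
```

Usage with placeholder brand names: `mention_share(saved_answers, ["Acme Coffee", "BrewCo", "JavaJet"])`. Tracking the same prompt set week over week matters more than the absolute number, since answers vary between runs.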

Citation and attribution frequency

The gold standard for LLMO is whether the AI cites your domain as a source for its answer. Tools for monitoring LLMO performance focus on how often your brand is mentioned as a recommended solution. If ChatGPT or Perplexity frequently cites your blog post when answering a technical question, you have successfully captured that specific user intent.
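Citation frequency can be tracked the same way, using the source links an engine displays next to its answer. In this sketch the `SampledAnswer` record and the engine labels are hypothetical; an answer counts as a citation if any linked URL sits on your domain or one of its subdomains, and the result is the share of sampled answers per engine.

```python
from collections import defaultdict
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class SampledAnswer:
    """One AI answer captured while tracking a fixed prompt set (hypothetical record format)."""
    engine: str            # e.g. "perplexity", "chatgpt"
    prompt: str
    cited_urls: list[str]  # source links shown alongside the answer


def domain_of(url: str) -> str:
    """Lower-cased hostname without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host.removeprefix("www.")


def cites(answer: SampledAnswer, domain: str) -> bool:
    """True if any cited URL is on the domain or one of its subdomains."""
    return any(d == domain or d.endswith("." + domain) for d in map(domain_of, answer.cited_urls))


def citation_frequency(answers: list[SampledAnswer], domain: str) -> dict[str, float]:
    """Share of sampled answers, per engine, that cite the domain at least once."""
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for answer in answers:
        totals[answer.engine] += 1
        if cites(answer, domain):
            hits[answer.engine] += 1
    return {engine: hits[engine] / totals[engine] for engine in totals}
```

Matching on the registered domain rather than exact URLs means a citation to any product, category, or blog page counts, which fits the question "did the AI use us as a source at all?"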

Content retrieval and relevance scores

In LLMO testing, we also look at retrieval rates: how often an LLM’s Retrieval-Augmented Generation (RAG) system pulls your content into its context window to generate a response. Because providers do not expose their retrieval pipelines, this rate is usually estimated from proxies rather than measured directly. High retrieval rates suggest that your entity-based keyword research is working and that the AI treats your content as a factual authority on the topic.
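One practical proxy is your own server access log: AI search products fetch pages with identifiable user agents. The sketch below assumes combined-format log lines and a hand-maintained list of user-agent tokens (`AI_RETRIEVAL_AGENTS`), both of which are assumptions to check against your server setup and each vendor's current crawler documentation. It reports "coverage": the share of pages you track that an AI agent fetched at least once.

```python
import re
from collections import Counter

# Assumption: user-agent tokens associated with AI search indexing and on-demand assistant
# fetches. Verify against each vendor's current crawler documentation before relying on it.
# Training-only crawlers (such as GPTBot) are left out on purpose.
AI_RETRIEVAL_AGENTS = ("ChatGPT-User", "OAI-SearchBot", "PerplexityBot", "Perplexity-User")

# Pulls path, status code and user agent out of a combined-format access log line.
LOG_LINE = re.compile(
    r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def ai_fetch_counts(log_lines: list[str]) -> Counter:
    """Count successful (HTTP 200) fetches per path made by the assumed AI agents."""
    counts: Counter = Counter()
    for line in log_lines:
        match = LOG_LINE.search(line)
        if match is None or match["status"] != "200":
            continue
        if any(token in match["agent"] for token in AI_RETRIEVAL_AGENTS):
            counts[match["path"]] += 1
    return counts


def retrieval_coverage(counts: Counter, tracked_paths: list[str]) -> float:
    """Share of tracked pages that an AI agent fetched at least once in the log window."""
    if not tracked_paths:
        return 0.0
    fetched = sum(1 for path in tracked_paths if counts[path] > 0)
    return fetched / len(tracked_paths)
```

A fetch is not proof that the page made it into a generated answer, so treat this as an upper bound and read it alongside citation frequency.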

Intent-driven conversion paths

Measuring conversion rates from LLMO traffic requires looking at how users behave once they click through from an AI summary. These users are often further down the funnel because the AI has already done the preliminary research for them. I recommend using GA4 to segment traffic from AI referrers and compare its engagement metrics with traditional search. Combining Google Analytics and Search Console data then shows which landing pages attract these high-intent AI referrals and which search queries those same pages already rank for.
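The segmentation itself comes down to a pattern match on the session source. The sketch below works on rows exported from analytics; the `SessionRow` fields and the list of AI referrer hosts are assumptions, and a similar regex could likely be reused as a condition in a GA4 custom channel group.

```python
import re
from dataclasses import dataclass

# Assumption: referrer hosts for common AI assistants; extend as new ones appear.
AI_SOURCE = re.compile(
    r"^(?:chatgpt\.com|chat\.openai\.com|(?:www\.)?perplexity\.ai|gemini\.google\.com"
    r"|copilot\.microsoft\.com|claude\.ai)$"
)


@dataclass
class SessionRow:
    """One session from an analytics export (hypothetical field names)."""
    source: str      # session source, e.g. "chatgpt.com" or "google"
    engaged: bool    # whether the session counted as engaged
    converted: bool  # whether the session included a purchase


def segment(source: str) -> str:
    """Label a session source as an AI referral or everything else."""
    return "ai_referral" if AI_SOURCE.match(source.lower()) else "other"


def segment_metrics(rows: list[SessionRow]) -> dict[str, dict[str, float]]:
    """Engagement and conversion rate for each segment."""
    grouped: dict[str, list[SessionRow]] = {}
    for row in rows:
        grouped.setdefault(segment(row.source), []).append(row)
    return {
        name: {
            "engagement_rate": sum(r.engaged for r in group) / len(group),
            "conversion_rate": sum(r.converted for r in group) / len(group),
        }
        for name, group in grouped.items()
    }
```

The pattern is anchored at both ends so that look-alike hosts such as `notchatgpt.com` stay in the "other" segment.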

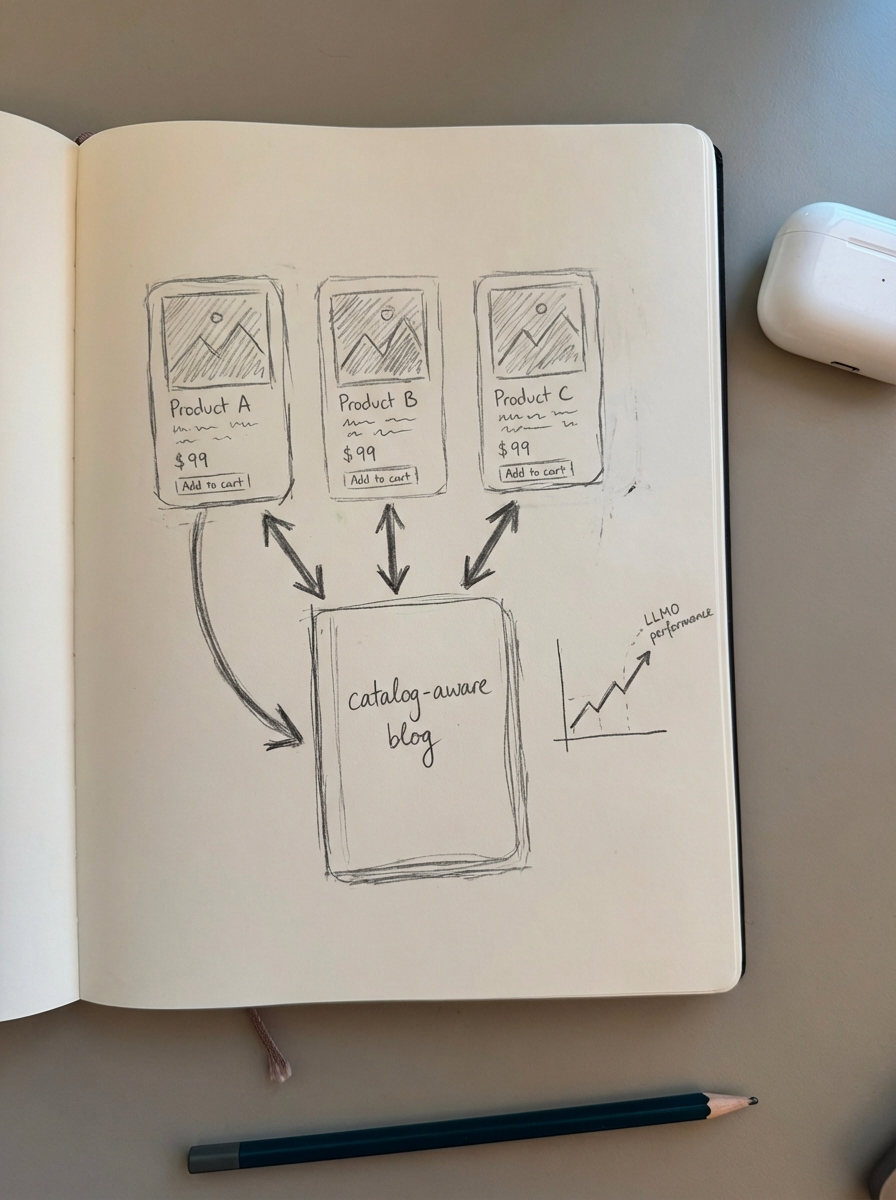

Why WooCommerce stores need catalog-aware intent modeling

Most WooCommerce sites suffer from a data gap. The products exist in the database, but the context – the reasons why a person would buy them – is missing from the blog content. I’ve audited stores where the category pages were just a grid of photos with no context; when we tested these against an LLM, the model couldn’t recommend specific products because the usage scenarios were absent.

Adapting website architecture for LLM search involves more than just adding schema. It requires a blog that is catalog-aware. If your inventory changes, your content should reflect that. For example, if a specific shoe for flat feet goes out of stock, your informational guide should point to the next best alternative that is currently available.
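The out-of-stock fallback can be expressed as a small selection rule. This is a sketch, not how any particular plugin works: the `Product` shape is hypothetical (not the WooCommerce REST schema), attributes are plain tags, and "next best" is defined here as the in-stock product with the highest Jaccard overlap of tags.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Product:
    """Minimal catalog record (hypothetical shape, not the WooCommerce REST schema)."""
    sku: str
    name: str
    in_stock: bool
    attributes: set[str] = field(default_factory=set)


def similarity(a: Product, b: Product) -> float:
    """Jaccard overlap of attribute tags, e.g. {"cushioned", "flat-feet", "marathon"}."""
    union = a.attributes | b.attributes
    return len(a.attributes & b.attributes) / len(union) if union else 0.0


def recommend(featured_sku: str, catalog: list[Product]) -> Optional[Product]:
    """Return the featured product if in stock, else the most similar in-stock alternative."""
    by_sku = {p.sku: p for p in catalog}
    featured = by_sku.get(featured_sku)
    if featured is None:
        return None
    if featured.in_stock:
        return featured
    candidates = [p for p in catalog if p.in_stock and p.sku != featured.sku]
    if not candidates:
        return None
    return max(candidates, key=lambda p: similarity(featured, p))
```

With a hypothetical catalog where a cushioned flat-feet marathon shoe is out of stock, `recommend` picks an in-stock shoe tagged "cushioned" and "flat-feet" over an unrelated trail shoe, which is the substitution the guide should surface.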

At ContentGecko, we solve this by syncing our content platform directly with your catalog via our WordPress connector plugin. When we plan content, we look for the intent-based questions your customers are asking and match them to your real-time SKUs. This ensures that when an AI retrieves your content, it is serving up accurate, transactional information. You can track this performance through an ecommerce SEO dashboard that breaks down how different page types – like categories and blog posts – perform in this new search landscape.

TL;DR

LLM search transforms user intent from simple keywords into complex, conversational contexts. To measure success, move away from traditional rankings and focus on citation frequency, content retrieval rates, and SEO KPIs specific to AI referrals. For WooCommerce merchants, the key is bridging the gap between product data and educational content through a catalog-synced blog strategy that reflects real-time inventory and expert context.