Measuring the ROI of LLM content and SEO optimization

Most ecommerce leaders treat Large Language Model (LLM) projects as a cost center rather than a strategic performance lever. To justify LLM spend to stakeholders, you must move beyond tracking “tokens used” and start measuring two things: the savings against your manual production costs and the incremental revenue driven by AI-mediated discovery.

The market shift is already here. Traditional search volume is projected to decline by 25% by 2026, while AI-generated answer traffic has seen a 1,200% increase in less than a year. If you are still evaluating your SEO budget based solely on blue links in Google, you are missing the most significant shift in digital commerce since the smartphone. I have found that the merchants who win in this environment are those who stop viewing AI as a “cool tool” and start viewing it as a fundamental shift in their customer acquisition cost (CAC) structure.

The framework for measuring LLM ROI

In my experience, the biggest mistake WooCommerce merchants make is failing to account for the “human-in-the-loop” costs. You cannot simply look at an OpenAI or Anthropic invoice and call it your total cost of ownership. A pragmatic ROI calculation must include three distinct pillars that address both efficiency and top-line growth.

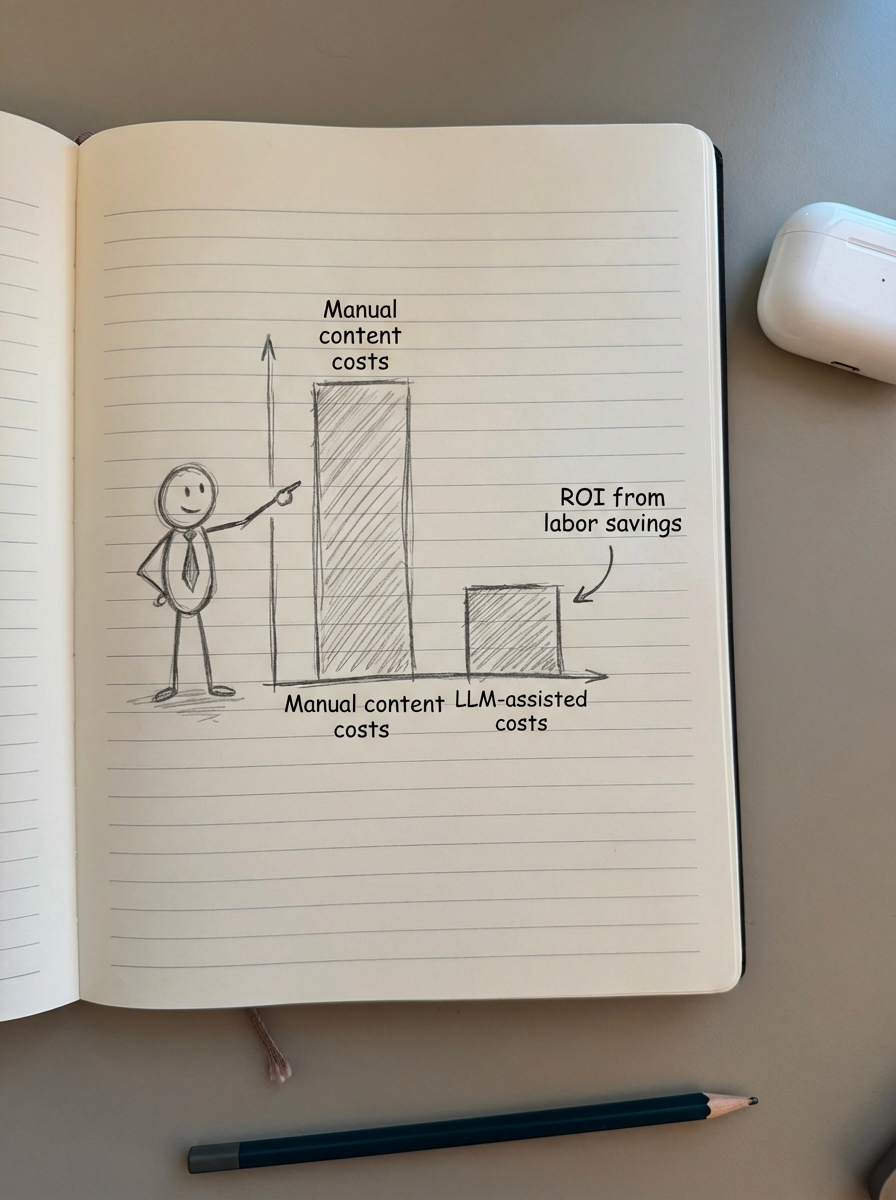

Labor efficiency and content velocity

The most immediate ROI from SEO content automation is the radical reduction in manual overhead. Businesses implementing optimized LLM workflows report a 73% decrease in manual content work and a 50–70% reduction in content creation time.

You should calculate your “Base Labor Cost” – the hours required to write a product guide multiplied by your team’s hourly rate – versus your “LLM Augmented Cost,” which includes inference fees plus the cost of roughly 15 minutes of human review per piece. For most of our clients, the gap between these two numbers alone justifies the technology spend before a single visitor hits the site. We often see teams go from publishing five articles a month to more than fifty without adding headcount.
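The comparison is simple arithmetic. Below is a minimal Python sketch; the inputs (4 writing hours per guide, a $50 hourly rate, $0.40 of inference per page) are illustrative placeholders rather than benchmarks, and `PageCostInputs` and the helper functions are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class PageCostInputs:
    """Per-page cost drivers (illustrative field names, not a standard model)."""
    writing_hours: float        # hours to write one product guide manually
    hourly_rate: float          # fully loaded team cost per hour, in dollars
    inference_cost: float       # LLM API spend per page, in dollars
    review_minutes: float = 15  # human review time per AI-drafted page


def base_labor_cost(x: PageCostInputs) -> float:
    """Manual cost per page: writing hours times hourly rate."""
    return x.writing_hours * x.hourly_rate


def llm_augmented_cost(x: PageCostInputs) -> float:
    """AI-assisted cost per page: inference fees plus the reviewer's time."""
    return x.inference_cost + (x.review_minutes / 60) * x.hourly_rate


def monthly_savings(x: PageCostInputs, pages_per_month: int) -> float:
    """Savings from producing the same page count with the LLM workflow."""
    return pages_per_month * (base_labor_cost(x) - llm_augmented_cost(x))


if __name__ == "__main__":
    inputs = PageCostInputs(writing_hours=4.0, hourly_rate=50.0, inference_cost=0.40)
    print(f"Manual:    ${base_labor_cost(inputs):.2f} per page")
    print(f"Augmented: ${llm_augmented_cost(inputs):.2f} per page")
    print(f"Savings at 50 pages/month: ${monthly_savings(inputs, 50):,.2f}")
```

With these placeholder inputs, a manual page costs $200.00 and an augmented page $12.90; the reviewer's time, not the API invoice, dominates the augmented cost, which is exactly why it belongs in the calculation.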

AI visibility and citation rates

In the era of Generative Engine Optimization (GEO), traditional rankings are being replaced by “citations.” You need to track how often your product data or blog content is being pulled into answers by Perplexity, ChatGPT, or Google’s AI Mode.
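One practical way to track this is to re-run a fixed set of buyer-style prompts against each engine on a schedule and record which URLs each answer cites. The Python sketch below assumes you already collect those observations as `(engine, prompt, cited_urls)` tuples; the data format and the `citation_rates` helper are hypothetical, and how you collect the answers (manually or with a monitoring tool) is up to you.

```python
from collections import defaultdict
from urllib.parse import urlparse


def citation_rates(observations, your_domain):
    """Share of tracked prompts, per engine, whose answer cited your domain.

    observations: iterable of (engine, prompt, cited_urls) tuples
    (a hypothetical format; adapt it to however you log answers).
    """
    asked = defaultdict(int)
    cited = defaultdict(int)
    for engine, _prompt, cited_urls in observations:
        asked[engine] += 1
        hosts = {urlparse(url).netloc.lower() for url in cited_urls}
        # Count subdomains such as www.example-store.com as your domain too.
        if any(h == your_domain or h.endswith("." + your_domain) for h in hosts):
            cited[engine] += 1
    return {engine: cited[engine] / asked[engine] for engine in asked}


observations = [
    ("perplexity", "best burr grinder under $200",
     ["https://www.example-store.com/guides/burr-grinders"]),
    ("perplexity", "how to descale an espresso machine",
     ["https://another-site.com/descaling"]),
    ("chatgpt", "best burr grinder under $200", []),
]
print(citation_rates(observations, "example-store.com"))
# {'perplexity': 0.5, 'chatgpt': 0.0}
```

Tracking the rate per engine over time, with the prompt set held constant, is what lets you attribute a change in citations to a change in your content.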

Our data shows that merchants using AI-generated product schema markup see a 23% higher click-through rate in rich product results. If your content isn’t structured for LLM retrieval, you risk being invisible to the 58% of US consumers who now rely on AI for product recommendations. This isn’t just about traffic volume; it is about brand authority in the places where your customers are actually asking questions.
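Whether an LLM drafts the markup or you template it from catalog data, the output should be valid schema.org `Product` JSON-LD. A minimal Python sketch, assuming a simple product record with hypothetical keys (`name`, `sku`, `price`, `currency`, `in_stock`, `url`, `image`, `description`); it templates the markup deterministically rather than calling an LLM, which keeps prices and availability exactly in sync with the catalog.

```python
import json


def product_jsonld(product: dict) -> str:
    """Render a schema.org Product (with a single Offer) as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": product["name"],
        "sku": product["sku"],
        "description": product["description"],
        "image": product["image"],
        "offers": {
            "@type": "Offer",
            "url": product["url"],
            "price": f"{product['price']:.2f}",
            "priceCurrency": product["currency"],  # ISO 4217 code, e.g. "USD"
            "availability": (
                "https://schema.org/InStock"
                if product["in_stock"]
                else "https://schema.org/OutOfStock"
            ),
        },
    }
    return json.dumps(data, indent=2)


record = {
    "name": "Conical Burr Grinder",
    "sku": "GR-10",
    "description": "Conical burr grinder with 40 grind settings.",
    "image": "https://example-store.com/images/gr-10.jpg",
    "url": "https://example-store.com/product/gr-10",
    "price": 89.0,
    "currency": "USD",
    "in_stock": True,
}
print('<script type="application/ld+json">')
print(product_jsonld(record))
print("</script>")
```

Before shipping, validate the output with Google's Rich Results Test or the Schema Markup Validator; if an LLM drafts the markup instead, the same validation step catches malformed or hallucinated fields.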

Revenue lift and conversion

Ultimately, stakeholders care about the bottom line. Research indicates that LLM-driven SEO strategies can drive a 3–15% revenue uplift. This lift isn’t just from new traffic; it’s the result of better intent matching. When an LLM explains why your specific SKU solves a user’s problem, the traffic arriving at your WooCommerce store is pre-qualified. Unlike traditional search, which often delivers “window shoppers,” AI-mediated discovery tends to deliver users who are further down the purchase funnel.
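The “pre-qualified traffic” claim is testable in your own analytics by comparing revenue per session across referral sources. A minimal Python sketch, assuming you can export sessions as `(referrer_url, revenue)` pairs; the referrer host list is an assumption you would need to maintain, and because some AI apps don't pass a referrer, AI-driven sessions may be undercounted.

```python
from collections import defaultdict
from urllib.parse import urlparse

# Assumed referrer hosts for AI assistants; extend as new engines appear.
AI_REFERRERS = {
    "chatgpt.com", "chat.openai.com", "perplexity.ai", "www.perplexity.ai",
    "gemini.google.com", "copilot.microsoft.com",
}


def channel(referrer: str) -> str:
    """Bucket a session by its referrer host (a deliberately coarse heuristic)."""
    host = urlparse(referrer).netloc.lower()
    if host in AI_REFERRERS:
        return "ai_assistant"
    if host.startswith(("www.google.", "www.bing.")):
        return "traditional_search"
    return "other"


def revenue_per_session(sessions):
    """sessions: iterable of (referrer_url, order_revenue), one entry per session."""
    revenue = defaultdict(float)
    count = defaultdict(int)
    for referrer, amount in sessions:
        ch = channel(referrer)
        revenue[ch] += amount
        count[ch] += 1
    return {ch: revenue[ch] / count[ch] for ch in count}


# Illustrative input only, not real results.
sessions = [
    ("https://chatgpt.com/", 120.0), ("https://chatgpt.com/", 0.0),
    ("https://www.google.com/", 0.0), ("https://www.google.com/", 0.0),
    ("https://www.google.com/", 60.0), ("", 0.0),
]
print(revenue_per_session(sessions))
# {'ai_assistant': 60.0, 'traditional_search': 20.0, 'other': 0.0}
```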

Optimization levers to improve your margins

Once you have established a baseline ROI, you can pull several technical and strategic levers to improve your margins. In our internal testing at ContentGecko, we’ve found that “bigger” is rarely “better” when it comes to model choice for specific ecommerce tasks.

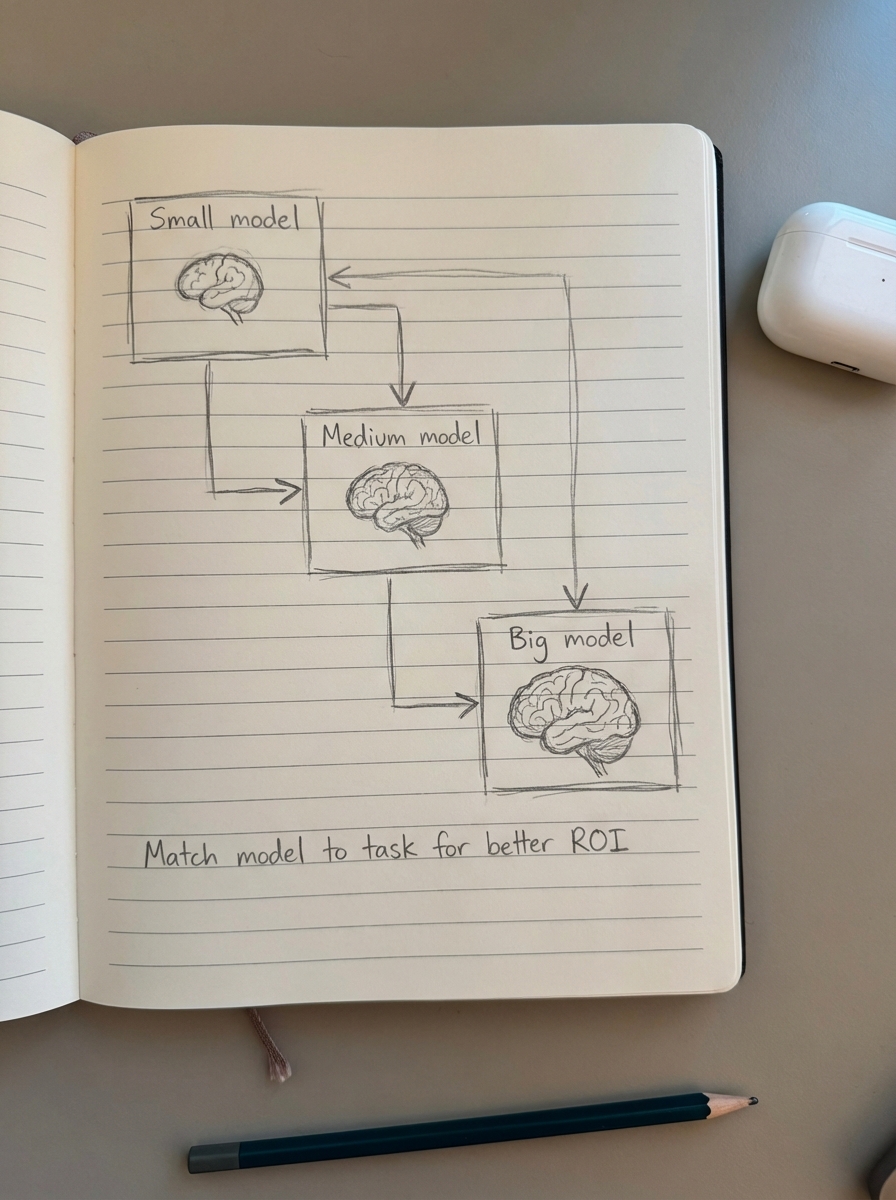

Model size and inference costs

Using a frontier model like Claude 3.5 Sonnet for every simple meta description is an ROI killer. For high-volume, repetitive tasks – like generating alt text for 10,000 SKUs or writing basic product summaries – smaller open-weight models like Llama 3 can deliver around 90% of the quality at a fraction of the cost. I always recommend matching the model’s “reasoning power” to the complexity of the task to avoid burning budget on over-engineered outputs.
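In practice this can be a small routing rule in your content pipeline. A minimal Python sketch; the task names, the tier labels, and the per-million-token prices are all placeholders (not actual vendor pricing), so substitute the models and rates you actually use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str                 # placeholder label, not a real model ID
    usd_per_m_tokens: float   # placeholder blended price per million tokens


SMALL = ModelTier("small-open-weight", 0.20)
FRONTIER = ModelTier("frontier", 15.00)

# Hypothetical high-volume, low-complexity tasks.
SIMPLE_TASKS = {"alt_text", "meta_description", "product_summary"}


def pick_model(task: str, needs_reasoning: bool = False) -> ModelTier:
    """Send simple, templated tasks to the small tier; everything else to frontier."""
    if task in SIMPLE_TASKS and not needs_reasoning:
        return SMALL
    return FRONTIER


def batch_cost(tier: ModelTier, items: int, tokens_per_item: int) -> float:
    """Estimated spend for a batch job, in dollars."""
    return items * tokens_per_item * tier.usd_per_m_tokens / 1_000_000


# Alt text for 10,000 SKUs at roughly 300 tokens each (illustrative numbers).
tier = pick_model("alt_text")
print(tier.name, round(batch_cost(tier, 10_000, 300), 2))
print("frontier", round(batch_cost(FRONTIER, 10_000, 300), 2))
```

The router defaults to the stronger model and opts tasks into the cheaper tier explicitly, so a new or unclassified task never silently gets a weaker model.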

RAG vs. fine-tuning

For WooCommerce stores, Retrieval-Augmented Generation (RAG) is almost always a better ROI play than fine-tuning. Fine-tuning is expensive, requires deep technical expertise, and bakes a snapshot of your catalog into the model that goes stale the moment your inventory changes or a product goes out of stock. RAG instead lets the LLM “read” your current catalog data at generation time. This catalog-synced approach ensures that a blog post about “best coffee makers” doesn’t link to a discontinued item, keeping your content accurate and your conversion paths open without manual intervention.
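The core of a catalog-synced RAG step is to retrieve only currently sellable products and constrain the model to them. A minimal Python sketch: retrieval here is naive keyword overlap (production systems often use embedding search instead), the `stock_status` values mirror the ones WooCommerce uses (`instock`, `outofstock`, `onbackorder`), and the `Product` type, helper names, and prompt wording are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Product:
    sku: str
    name: str
    description: str
    stock_status: str  # "instock", "outofstock" or "onbackorder"


def retrieve(catalog, query, k=3):
    """Return up to k in-stock products ranked by keyword overlap with the query."""
    terms = set(query.lower().split())
    scored = []
    for p in catalog:
        if p.stock_status != "instock":
            continue  # never ground content in products customers can't buy
        words = set(f"{p.name} {p.description}".lower().split())
        score = len(terms & words)
        if score > 0:
            scored.append((score, p))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(query, products):
    """Ground the generation step in the retrieved, in-stock products only."""
    context = "\n".join(f"- {p.name} (SKU {p.sku}): {p.description}" for p in products)
    return (
        "Write a buying-guide section. Recommend only the products listed below.\n"
        f"In-stock products:\n{context}\n\nTopic: {query}"
    )


catalog = [
    Product("CM-100", "Drip coffee maker", "12-cup programmable coffee maker", "instock"),
    Product("CM-200", "Pour-over coffee maker", "Glass pour-over coffee maker", "outofstock"),
    Product("GR-10", "Burr grinder", "Conical burr grinder for coffee", "instock"),
]
hits = retrieve(catalog, "best coffee maker")
print([p.sku for p in hits])  # ['CM-100', 'GR-10']
print(build_prompt("best coffee maker", hits))
```

Backordered items are excluded here; whether to include them is a merchandising decision rather than a technical one.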

Prompting and architecture

Optimization isn’t just about the model; it’s about the context window. Feeding an LLM too much irrelevant data increases token costs and raises the risk of hallucinations. By structuring your data with clean schema and intent-based clusters, you reduce the “reasoning” the model has to do. This lowers latency, improves output quality, and directly reduces the cost per page produced.
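A minimal Python sketch of that discipline: keep only the fields a task needs and stop adding records once an approximate token budget is reached. The field list is an assumption, the records are assumed to arrive sorted by relevance, and the four-characters-per-token estimate is a rough rule of thumb for English text; use your provider's tokenizer for exact counts.

```python
import json

# Fields assumed to matter for a product-description prompt; adjust per task.
RELEVANT_FIELDS = ("name", "short_description", "price", "attributes")


def approx_tokens(text: str) -> int:
    """Rough estimate: about four characters per token for English text."""
    return max(1, len(text) // 4)


def prune_record(record: dict) -> dict:
    """Drop fields the model doesn't need (internal metadata, HTML blobs, etc.)."""
    return {k: record[k] for k in RELEVANT_FIELDS if k in record}


def build_context(records, budget_tokens: int) -> str:
    """Serialize pruned records, most relevant first, until the budget is used up."""
    lines, used = [], 0
    for record in records:
        line = json.dumps(prune_record(record), ensure_ascii=False)
        cost = approx_tokens(line)
        if used + cost > budget_tokens:
            break
        lines.append(line)
        used += cost
    return "\n".join(lines)


records = [
    {"name": "Burr grinder", "price": "89.00", "short_description": "Conical burrs.",
     "meta_data": [{"key": "_internal_sync_id", "value": "a1b2"}]},
    {"name": "Drip coffee maker", "price": "49.00", "short_description": "12-cup carafe."},
]
print(build_context(records, budget_tokens=200))
```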

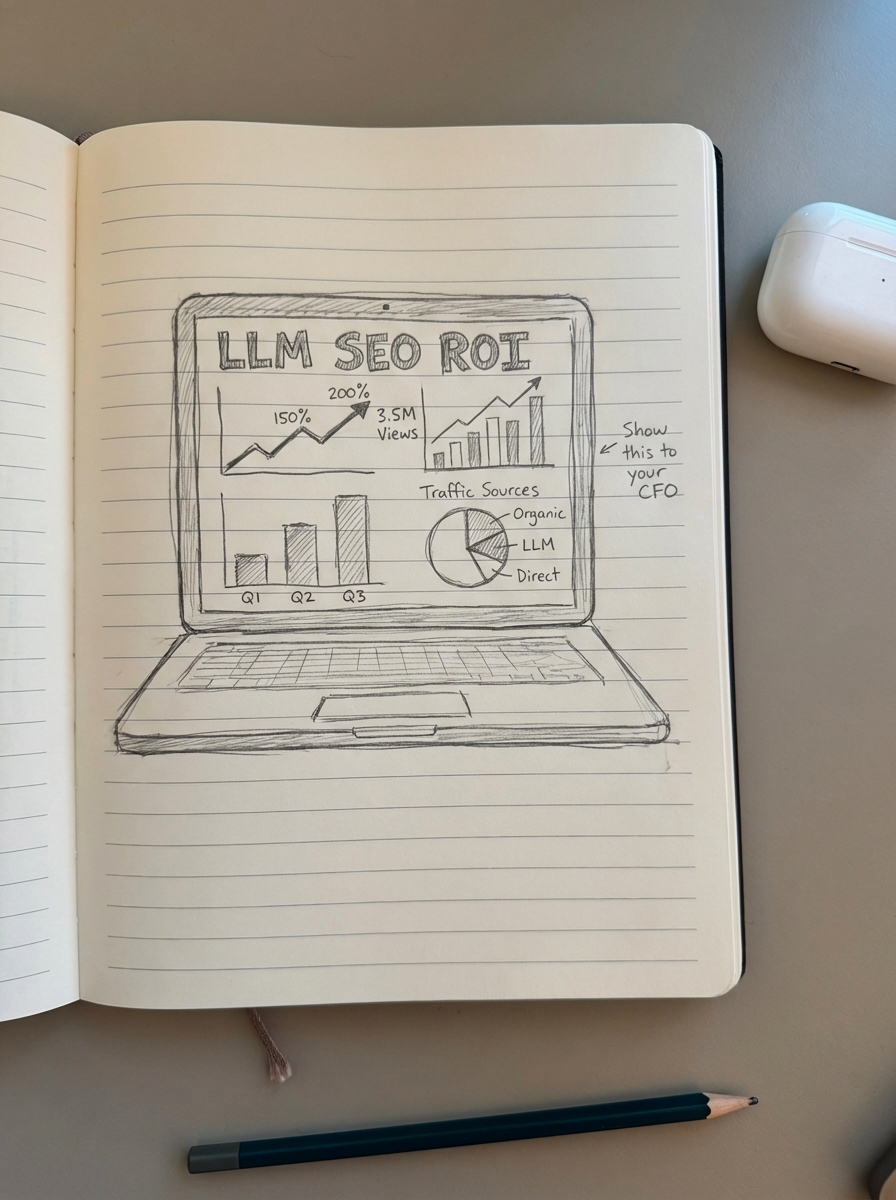

Justifying LLM spend to stakeholders

To get sign-off on larger SEO automation budgets, you must speak the language of the CFO by presenting a clear “Investment Curve.” Ecommerce SEO ROI typically reaches 2.6x at 12 months and 5.2x after three years. When justifying the spend, emphasize that AI is not an “add-on” but a replacement for aging, inefficient processes.

- The Defensive Argument: If we don’t optimize for AI search, we risk losing the 34% of clicks currently being absorbed by AI Overviews and generative answers.

- The Efficiency Argument: We can increase our content output 10x while reducing our per-page cost by 80%, effectively decoupling our growth from our headcount.

- The Conversion Argument: Organic customers show 20–30% higher lifetime value than customers acquired through paid channels. Scaling organic content through automation makes it the most cost-effective customer acquisition channel available to us.
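To turn the Investment Curve into something a CFO can audit, compute the cumulative multiple month by month from your own figures. A minimal Python sketch, assuming “ROI multiple” means cumulative attributable return divided by cumulative investment (definitions vary, so agree on one with finance first); the function names and sample numbers are hypothetical.

```python
from itertools import accumulate


def roi_curve(monthly_investment, monthly_return):
    """Cumulative return / cumulative investment for each month (1.0 = breakeven).

    Assumes non-zero spend in the first month, otherwise the ratio is undefined.
    """
    invested = accumulate(monthly_investment)
    returned = accumulate(monthly_return)
    return [r / i for r, i in zip(returned, invested)]


def breakeven_month(curve):
    """First month (1-indexed) where the cumulative multiple reaches 1.0, else None."""
    for month, multiple in enumerate(curve, start=1):
        if multiple >= 1.0:
            return month
    return None


# Illustrative figures: flat spend, return ramping up as content is indexed and cited.
spend = [1000] * 6
attributed = [100, 300, 700, 1200, 1800, 2500]
curve = roi_curve(spend, attributed)
print([round(m, 2) for m in curve])
print("breakeven month:", breakeven_month(curve))
```

Presenting the whole curve, rather than a single 12-month number, makes the early negative months explicit, which is usually what finance wants to see before approving a compounding investment.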

How ContentGecko scales your ROI

The manual coordination of LLM prompts, WooCommerce product data, and SEO monitoring is where most internal projects fail. ContentGecko was built to solve this by providing a direct WooCommerce integration that automates the entire loop from planning to publishing.

Instead of paying a team to manually update blog posts when prices change or products go out of stock, our platform syncs with your catalog to ensure every piece of content remains a conversion asset. You can track all of this through our Ecommerce SEO Dashboard, which breaks down performance by category and product type. This level of granularity lets you see the real-world ROI of your automation efforts as it happens, making it easy to report results back to your stakeholders.
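To make the idea of catalog sync concrete, here is the kind of check such a sync performs, as a minimal Python sketch. It is a generic illustration, not ContentGecko's implementation: the data shapes and the `flag_stale_posts` helper are hypothetical, loosely modeled on WooCommerce product fields (`stock_status`, and `price` returned as a string).

```python
def flag_stale_posts(posts, products, published_prices):
    """Return {post_id: [reasons]} for posts whose linked products changed.

    posts: {post_id: [sku, ...]} products linked from each post
    products: {sku: {"stock_status": str, "price": str}} current catalog snapshot
    published_prices: {(post_id, sku): str} price quoted when the post went live
    """
    flags = {}
    for post_id, skus in posts.items():
        reasons = []
        for sku in skus:
            product = products.get(sku)
            if product is None:
                reasons.append(f"{sku}: no longer in catalog")
            elif product["stock_status"] == "outofstock":
                reasons.append(f"{sku}: out of stock")
            elif published_prices.get((post_id, sku)) not in (None, product["price"]):
                reasons.append(f"{sku}: price changed to {product['price']}")
        if reasons:
            flags[post_id] = reasons
    return flags


posts = {101: ["CM-100", "GR-10"], 102: ["CM-300"]}
products = {
    "CM-100": {"stock_status": "outofstock", "price": "49.00"},
    "GR-10": {"stock_status": "instock", "price": "89.00"},
}
published_prices = {(101, "GR-10"): "79.00"}
for post_id, reasons in flag_stale_posts(posts, products, published_prices).items():
    print(post_id, reasons)
```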

TL;DR

Measuring LLM ROI requires looking at labor savings (often 70%+), AI citation rates, and revenue per session rather than just token costs. You can improve your margins by choosing smaller, task-specific models and using RAG for catalog accuracy. Justify the investment by showing how automation scales your highest-LTV channel while protecting your brand against the projected 25% decline in traditional search traffic.